We recently obtained a clock that flew on a Soyuz space mission.1 The clock, manufactured in 1984, contains over 100 integrated circuits on ten circuit boards. Why is the clock so complicated? In this blog post, I examine the clock's circuitry and explain why so many chips were needed. The clock also provides a glimpse into the little-known world of Soviet aerospace electronics and how it compares to American technology.

"Onboard space clock" from a Soyuz mission. The clock provides the time, an alarm, and a stopwatch.

The Soyuz series of spacecraft was designed for the Soviet space program as part of the race to the Moon. Soyuz first flew in 1966 and has made more than 140 flights over the past 50 years. The spacecraft (below) consists of three parts. The round section on the left is the orbital or habitation module, holding cargo, equipment, and living space. The descent module in the middle is the only part that returns to Earth; the astronauts are seated in the descent module during launch and reentry. Finally, the service module on the right has the main engine, solar panels, and other systems.

Soyuz TMA-7 spacecraft departing from the International Space Station, 2006. Photo from NASA.

The descent module contains the spacecraft's control panel (below).2 Note the digital clock in the upper left. Early Soyuz spacecraft used an analog clock, but from 1986 to 2002, the spacecraft used a digital clock.3 The digital clock was also used in the Mir space station. The clock was eliminated from later Soyuz spacecraft, which used two computer screens on the control panel in place of the earlier controls.

Control panel from a Soyuz spacecraft. The digital clock is in the upper left of the panel. The screen in the middle is a TV monitor. Photo from Stanislav Kozlovskiy, CC BY-SA 4.0.

A closer look at the clock

The diagram below shows the clock's labels translated into English. The clock has three functions: the time, an alarm, and a stopwatch. The "Clock of Current Time"5 mode shows the current Moscow time on the six upper LED digits, while "Announcement" shows the alarm time. The alarm can be set to a particular time; at that time, the clock triggers a relay activating an external circuit in the spacecraft.4 The clock is set using the "Correction" mode; digits are incremented using the "Enter" button. The lower half of the unit is the stopwatch; the bottom four LEDs display elapsed minutes and seconds. The lower pushbutton stops, starts, or resets the stopwatch.6 Finally, the power switch at the right turns the clock on.

Front of the clock. The red text is the translation of the Russian labels into English.

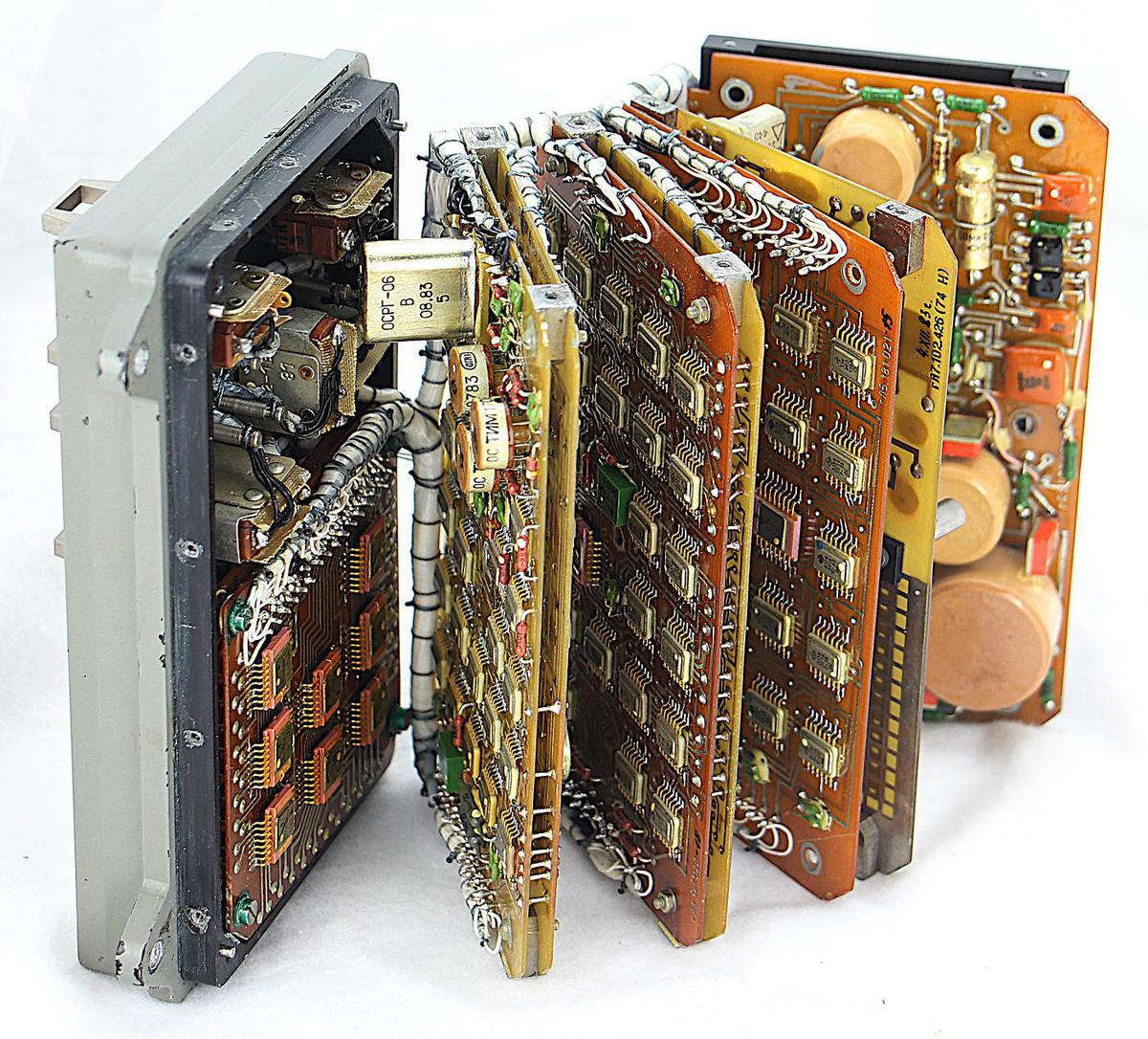

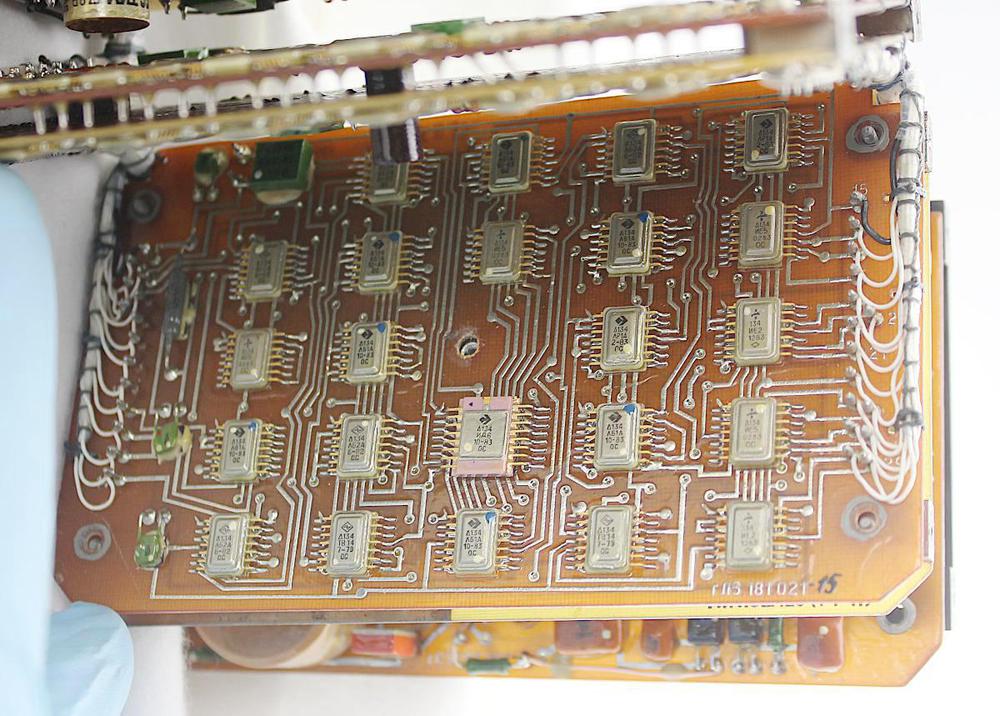

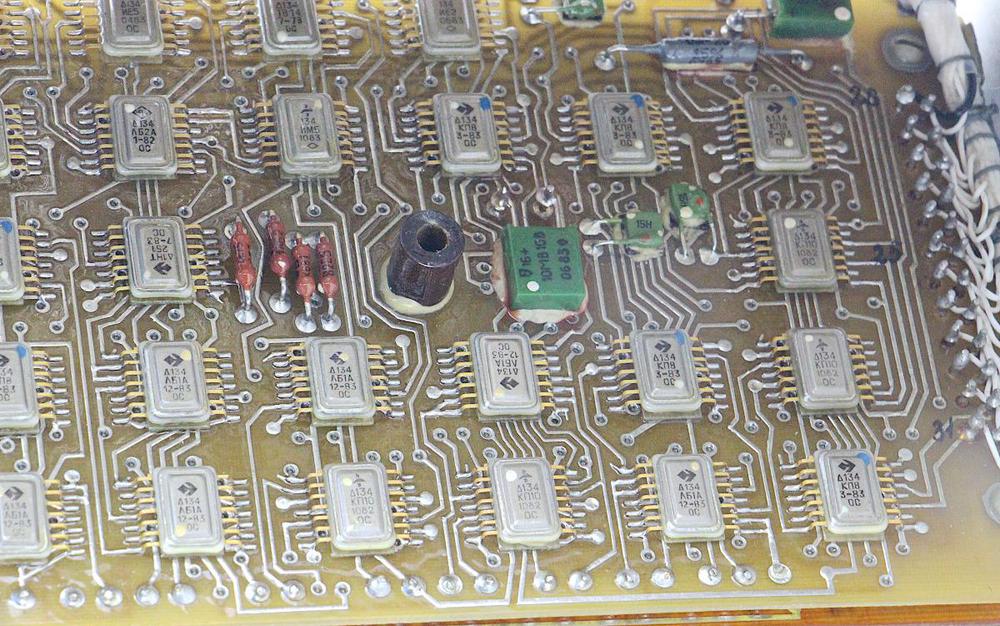

We wanted to see what was inside the clock, of course, so Marc unscrewed the cover and removed it from the clock. This revealed a dense stack of circuit boards inside. The clock was much more complex than I expected, with ten circuit boards crammed full of surface-mount ICs and other components. The components are mounted on two-layer printed-circuit boards, a common construction technique. The boards use a mixture of through-hole components and surface-mount components. That is, components such as resistors and capacitors were mounted by inserting their leads through holes in the boards. The surface-mount integrated circuits, on the other hand, were soldered to pads on top of the board. This is more advanced than 1984-era American consumer electronics, which typically used larger through-hole integrated circuits and didn't move to surface-mount ICs until the late 1980s. (American aerospace computers, in contrast, had used surface-mount ICs since the 1960s.)

Space clock from Soyuz with the cover removed.

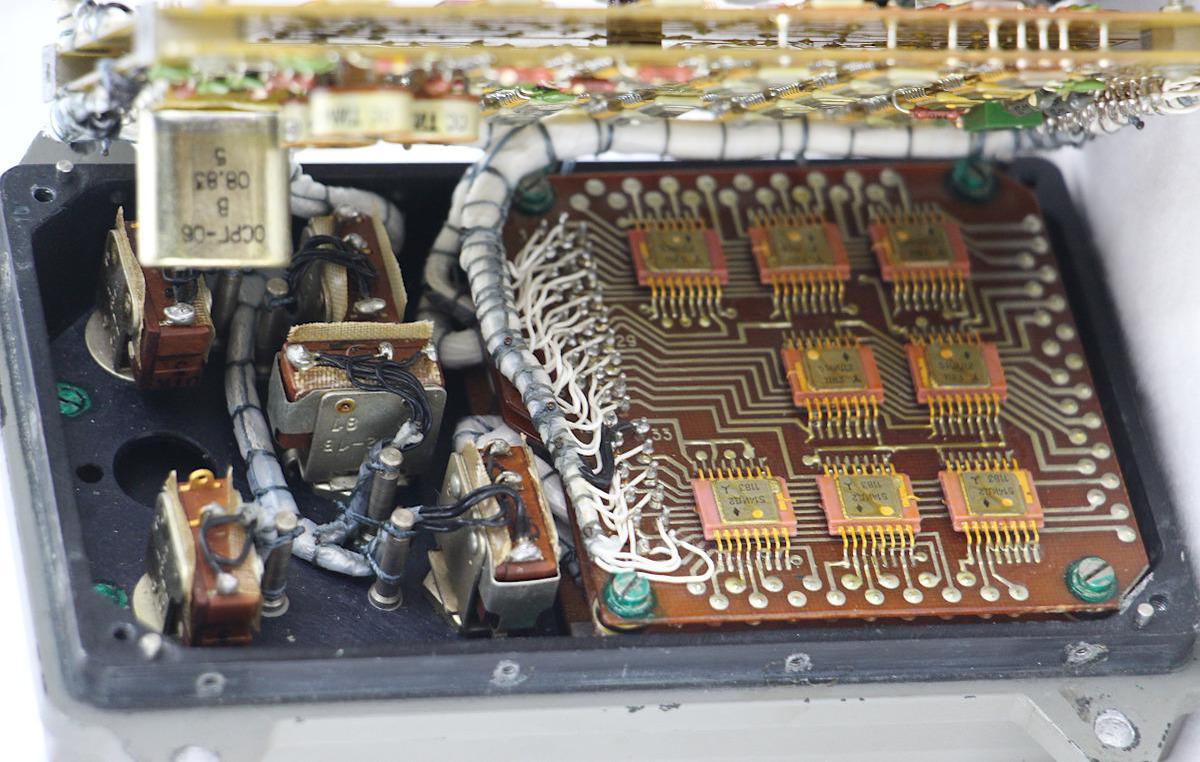

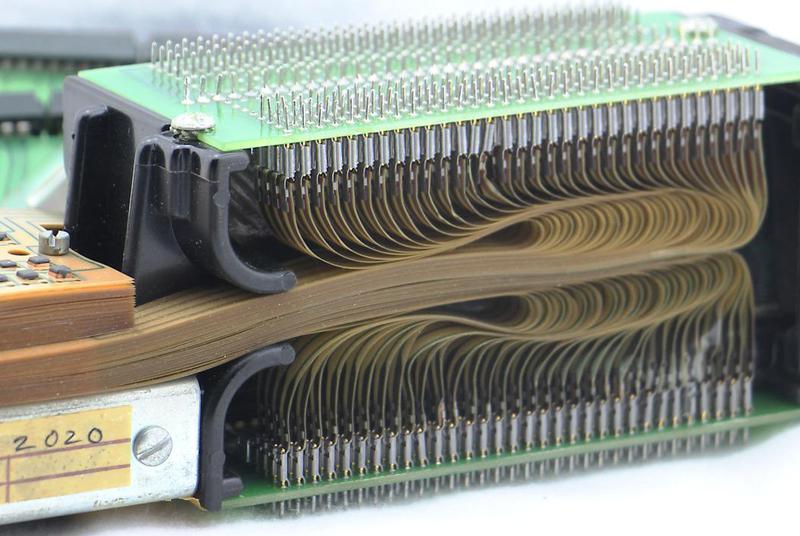

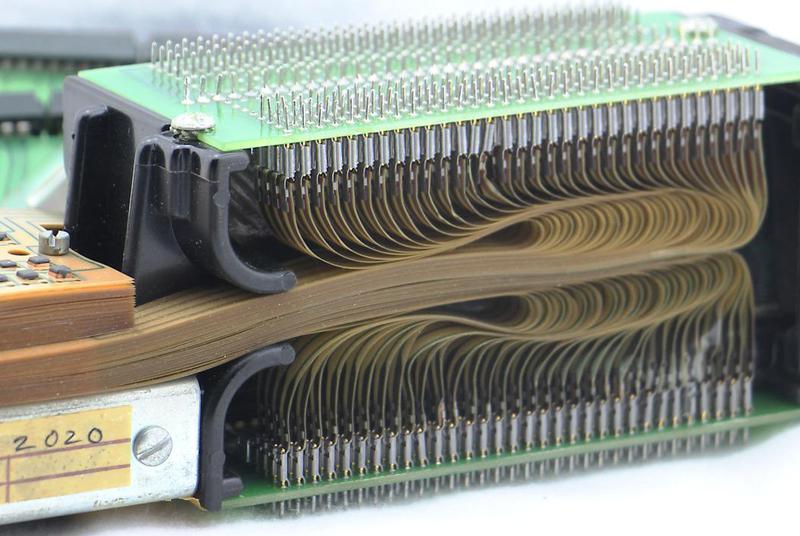

One interesting feature of the clock is that the boards are connected by individual wires that are bundled into wiring harnesses (below). (I expected the boards to plug into a backplane, or be connected by ribbon cables.) The boards have rows of pins along the sides, with wires soldered to these pins. These wires were gathered into bundles, wrapped in plastic, and then carefully laced into wiring harnesses that were tied to the boards.

The clock has point-to-point wires, wrapped into neat harnesses.

At first, we thought that further disassembly of the clock would be impossible without unsoldering all the wires, but then we realized that the wiring harnesses were designed so the boards could be opened like a book (see below). This allowed us to examine the boards more closely. Inconveniently, some pairs of boards were soldered together at the front by short wires, so we couldn't see both sides of these boards.

The wiring bundles are arranged so the boards can swing apart.

In the photo above, you can see the numerous integrated circuits in the clock. These are mostly 14-pin "flat pack" integrated circuits in metal packages, unlike contemporary American integrated circuits which were usually packaged in black epoxy. There are also some 16-pin integrated circuits, encased in pink ceramic.

The circuitry inside

The next step was to examine the circuitry in more detail, which I'll discuss starting at the back of the clock. A 19-pin connector7 linked the clock to the rest of the spacecraft. The spacecraft provided the clock with 24 volts through this connector, as well as external timing pulses and stopwatch control signals. The clock could signal the spacecraft through relay contacts when the alarm time was reached.

This 19-pin connector interfaces the clock to the spacecraft.

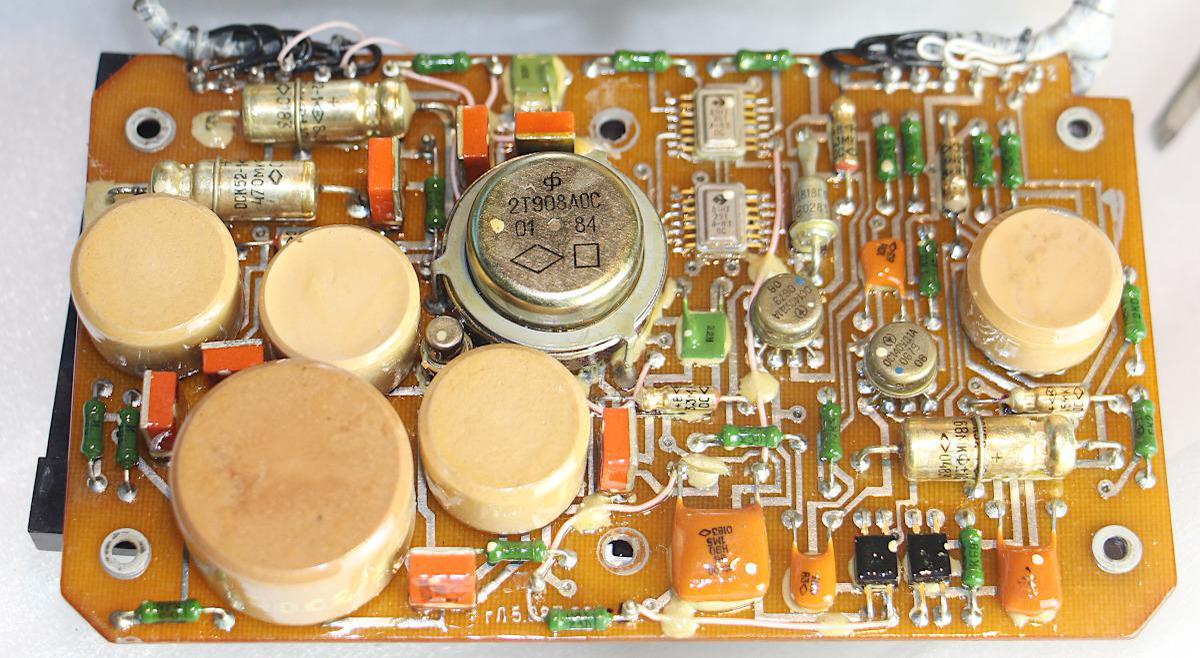

The two circuit boards at the back of the clock are the power supply, which was more complex than I expected. The first board (below) is a switching power supply that converts the spacecraft's 24-volt power to the 5 volts required by the integrated circuits. The round ceramic components are inductors, ranging from simple coils to complex 16-pin inductors. The control circuitry includes two op amps in metal can packages. Two other packages that look like integrated circuits each hold four transistors. Next to them, a bullet-shaped Zener diode sets the output voltage level. The large round switching power transistor is visible in the middle of the board. You might expect the power supply to be a simple buck converter. However, the power supply uses a more complicated design to provide electrical isolation between the spacecraft and the clock. I'm not sure, though, why isolation was necessary.8

Board 1 implements a switching power supply to produce 5 volts for the clock.

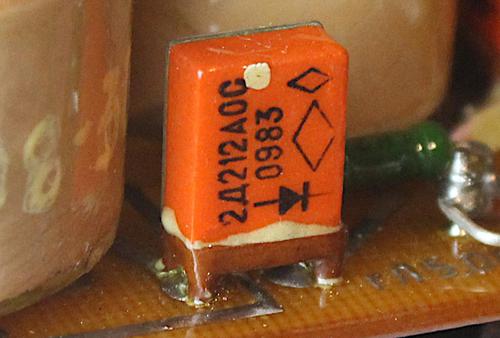

Many of the components in the power supply look different from American components. While American resistors are usually labeled with colored bands, the Soviet resistors are green cylinders with their values printed on them. The Soviet diodes have orange rectangular packages (below), unlike the usual cylindrical American diodes. The power transistor in the middle of the board is round, lacking the metal flanges of American power transistors in "TO-3" packages. I don't think the Soviet packaging is better or worse, but it's interesting to see how components from the two countries diverged.

The power supply uses 1 amp diodes in rectangular orange packages. The "OC" indicates a higher-quality military part.

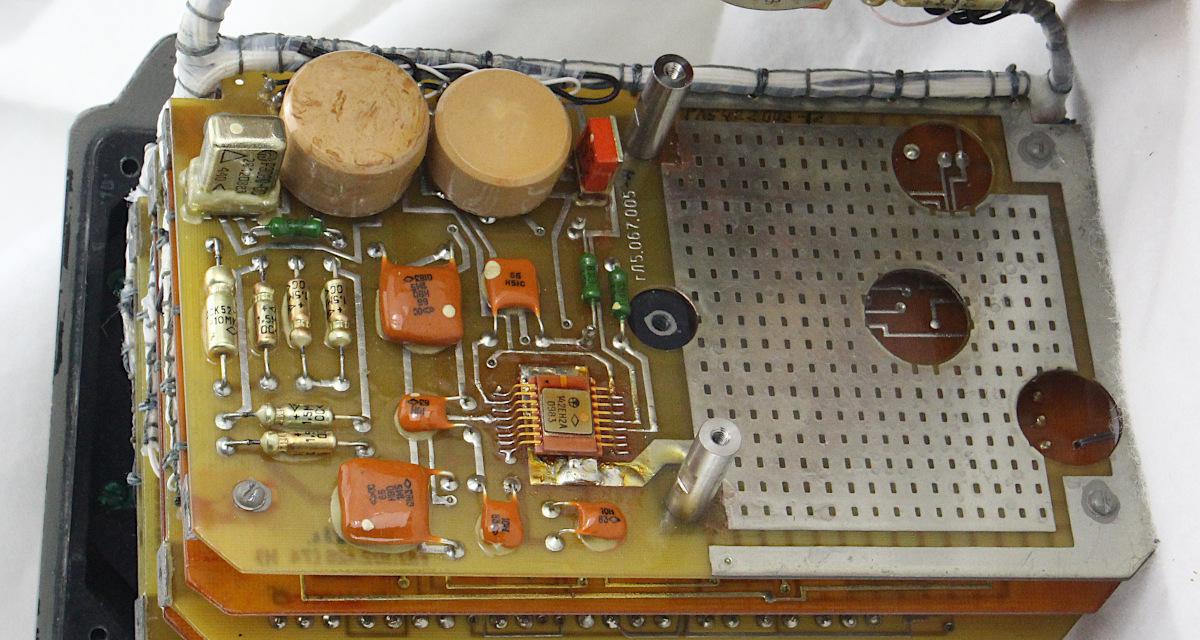

The second board is also part of the power supply, but is much simpler. It has inductors and capacitors to filter the power, as well as a linear voltage regulator chip (pink) to produce 15 volts for the op amp ICs in the first board. The voltage regulator chip has two large metal tabs on the bottom that were soldered to the circuit board to dissipate heat. Strangely, the board has three large holes on the right side. The obvious explanation would be that these holes made room for tall components, a situation that arises on another board. However, there are no components that fit the holes on this board. Thus, I suspect this board was originally designed for a different device and reused in the clock.

Power supply board 2 is half-empty, with the right half apparently acting as a heat sink.

The remaining boards are filled with digital logic integrated circuits. Board 3 (below) and board 5 (which is similar) implement the current time and alarm time functions. Each board contains six BCD counter chips for the six digits (hours, minutes, and seconds).9 In addition, each digit counter requires a logic chip to control when it is incremented and another chip to control when it is reset, depending on whether the clock is being set or is running. (This is one reason why so many chips are required.) The pink chip on the board controls which digit is modified when setting the clock.10

Board 3 is filled with digital logic integrated circuits. Pins on either side connect the board to the wiring harnesses.
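To make the counting behavior concrete, here is a minimal Python sketch of a six-digit BCD time-of-day counter. It is a behavioral model under my own assumptions, not a description of the actual chips: the class and method names are hypothetical, the rollover points follow the note on the digit counters (hours tens from 0 to 2, minutes and seconds tens rolling over at 6), and the no-carry behavior of `bump_digit` in Correction mode is a guess.

```python
# Sketch of a six-digit BCD time-of-day counter (HH:MM:SS).
# Assumption: digits are stored as [hours tens, hours units, minutes tens,
# minutes units, seconds tens, seconds units]; names are hypothetical.

class BcdTimeCounter:
    # Roll-over point of each digit: decade counters for the units digits,
    # divide-by-6 for the minutes/seconds tens digits, 0-2 for hours tens.
    MODULI = (3, 10, 6, 10, 6, 10)

    def __init__(self, digits=(0, 0, 0, 0, 0, 0)):
        self.digits = list(digits)

    def tick(self):
        """Running mode: advance one second, rippling carries leftward."""
        for i in range(5, -1, -1):
            self.digits[i] += 1
            if self.digits[i] < self.MODULI[i]:
                break  # no carry, so higher digits are untouched
            self.digits[i] = 0  # this digit wrapped; carry into the next one
        # After 23:59:59 the hours digits would read 24; clear both to 00.
        if self.digits[0] == 2 and self.digits[1] == 4:
            self.digits[0] = self.digits[1] = 0

    def bump_digit(self, index):
        """Setting mode (assumed behavior): increment one selected digit
        without carrying into its neighbor. Invalid hours are not checked."""
        self.digits[index] = (self.digits[index] + 1) % self.MODULI[index]

    def __str__(self):
        d = self.digits
        return f"{d[0]}{d[1]}:{d[2]}{d[3]}:{d[4]}{d[5]}"


clock = BcdTimeCounter((2, 3, 5, 9, 5, 9))
clock.tick()
print(clock)  # 00:00:00
```

In hardware, the increment and reset decisions that this code makes with a few `if` statements fall to the per-digit logic chips described above.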

Board 4 (below) has two functions. First, it controls whether the clock displays the current time or the alarm time. This is implemented with a selection chip for each digit. Second, the board signals the spacecraft when the current time reaches the alarm time. This is implemented with multiple chips to step through each digit, compare the times, and determine if they match. Thus, even though the functions of this board seem simple, they require a whole board of chips. The connections at the bottom of the board link board 4 to board 5. The board is connected to board 3 through the wiring harness.

Board 4 selects between the current time and the alarm time. It also compares the two values to determine when the alarm time has been reached.

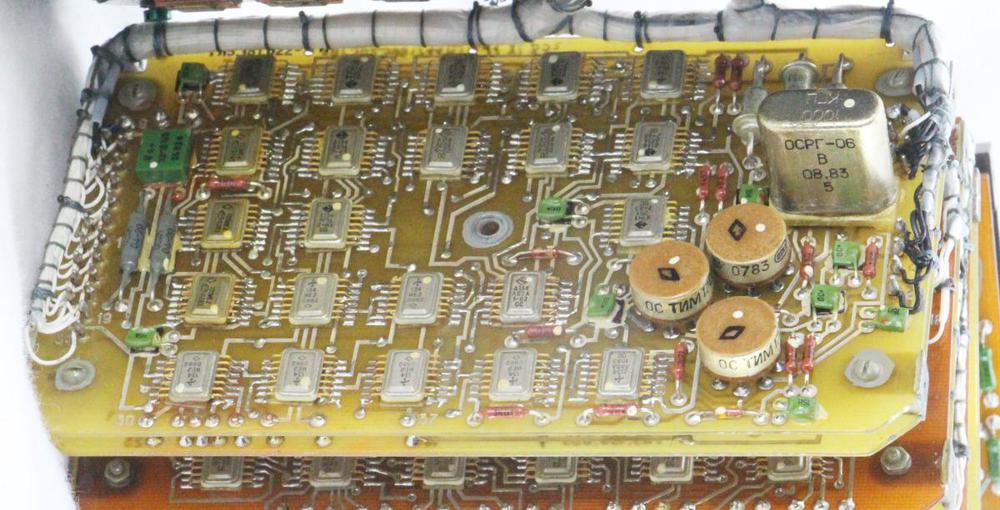
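The two jobs of board 4 can be modeled in a few lines of Python. This is a hedged behavioral sketch: the function names are hypothetical, and the scan in `alarm_matches` only mirrors the idea of stepping through the digits one at a time; I don't know the board's actual sequencing logic.

```python
# Behavioral sketch of board 4, assuming digits are lists of BCD values in
# HH:MM:SS order. Function names are hypothetical.

def select_display(current, alarm, show_alarm):
    """One 2-to-1 selector per digit: route either the current-time digit
    or the alarm-time digit to that digit's display driver."""
    return [a if show_alarm else c for c, a in zip(current, alarm)]


def alarm_matches(current, alarm):
    """Serial comparison: step through the digits one at a time, and let any
    mismatch clear a 'match' flag that was set at the start of the scan."""
    match = True
    for step in range(len(current)):
        if current[step] != alarm[step]:
            match = False  # a single differing digit means no alarm yet
    return match


now = [0, 7, 3, 0, 0, 0]
wake = [0, 7, 3, 0, 0, 0]
print(select_display(now, [1, 2, 0, 0, 0, 0], show_alarm=True))  # [1, 2, 0, 0, 0, 0]
print(alarm_matches(now, wake))  # True
```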

Some of the boards have more circuitry than just digital logic. For instance, boards 6 and 7 have pulse transformers to electrically isolate the control signals fed into the clock through the 19-pin connector. (In modern circuits, this role would be performed by an optoisolator.) These transformers look a bit like mushrooms or miniature water towers, and can be seen in the photo below. Board 7 also has a quartz crystal, the metal rectangle below.11

Board 7 has a 1 MHz crystal that provides the timing signals for the clock. It also has three round pulse transformers that isolate the control signals from the spacecraft.

The two functions of board 7 (below) are to generate the clock's timing pulses and to implement the stopwatch. The quartz crystal generates accurate 1 megahertz pulses. These pulses are reduced to one-second pulses by six BCD counters; each counter chip divides the frequency by 10. These timing pulses are used by the rest of the clock. To implement the stopwatch, the board has four BCD counters for the four digits. It also has control logic to start, stop, and reset the stopwatch. The three pulse transformers allow the spacecraft to control the stopwatch when certain events happen. Additional chips handle these mode changes.

Board 7 contains the stopwatch circuitry, as well as the quartz crystal that generates timings for the whole clock. Wires along the front connect the board to Board 6.
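The divide-by-10 chain is easy to simulate. The sketch below (function name hypothetical) counts pulses through cascaded decade counters. Each stage passes one pulse to the next when it wraps from 9 to 0, so six stages divide by 10^6 and turn 1 MHz into 1 Hz. It models pulse counts only, not propagation delays or the real chips' wiring.

```python
# Sketch of cascaded decade (divide-by-10) counters. Name is hypothetical.

def decade_chain_output(input_pulses, stages=6):
    """Return how many pulses emerge from the last of `stages` decade counters."""
    counters = [0] * stages
    output_pulses = 0
    for _ in range(input_pulses):
        for i in range(stages):
            counters[i] += 1
            if counters[i] < 10:
                break  # no carry this time, so later stages see nothing
            counters[i] = 0  # wrapped from 9 to 0: pass one pulse onward
        else:
            output_pulses += 1  # every stage wrapped: one pulse out of the chain
    return output_pulses


# One second of 1 MHz input yields exactly one output pulse: 10**6 / 10**6.
print(decade_chain_output(1_000_000))  # 1
```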

Boards eight and nine drive the LED displays. Each LED digit requires a chip to illuminate the appropriate segments of the 7-segment LED based on the BCD (binary-coded decimal) value. These BCD-to-7-segment driver chips are the pink 16-pin chips on the board.12 Since the clock displays 10 digits in total, 10 driver chips are used. Eight driver chips are on board 8, while board 9 has two chips along with numerous current-limiting resistors for the LEDs. The switches to control the clock are also visible in the photo below.

Board 8 is an LED driver board holding eight 7-segment driver chips. Board 9 (underneath) has two more driver chips and many resistors.

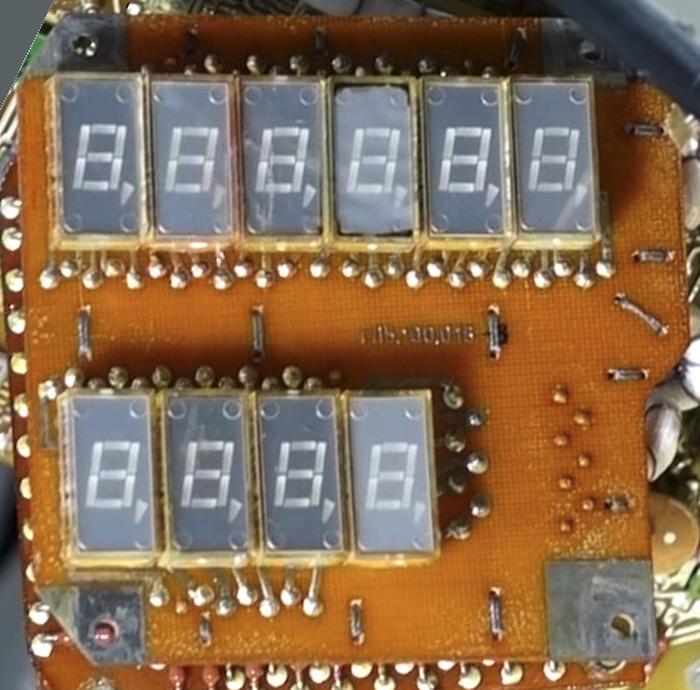
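Here's a sketch of what each BCD-to-7-segment driver does, using the conventional segment names a through g. The digit shapes are the common ones; I'm assuming, not claiming, that the Soviet driver chips draw every digit this way, and blanking the unused codes 10 to 15 is also an assumption.

```python
# Sketch of BCD-to-7-segment decoding. Assumption: conventional segment names
# (a = top, then clockwise b..f, g = middle) and conventional digit shapes.

SEGMENTS = {
    0: "abcdef",
    1: "bc",
    2: "abdeg",
    3: "abcdg",
    4: "bcfg",
    5: "acdfg",
    6: "acdefg",
    7: "abc",
    8: "abcdefg",
    9: "abcdfg",
}


def decode_bcd(bcd):
    """Return the segments to light for a 4-bit BCD input (0-15)."""
    if not 0 <= bcd <= 15:
        raise ValueError("BCD input must fit in 4 bits")
    return SEGMENTS.get(bcd, "")  # codes 10-15 are not decimal digits: blank


def segment_mask(bcd):
    """Pack the lit segments into a 7-bit value, bit 0 = a ... bit 6 = g."""
    return sum(1 << "abcdefg".index(s) for s in decode_bcd(bcd))


print(decode_bcd(4), bin(segment_mask(4)))  # bcfg 0b1100110
```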

Finally, board 10 (below) holds the ten LED digits. Each digit consists of a seven-segment LED, along with a comma. I think one of the commas is wired up to indicate something; we'll find out what when we power up the clock.

Board 10 holds the ten LED digits. Photo from Marc Verdiell.

Soviet integrated circuits

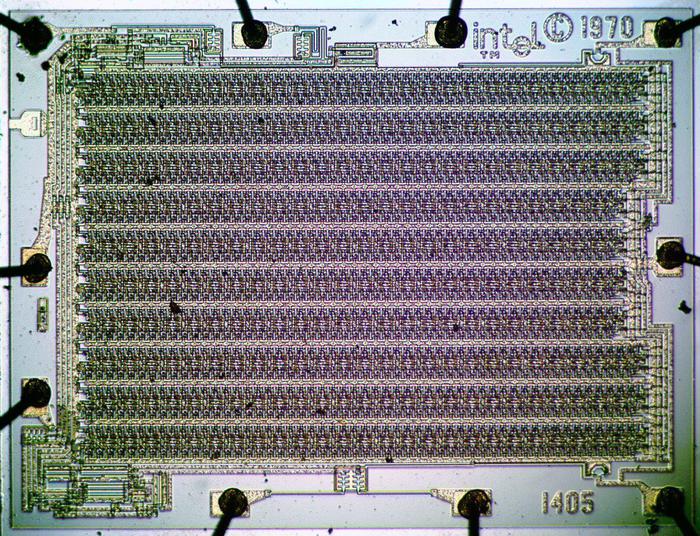

Next, I'll discuss the integrated circuits used in the clock. The clock is built mostly from TTL integrated circuits, a type of digital logic that was popular in the 1970s through the 1990s. (If you've done hobbyist digital electronics, you probably know the 7400-series of TTL chips.) TTL chips were fast, inexpensive and reliable. Their main drawback, however, was that a TTL chip didn't contain much functionality. A basic TTL chip contained just a few logic gates, such as 4 NAND gates or 6 inverters, while a more complex TTL chip implemented a functional unit such as a 4-bit counter. Eventually, TTL lost out to CMOS chips (the chips in modern computers), which use much less power and are much denser.

Because each chip in the Soyuz clock didn't do very much, the clock required many boards of chips to perform its functions. For example, each digit of the clock requires a counter chip, as well as a couple of logic chips to increment and clear that digit as needed, and a chip to drive the associated 7-segment LED display. Since the clock displays 10 digits, that's 40 chips already. Additional chips handle the buttons and switches, implement the alarm, keep track of the stopwatch state, run the oscillator, and so forth, pushing the total to over 100 chips.

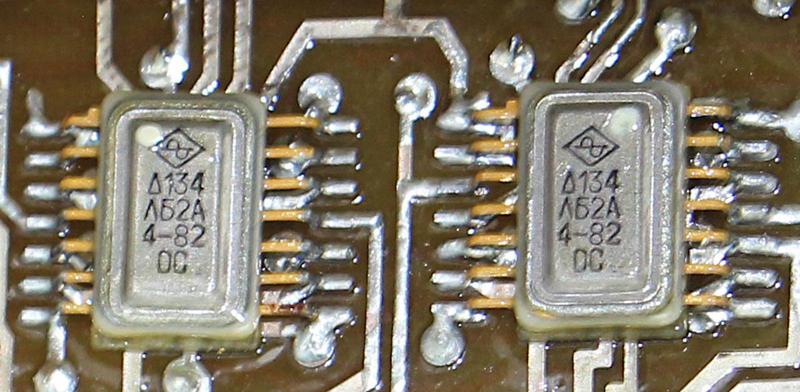

One nice thing about Soviet ICs is that the part numbers are assigned according to a rational system, unlike the essentially random numbering of American integrated circuits.13 Two letters in the part number indicate the function of the chip, such as a logic gate, counter, flip flop, or decoder. For example, the IC below is labeled "Δ134 ΛБ2A". The series number, 134, indicates the chip is a low-power TTL chip. The "Л" (L) indicates a logic chip (Логические), with "ЛБ" indicating NAND/NOR logic gates. Finally, "2" indicates a specific chip in the ЛБ category. (The 134ЛБ2 chip's functionality is two 4-input NAND gates and an inverter, a chip that doesn't have an American counterpart.) 14

Two integrated circuits inside the clock.
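The designation system is regular enough to parse mechanically. The sketch below is hypothetical: it applies the Greek-for-Cyrillic substitutions described in the notes, splits the label into series, function group, and number, and builds the commercial "К" name used in databooks. The lookup tables contain only the codes mentioned in this post, and the trailing letter is treated as an opaque variant suffix.

```python
import re

# Hypothetical parser for labels like those on the clock's chips.
# Substitutions printed on the chips (from the notes): Greek Λ stands for
# Cyrillic Л, and Greek Δ for Cyrillic Д.
LABEL_FIXES = str.maketrans({"\u039b": "\u041b", "\u0394": "\u0414"})

# Only the codes mentioned in this post; everything else reports "unknown".
SERIES = {"134": "low-power TTL"}
FUNCTION_GROUPS = {"ЛБ": "NAND/NOR logic gates", "ИД": "decoder"}

# Optional prefix, series digits, two-letter Cyrillic group, number, suffix.
LABEL_RE = re.compile(r"^\D*?(\d+)([\u0410-\u042f]{2})(\d+)([A-Z\u0410-\u042f]?)$")


def parse_label(label):
    """Split a printed label such as 'Δ134 ΛБ2A' into designation fields."""
    text = label.translate(LABEL_FIXES).replace(" ", "")
    match = LABEL_RE.match(text)
    if match is None:
        raise ValueError(f"unrecognized label: {label!r}")
    series, group, number, suffix = match.groups()
    return {
        "series": series,
        "series_type": SERIES.get(series, "unknown"),
        "group": group,
        "group_meaning": FUNCTION_GROUPS.get(group, "unknown"),
        "number": number,
        "suffix": suffix,  # variant letter; its meaning isn't covered here
        "databook_name": "К" + series + group + number,  # commercial form
    }


print(parse_label("Δ134 ΛБ2A")["databook_name"])  # К134ЛБ2
```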

The logos on the integrated circuits reveal that they were manufactured by a variety of companies. Some of the chips in the clock are shown below, along with the name of the manufacturer and its English translation. More information on Soviet semiconductor logos can be found here and here.

By looking up the logo on each chip, the manufacturer can be determined.

Comparison with US technology

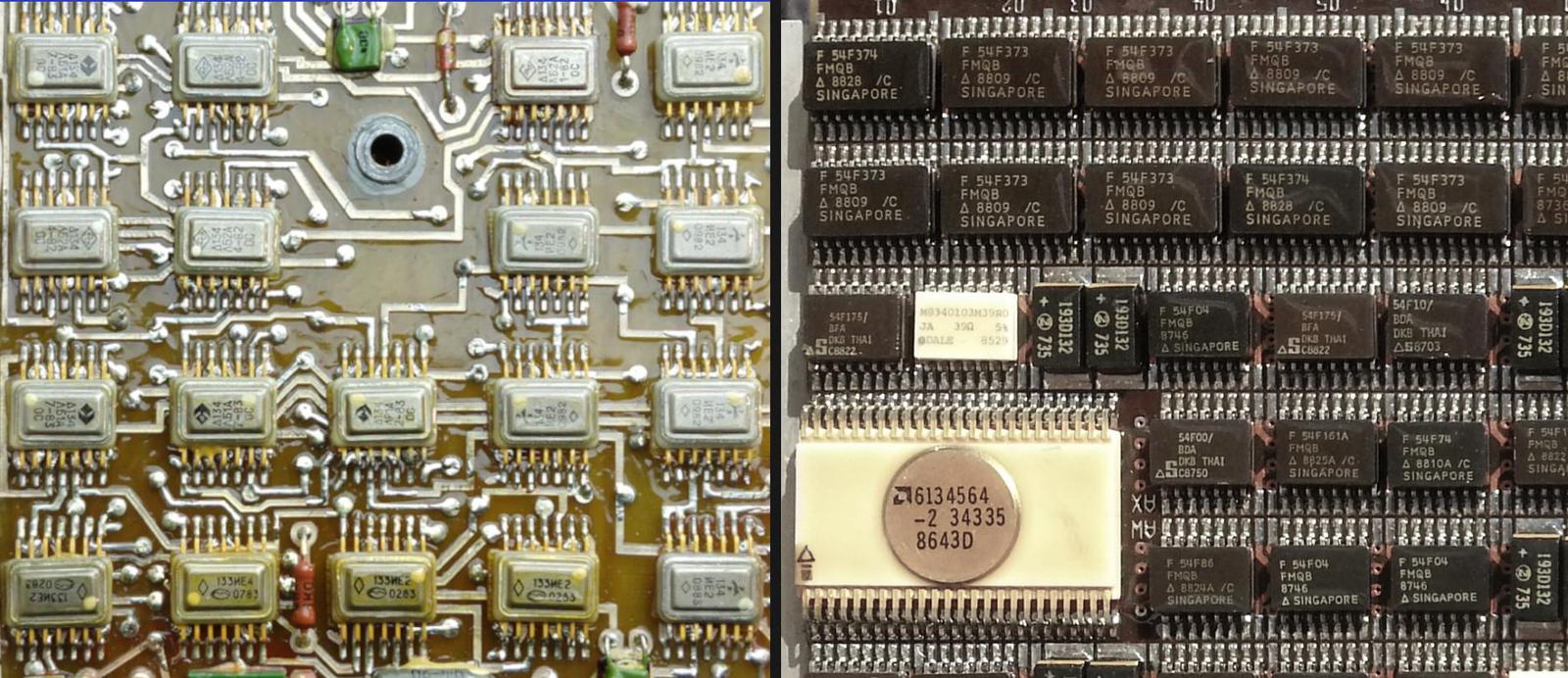

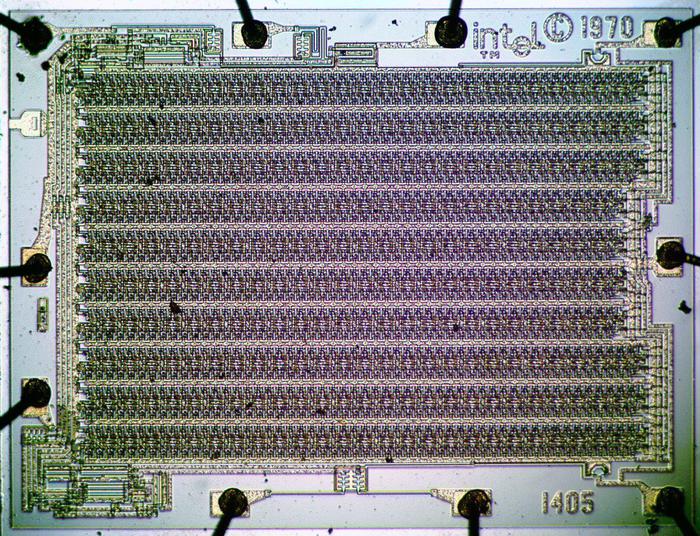

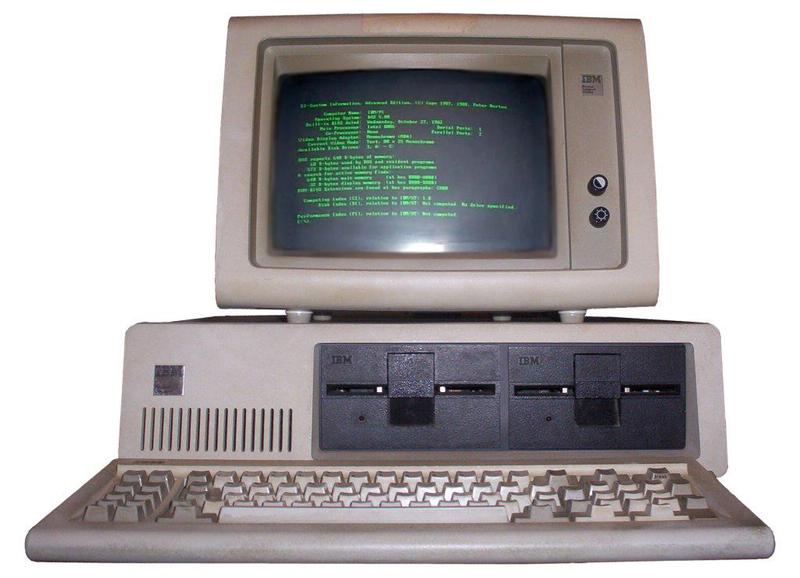

How does the Soyuz clock compare with US technology? When I first looked at the clock I would have guessed it was manufactured in 1969, not 1984, based on the construction and the large number of simple flat-pack chips. In comparison, American technology in 1984 produced the IBM PC/AT and the Apple Macintosh. It seemed absurd for the clock to use boards full of TTL chips a decade after the US had produced single-chip digital watches.16 However, the comparison turned out to be not so simple.

To compare the Soyuz clock with contemporary 1980s American space electronics, I looked at a board from the Space Shuttle's AP-101S computer.17 The photo below shows circuitry from the Soyuz clock (left) and the Shuttle computer (right). Although the Shuttle computer is technologically more advanced, the gap was smaller than I expected. Both systems were built from TTL chips, although the Shuttle computer used a faster generation of chips. Many Shuttle chips are slightly more complex; note the larger 20-pin chips at the top of the board. The large white chip is significantly more complex; it is an AMD Am2960 memory error correction chip. The Shuttle's printed-circuit board is more advanced, with multiple layers rather than two layers, allowing the chips to be packed 50% more densely. At the time, the USSR was estimated to be about 8 to 9 years behind the West in integrated circuit technology;15 this is in line with the differences I see between the two boards.

The Soyuz clock board (left) and Space Shuttle computer board (right), to the same scale. Both use surface-mount TTL chips.

What surprised me, though, were the similarities between the Shuttle computer and the Soviet clock. I expected the Shuttle computer to use 1980s microprocessors and be a generation ahead of the Soyuz clock, but instead the two systems both use TTL technology, and in many cases chips with almost identical functionality. For example, both boards use chips that implement four NAND gates. (See if you can find the 134ΛБ1A chip on the left and the 54F00 on the right.)

Conclusion

Why does the Soyuz clock contain over 100 chips instead of being implemented with a single clock chip? Soviet integrated circuit technology was about 8 years behind American technology and TTL chips were a reasonable choice at the time, even in the US. Since each TTL chip doesn't do very much, it takes boards full of chips to implement even something simple like a clock.

The next step will be to power up the clock and see the clock in operation. I've been studying the power supply so we can make this happen. I plan to write more about the power supply and other parts of the clock, so follow me @kenshirriff for details. I also have an RSS feed. Until then, you can watch Marc's video showing the disassembly of the space clock.

Notes and References

- CuriousMarc obtained the clock from an auction and it was advertised as flown to space, but I don't know which mission it was flown on. The date codes on the components inside the clock are mostly from 1983, with one from 1984, so the clock was probably manufactured in 1984. The Russian name for the clock is "Бортовые Часы Космические" (Onboard Space Clock), which is abbreviated as "БЧК". ↩

- The photo of the Soyuz console was mislabeled as from Soyuz 7K-VI. However, that mission was in the 1960s and the Soyuz-7K console was much different. A photo of the Soyuz-7K console is in this Russian article. ↩

- The digital clock was used in the Soyuz-TM version of the spacecraft. This version of the console was known as Neptune (Нептун). For details on Soyuz consoles, see The Integrated Information Display System for the Soyuz-TMA. Two Russian documents are this and this. The analog clock can be seen in a Scott Manley video here and in some photos by Steve Jurvetson. ↩

- Most of the description of how the clock works is based on my reverse engineering, so I don't guarantee that everything in this post is accurate. When we power up the clock, I'll find out what I got wrong :-) ↩

- The clock has the label "ЧТВ", which is an abbreviation for "Часы Текущего Времени". The Soyuz Crew Ops Manual translates this as "Clock of Current Time". ↩

- The Soyuz Crew Ops Manual has some information on the clock on page 35. According to the manual, the stopwatch is controlled automatically during the propulsion system engine burn timing, to measure the time between the Engine Fire command and the Engine Cut Off command. It also automatically measures the time during descent until contact. ↩

- The 19-pin connector was a standard Soviet military connector of type RS19TV (РС19ТВ in Cyrillic). I was able to find a matching connector on eBay, which we will use for powering the clock. ↩

- Cell-phone chargers, for instance, use isolated power supplies for safety, to protect the user from the dangerous 120-volt line voltage. The clock, however, is powered with 24 volts, so there's no obvious reason for electrical isolation. (The Apollo Guidance Computer's power supply, for example, used a non-isolated switching power supply.) ↩

- The clock uses a BCD counter chip for each digit, with some exceptions. The top hours digit only goes to "2" (for a 24-hour clock), so two flip flops are used instead of a counter. The top digit for minutes and seconds needs to roll over at 6 (i.e., at 60 seconds or minutes), so the clock uses a divide-by-12 chip similar to the 7492 chip. (The chip can be configured to roll over at 6 rather than 12.) ↩

- The pink chip on board 3 is a К134ИД6 decimal decoder, which selects one of 10 outputs based on the 4-bit BCD value fed into it. (The part number ИД indicates a decoder, Дешифраторы.) This chip is a copy of the American 74L42 chip. For some reason, the 16-pin integrated circuits are in pink ceramic packages, while the more common 14-pin integrated circuits are in metal packages. ↩

- The Soyuz Crew Ops Manual (page 35) specifies the clock's accuracy as 30 seconds per day, which isn't very good. In comparison, a low-cost Timex quartz watch from the early 1970s was accurate within 15 seconds per month. According to the manual, the clock could be synchronized to external time pulses. During launch/injection and autonomous orbital flight phases, the clock was synchronized to the Program-Timing Control Equipment (АПВУ). It could also be synchronized to the TV unit (KЛ110). ↩

- LED displays often use multiplexing, where one driver chip is shared across all the digits and the display rapidly cycles through the digits. This reduces the number of chips and resistors required. I'm not sure why the clock uses separate drivers instead of multiplexing. ↩

- For more information on Soviet integrated circuits, including the ones used in the clock, see the databook Интегральные микросхемы и их зарубежные аналоги (Integrated circuits and their foreign counterparts). ↩

- The Soviet IC designation system is described in detail on Wikipedia. There are a few complications that make a chip's designation different from the labels printed on the chip. Because Л and П (Cyrillic L and P) look similar on small chips, the chip labels use Λ (Greek L) in place of Л (Cyrillic L). The Greek D (Δ) may replace Cyrillic D (Д) to avoid confusion with Cyrillic А. Moreover, names for commercial chips start with K, unlike the military chips used in the clock. Thus, a chip labeled "Δ134 ΛБ2A" appears in databooks and on the web under the name "К134ЛБ2". ↩

- Two CIA reports (1974 and 1986) provide information on the lag between Soviet IC technology and Western technology. ↩

- US manufacturers implemented clocks on a single chip in the early 1970s. Mostek introduced a single-chip digital clock chip in 1972, the Mostek MM5017. In 1974, Intel introduced a watch using a low-power CMOS chip, the Intel 5810. In other words, the Soyuz clock could (roughly) have been replaced with a single chip a decade earlier. ↩

- The AP-101S computer in the Space Shuttle was part of IBM's System/4π line of avionics computers. This 64-pound computer was built from TTL integrated circuits, using the 74F00 series (Fairchild's FAST line) for improved performance. (Its memory, however, was built from high-capacity CMOS chips.) The AP-101S computer was an updated version of the AP-101B used in the earlier Space Shuttle flights. (See The new AP101S general-purpose computer (GPC) for the space shuttle and Space Shuttle Avionics Upgrade.)

At first, it surprised me that they designed both Shuttle computers from low-complexity TTL chips, but it makes sense given that the design of the earlier AP-101B computer started in 1972. Back in the 1970s, minicomputers were commonly built from TTL chips because microprocessors were new and much slower than TTL. The first Shuttle computer achieved a speed of 0.42 MIPS. This performance was respectable in 1972 but poor by 1981, when the Shuttle first flew.

To improve performance, a redesign of the computer started in 1982. The updated AP-101S computer stuck with TTL, so its performance improved only moderately, to 1.27 MIPS, about half the speed of the Motorola 68010 (1982), which ran at 2.4 MIPS. Unfortunately, the gap between TTL computers and microcomputers got exponentially worse, following Moore's law. By 1991, when the AP-101S first flew, the Motorola 68040 ran at 44 MIPS. And by the end of the Shuttle program in 2011, the Intel Core i7 processor ran at 100,000 MIPS, many orders of magnitude faster than the Shuttle computer.

So why did the Space Shuttle use mostly-obsolete TTL technology in the 1980s redesign? One reason was backward compatibility. Since the first Shuttle computer used the proprietary IBM 4π architecture, it couldn't be replaced by an off-the-shelf microprocessor. Reliability was another motivation for TTL. Commercial microprocessors weren't designed for the reliability needs of space systems and lacked features such as radiation resistance and parity-protected caches. Finally, the aerospace development cycle is very long; although the Shuttle computer redesign started in 1982, the computer wasn't used on a flight until 1991 and remained in use until 2011. The point is that there were reasons to build aerospace systems from TTL, even though microprocessors were much faster, more compact, and lower power. ↩

Understanding and repairing the power supply from a 1969 analog computer

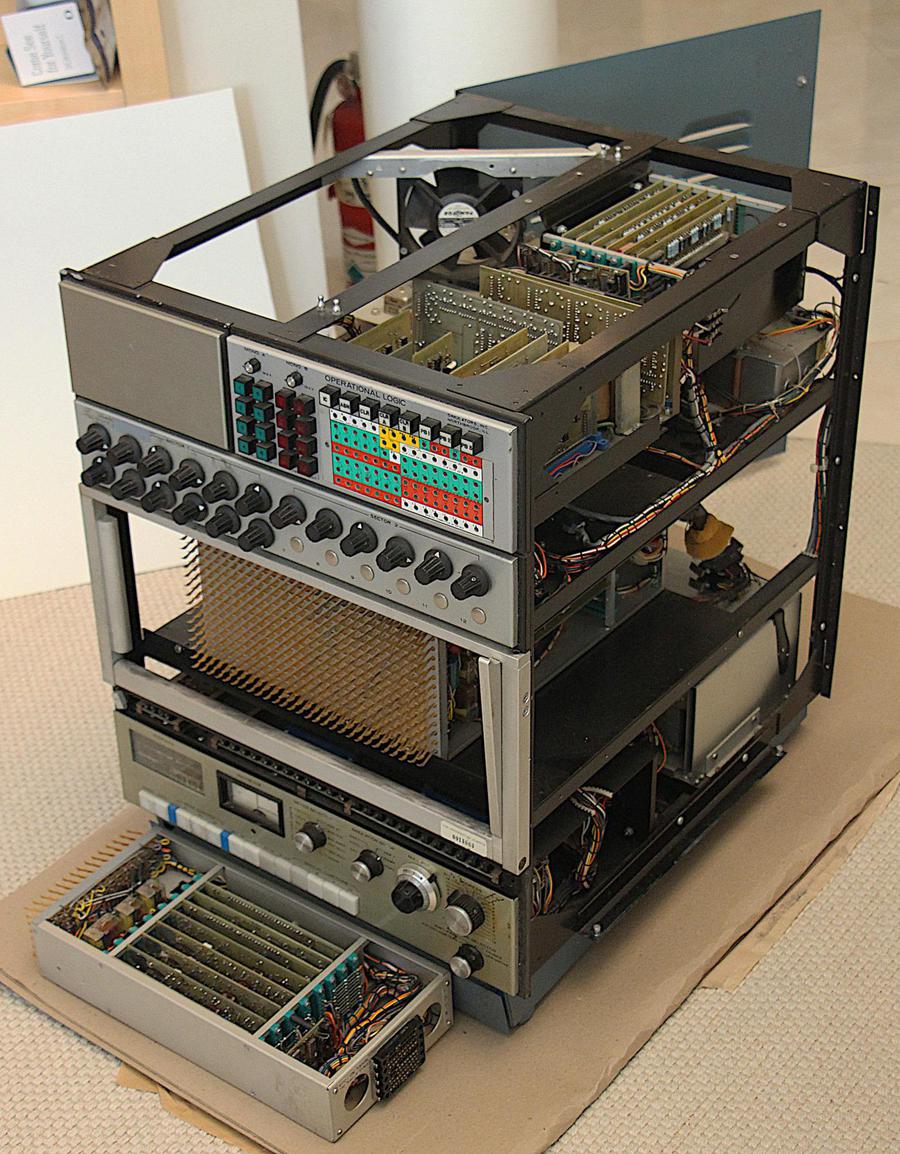

We recently started restoring a vintage1 analog computer. Unlike a digital computer that represents numbers with discrete binary values, an analog computer performs computations using physical, continuously changeable values such as voltages. Since the accuracy of the results depends on the accuracy of these voltages, a precision power supply is critical in an analog computer. This blog post discusses how this computer's power supply works, and how we fixed a problem with it. This is the second post in the series; the first post discussed the precision op amps in the computer.

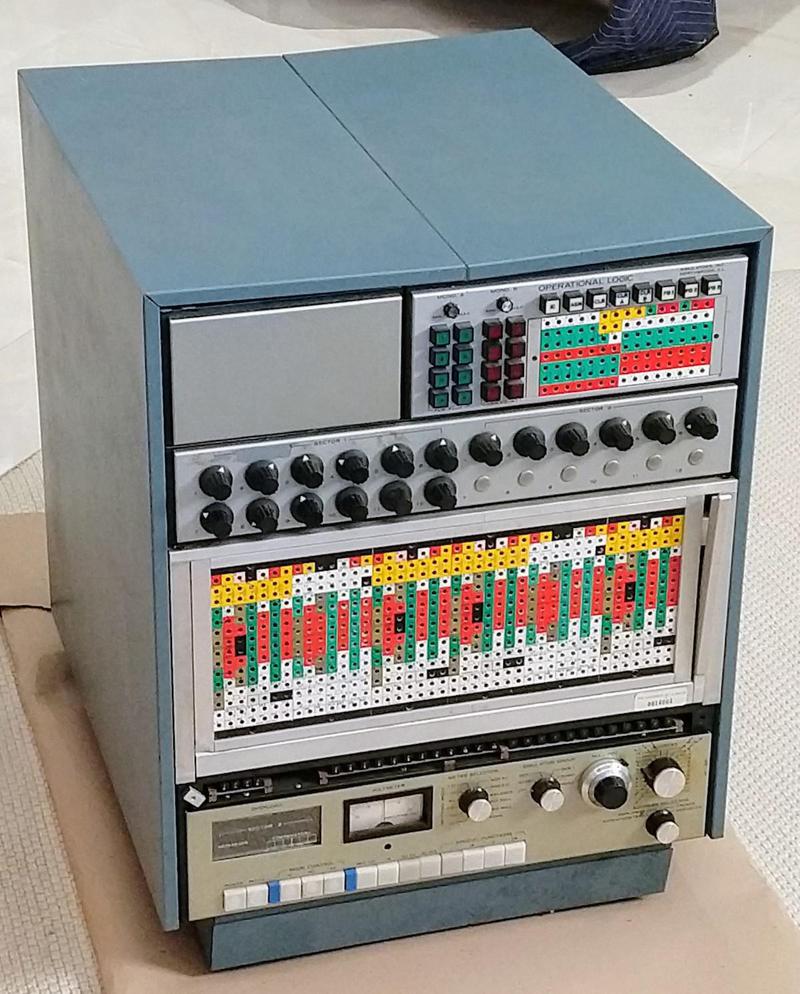

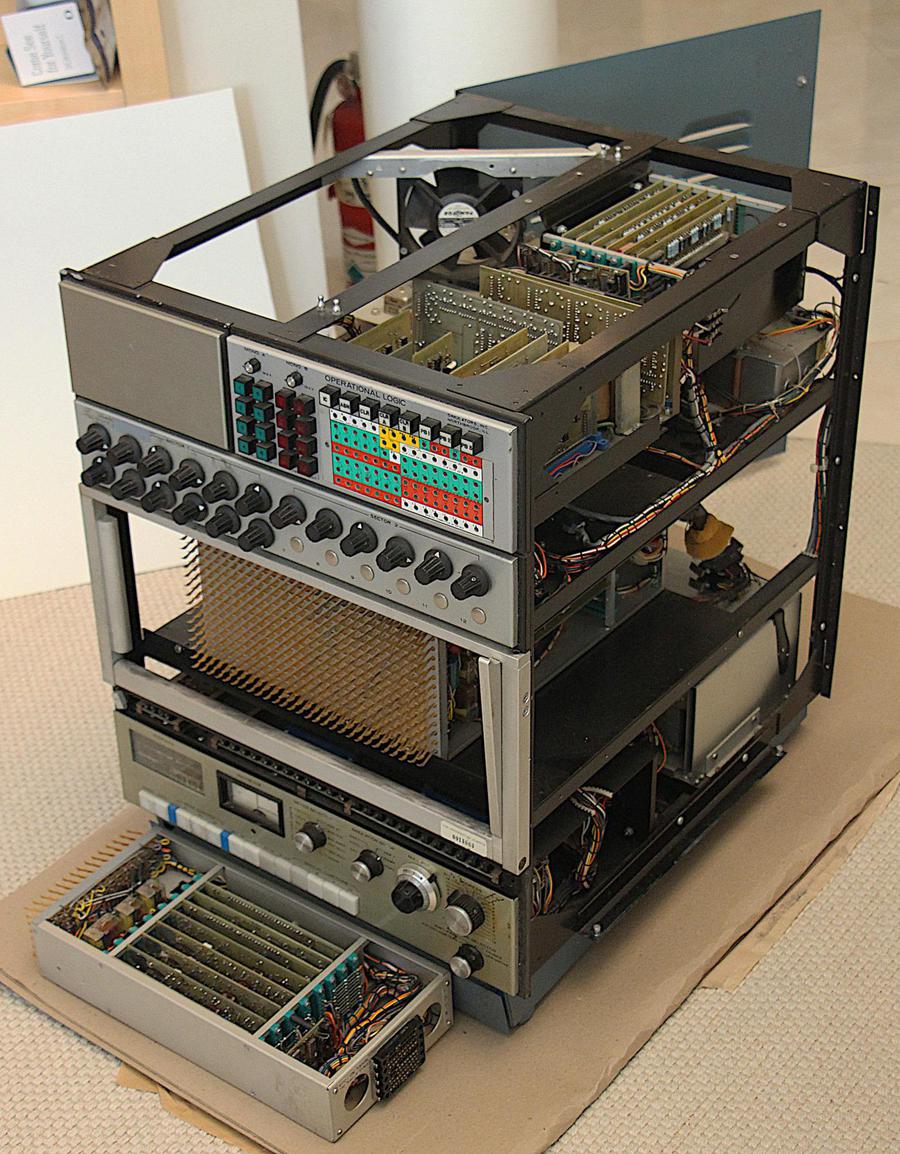

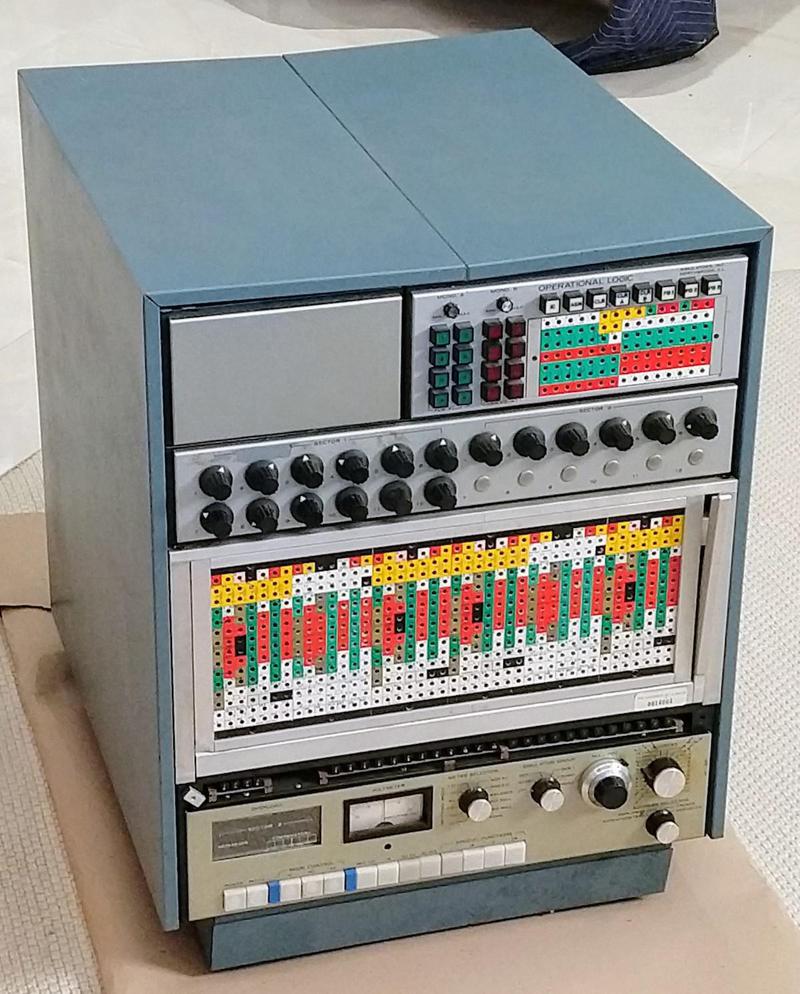

Analog computers used to be popular for fast scientific computation, especially differential equations, but pretty much died out in the 1970s as digital computers became more powerful. They were typically programmed by plugging cables into a patch panel, yielding a spaghetti-like tangle of wires. In the photo above, the colorful patch panel is in the middle. Above the patch panel, 18 potentiometers set voltage levels to input different parameters. A smaller patch panel for the digital logic is in the upper right.

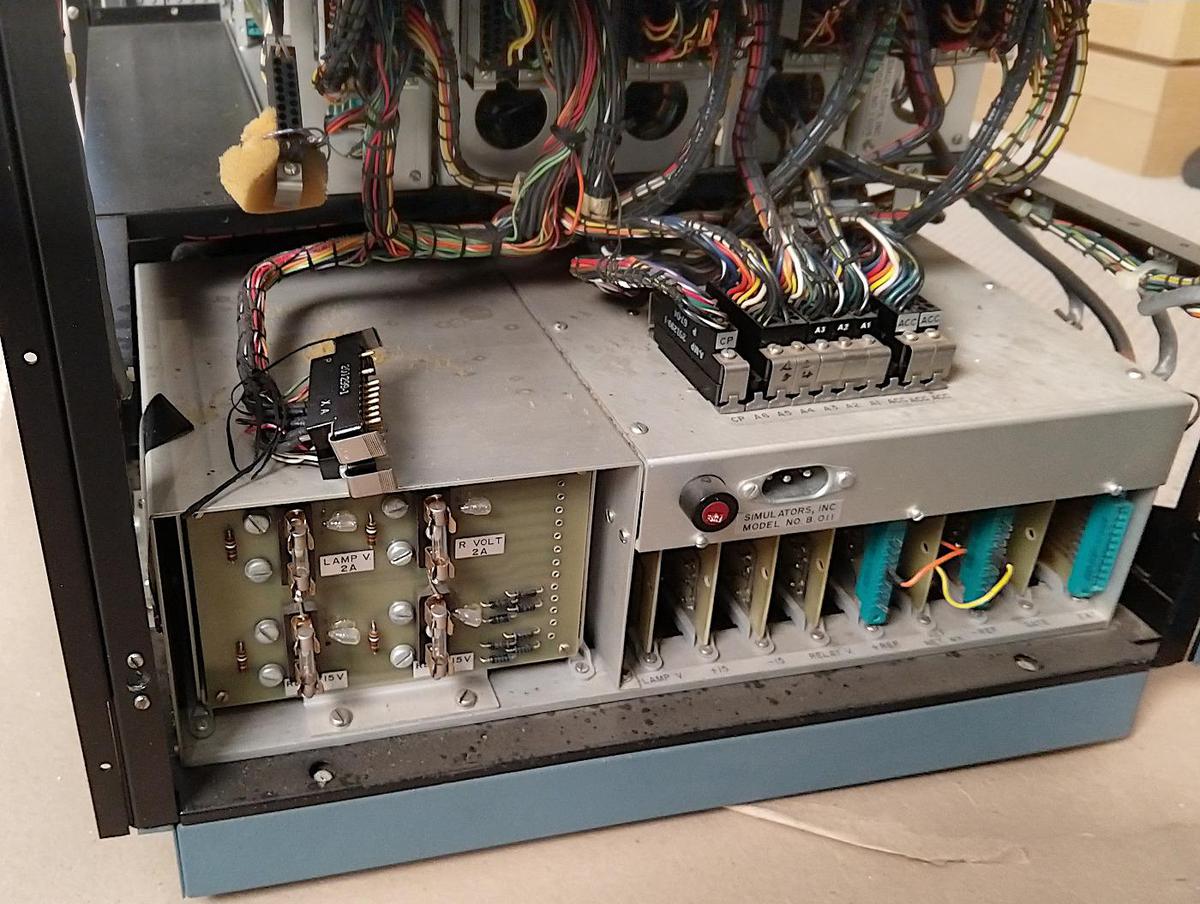

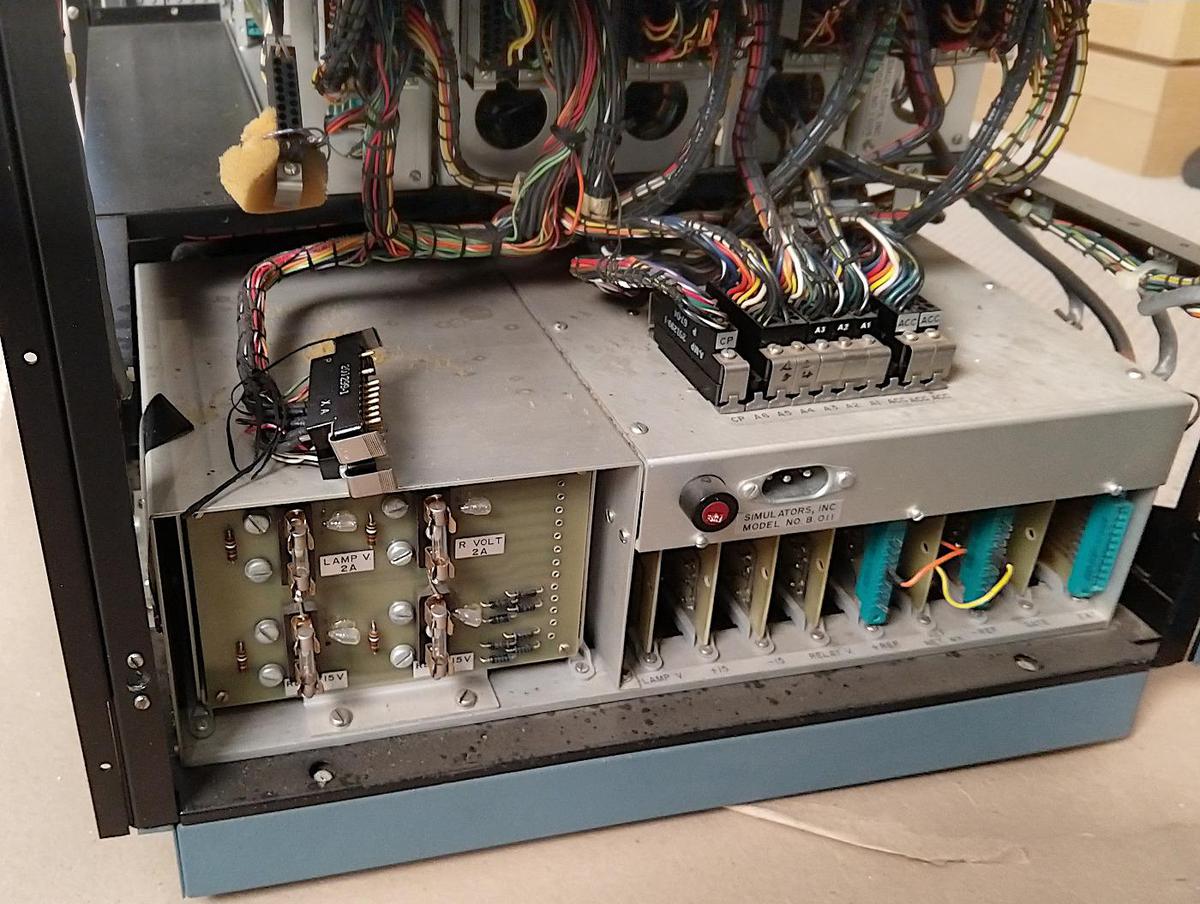

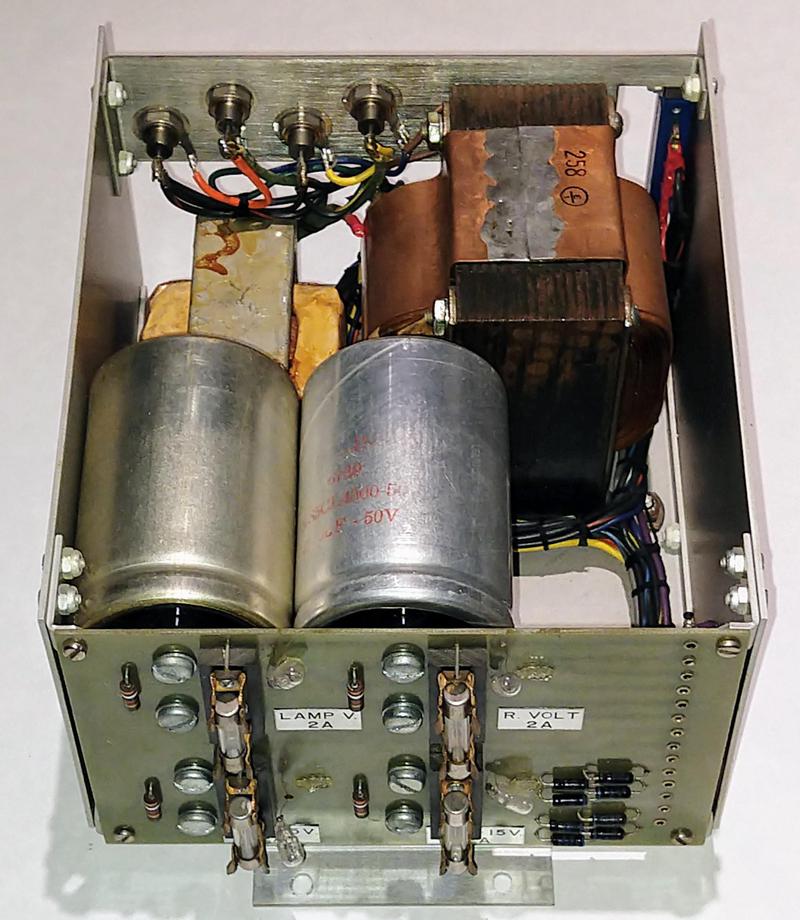

The photo above shows the power supply in the lower back section of the analog computer. The power supply is more complex than I expected. The section on the left converts line-voltage AC into low-voltage AC and DC. These outputs go to the card cage on the right, which has 8 circuit boards that regulate the voltages. The complex wiring harnesses on top of the power supply provide power to the five analog computation modules above the power supply as well as the rest of the computer.

With a vintage computer, it's important to make sure the power supply is working properly, since if it is generating the wrong voltages, the results could be catastrophic. So we proceed methodically, first checking the components in the power supply, then testing the power supply outputs while disconnected from the rest of the computer, and finally powering up the whole computer.

One concern with old power supplies is that the electrolytic capacitors can dry out and fail over time. (These capacitors are the large cylinders above.) We measured the capacitance and resistance of the large capacitors (using Marc's vintage HP LCR meter) and they tested okay. We also checked the input resistance of the power supply to make sure there weren't any obvious shorts; everything seemed fine.

We removed all the cards from the card cage, cautiously plugged in the power supply, and... nothing at all happened. For some reason, no AC voltage was getting to the power supply. The fuse was an obvious suspect, but it was fine. Carl asked about the power switch on the control panel, and we figured out that the switch was connected to the power supply via the socket labeled "CP" (below). We added a jumper, powered up the supply, and this time found the expected DC voltages from the module.

We hooked up the regulator cards using a bench power supply as input to make sure they were working properly. We tweaked the potentiometer on the +15 V regulator to get exactly 15 V output. The -15 V regulator seemed temperamental and the voltage jumped around when we adjusted it. I suspected a dirty potentiometer, but it settled down to a stable output (narrator: this is foreshadowing). We don't know what the lamp and relay voltages are supposed to be, and they're not critical, so we left those boards unadjusted.

The photo above shows one of the regulator cards; you might think it has a lot of components just to regulate a voltage. The first voltage regulator chip was created only in 1966, a few years before this computer was built, so this computer uses a linear regulator built from individual components instead. The large metal transistor on the heat sink is the heart of the voltage regulator; it acts kind of like a variable resistor to control the output. The rest of the components provide the control signal to this transistor to produce the desired output. A Zener diode (yellow and green stripes on the right) acts as the voltage reference, and the output is compared to this reference. A smaller transistor generates the control signal for the power transistor. In the lower right, a multi-turn potentiometer is used to adjust the voltage output. The larger capacitors (metal cylinders) filter the voltage, while the smaller capacitors ensure stability. Most power supplies from just a few years later would replace all of these components (except the filter capacitors) with a voltage regulator IC.
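To illustrate the feedback idea, here's a toy discrete-time model in Python. It's a sketch under loud assumptions: it treats the pass transistor as a variable resistance, assumes the multi-turn potentiometer sets a divider ratio on the output that is compared against the Zener, and uses made-up values for the Zener voltage, input voltage, loads, and loop gain. It is not this card's actual circuit.

```python
# Toy model of a series-pass linear regulator. Assumptions (not from the
# schematic): pass transistor = variable resistance, potentiometer = divider
# ratio on the output; all numeric values below are hypothetical.

def regulate(v_in, r_load, v_zener, divider_ratio, r_pass=50.0, gain=5.0, steps=200):
    """Repeatedly compare the divided-down output with the Zener reference and
    nudge the pass resistance to shrink the error; return the settled output."""
    for _ in range(steps):
        v_out = v_in * r_load / (r_pass + r_load)
        error = v_out * divider_ratio - v_zener  # positive: output too high
        r_pass = max(0.0, r_pass + gain * error)  # too high: drop more across pass element
    return v_in * r_load / (r_pass + r_load)


V_ZENER = 6.2               # hypothetical reference voltage
RATIO = V_ZENER / 15.0      # divider setting that targets a 15 V output
for load in (100.0, 50.0):  # the output should hold near 15 V as the load changes
    print(load, round(regulate(22.0, load, V_ZENER, RATIO), 3))
```

With these values the output settles to 15 V for both loads. The point of the design is that the output depends on the reference and the divider ratio rather than on the input voltage or the load, as long as the input stays high enough for the pass transistor to have some voltage to drop.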

The circuitry of this card is somewhat bizarre, and not what I was expecting on an oscillator card. The left side has three large capacitors and three diodes, powered by low-voltage AC from the transformer. After puzzling over this for a bit, I determined it was a full-wave voltage doubler, producing DC at roughly twice the peak voltage of the AC input. I assume that the chopper pulses needed to be higher voltage than the computer's +15 volt supply, so they used this voltage doubler to get enough voltage swing.
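As a rough worked example, an unloaded full-wave doubler charges each of its two capacitors to the AC peak minus one diode drop, and their voltages add. Assuming a hypothetical 12 V rms winding and 0.7 V silicon diodes (the actual transformer voltage isn't known):

```latex
V_{\mathrm{DC}} \approx 2\left(\sqrt{2}\,V_{\mathrm{rms}} - V_D\right)
  = 2\left(\sqrt{2}\times 12\,\mathrm{V} - 0.7\,\mathrm{V}\right)
  \approx 32.5\,\mathrm{V}
```

Under load, ripple pulls the average output below this figure.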

The oscillator itself (right side of the card) uses one NPN transistor as an oscillator and another NPN transistor as a buffer. It took me a while to figure out how a single-transistor oscillator works. It turns out to be a phase-shift oscillator: the three white capacitors in the middle of the board (along with resistors) shift the signal by 180°. Since the transistor amplifier also inverts the signal, the total shift around the loop is 360°, so the feedback reinforces itself and the circuit oscillates.
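The phase-shift condition can be checked numerically. This Python sketch assumes the classic three-section ladder of series capacitors and shunt resistors with equal values and no loading, which may not match this card's exact topology; R and C are hypothetical. For that ladder, theory predicts a 180° shift at f = 1/(2πRC√6) with the signal attenuated to 1/29, so the transistor must provide a gain of at least 29 to sustain oscillation.

```python
import math

# Numerical check of a 3-section RC phase-shift ladder (series C, shunt R),
# assuming equal component values and an unloaded output.

def ladder_gain(freq, r, c):
    """Complex Vout/Vin of the ladder, found by working backward from Vout = 1 V."""
    zc = 1 / (1j * 2 * math.pi * freq * c)  # capacitor impedance
    v = 1.0 + 0j        # voltage at the output node
    i = v / r           # current into the last shunt resistor (no load)
    for _ in range(2):  # walk back through the remaining two sections
        v = v + i * zc      # add the drop across that section's series capacitor
        i = i + v / r       # add the current through the next shunt resistor
    v_in = v + i * zc   # drop across the first series capacitor
    return 1 / v_in


R, C = 10e3, 10e-9                             # hypothetical 10 kΩ and 10 nF
f0 = 1 / (2 * math.pi * R * C * math.sqrt(6))  # predicted frequency, about 650 Hz
h = ladder_gain(f0, R, C)
print(f"f0 = {f0:.1f} Hz, Vout/Vin = {h.real:.5f}, -1/29 = {-1 / 29:.5f}")
```

At the predicted frequency, `h` comes out as -1/29 (up to rounding): a 180° shift with an attenuation of 29.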

Somewhat surprisingly, the op amp cards used in the power supply are exactly the same as the precision op amps used in the analog computer itself. Back in 1969, op amp integrated circuits weren't accurate enough for the analog computer, so the designers of this analog computer combined an op amp chip with a chopper circuit and many other parts to create a high-performance op amp card. I described the op amp cards in detail in the first post, so I won't go into more detail here.

One of the problems with an analog computer is that the results are only as accurate as the components. In other words, if the 10 volt reference is off by 1%, your answers will be off by 1%. The result is that analog computers need expensive, high-precision resistors. (In contrast, the voltages in a digital computer can drift a lot, as long as a 0 and a 1 can be distinguished. This is one reason why digital computers replaced analog computers.) Typical resistors have a tolerance of 20%, which means the resistance can be up to 20% different from the indicated value. More expensive resistors have tolerance of 10%, 5%, or even 1%. But the resistors on this board have tolerance of 0.01%! (These resistors are the pink cylinders.) The two large resistors on the left are 15Ω "Brown Devil" power resistors. They protect the voltage outputs in case someone plugs the wrong wire into the patch panel and shorts an output, which would be easy to do.
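To see how tolerance turns into computation error, consider a coefficient set by the ratio of two resistors, as in an inverting op-amp stage with gain -Rf/Rin. That's a simplifying assumption for illustration, and the function name is hypothetical. The worst case is both resistors off in opposite directions:

```python
# Worst-case spread of a resistor ratio Rf/Rin, assuming each resistor can be
# off by up to ±tolerance. Function name is hypothetical.

def ratio_range(nominal_ratio, tolerance):
    """Return the (min, max) ratio Rf/Rin for resistors within ±tolerance."""
    low = nominal_ratio * (1 - tolerance) / (1 + tolerance)   # Rf low, Rin high
    high = nominal_ratio * (1 + tolerance) / (1 - tolerance)  # Rf high, Rin low
    return low, high


for tol in (0.20, 0.01, 0.0001):  # 20%, 1%, and 0.01% resistors
    low, high = ratio_range(1.0, tol)
    print(f"±{tol:.2%}: gain between {low:.5f} and {high:.5f}")
```

A unity coefficient built from 20% resistors could land anywhere from about 0.67 to 1.5, while 0.01% resistors keep it within about ±0.02%.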

The network card receives an adjustment voltage from the control panel, and also has multi-turn potentiometers on the right for adjustment (like the regulator cards). The green connectors are used to connect the network card to the op amp cards. (The op amps have a separate connector for the input, to reduce electrical noise.)

I removed the working +15 regulator and failing -15 regulator from the card cage and tested them on the bench. Conveniently, both boards are identical, so I could easily compare signals on the two boards. (Modern circuits typically use special regulators for negative voltage outputs, but this power supply used the same regulator for both.) The output transistor on the bad board wasn't getting any control signal on its base, so it wasn't producing any output. Tracing the signals back, I found the transistor generating this signal wasn't getting any voltage. This transistor was powered directly from the connector, so why wasn't any voltage getting to the transistor?

I studied the printed circuit board and noticed that there wasn't a PCB trace between the transistor and the connector! Instead, part of the current path was through the heat sink. The heat sink was screwed down to the PCB, making a connection between the two red arrows above. After I tightened all the screws, the board worked fine.

We put the boards back in, powered up the chassis, and this time the voltages all seemed to be correct. The op amp overload warning lights remained off; the warning light went on before because the op amps couldn't operate with one voltage missing. The next step is to power up the analog circuitry modules and test them. We also need to repair the separate 5-volt power supply used by the digital logic since we found some bad capacitors that will need to be replaced. So those are tasks for the next sessions.

Follow me on Twitter @kenshirriff to stay informed of future articles. I also have an RSS feed.

The Model 240 analog computer from Simulators Inc. was a "precision general purpose analog computer" for the desk top, with up to 24 op amps. (This one has 20 op amps.)

Analog computers used to be popular for fast scientific computation, especially differential equations, but pretty much died out in the 1970s as digital computers became more powerful. They were typically programmed by plugging cables into a patch panel, yielding a spaghetti-like tangle of wires. In the photo above, the colorful patch panel is in the middle. Above the patch panel, 18 potentiometers set voltage levels to input different parameters. A smaller patch panel for the digital logic is in the upper right.

The power supply

The computer uses two reference voltages: +10 V and -10 V, which the power supply must generate with high accuracy. (Older, tube-based analog computers typically used +/- 100 V references.) The power supply also provides regulated +/- 15 V to power the op amps, power for the various relays in the computer, and power for the lamps.

The power supply in the bottom section of the analog computer. The transformer/rectifier section is on the left and the regulator card cage is on the right. Wiring harnesses on top of the power supply connect it to the rest of the computer.

The photo above shows the power supply in the lower back section of the analog computer. The power supply is more complex than I expected. The section on the left converts line-voltage AC into low-voltage AC and DC. These outputs go to the card cage on the right, which has 8 circuit boards that regulate the voltages. The complex wiring harnesses on top of the power supply provide power to the five analog computation modules above the power supply as well as the rest of the computer.

With a vintage computer, it's important to make sure the power supply is working properly, since if it is generating the wrong voltages, the results could be catastrophic. So we proceed methodically, first checking the components in the power supply, then testing the power supply outputs while disconnected from the rest of the computer, and finally powering up the whole computer.

The transformer / rectifier section

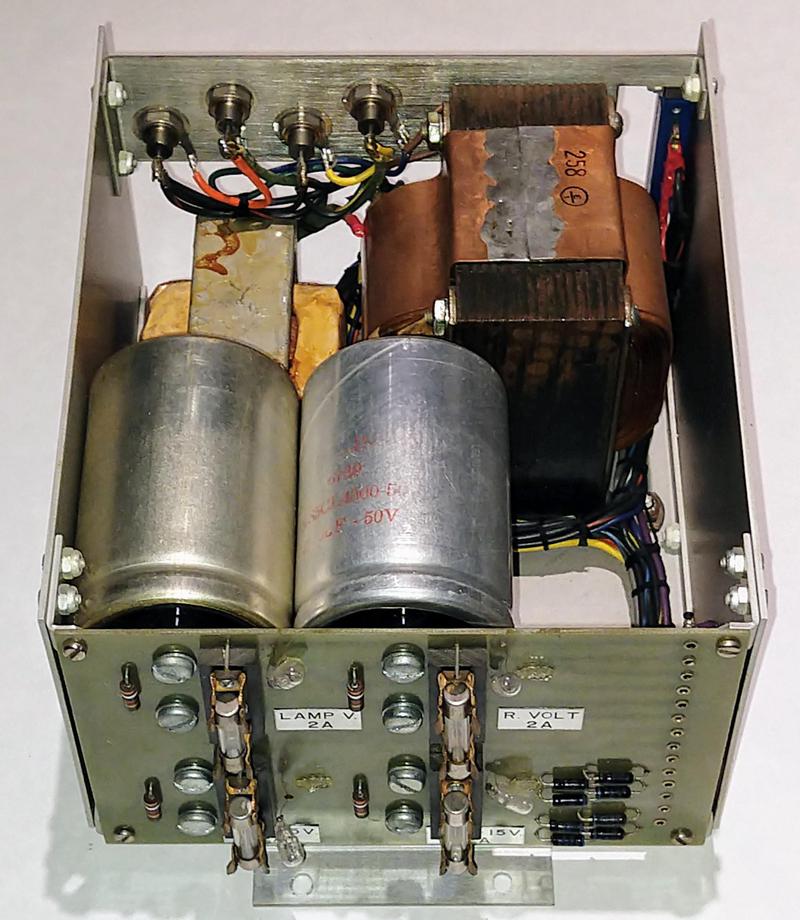

We started by removing the power supply from the computer and disconnecting the two halves. The left half of the power supply (below) produces four unregulated DC outputs and a low-voltage AC output. It contains two large power transformers, four large filter capacitors, stud rectifiers (upper back), smaller diodes (front right), and fuses. This is a large and very heavy module because of the transformers.2 The smaller transformer powers the lamps and relays, while the larger transformer powers the +15 and -15 volt supplies as well as the oscillator. Presumably, using separate transformers prevents noise and fluctuations from the lamps and relays from affecting the precision reference supplies.

This section of the power supply reduces the line-voltage AC to low-voltage DC and AC.

One concern with old power supplies is that the electrolytic capacitors can dry out and fail over time. (These capacitors are the large cylinders above.) We measured the capacitance and resistance of the large capacitors (using Marc's vintage HP LCR meter) and they tested okay. We also checked the input resistance of the power supply to make sure there weren't any obvious shorts; everything seemed fine.

We removed all the cards from the card cage, cautiously plugged in the power supply, and... nothing at all happened. For some reason, no AC voltage was getting to the power supply. The fuse was an obvious suspect, but it was fine. Carl asked about the power switch on the control panel, and we figured out that the switch was connected to the power supply via the socket labeled "CP" (below). We added a jumper, powered up the supply, and this time found the expected DC voltages from the module.

The side of the power supply has three twist-lock AC sockets labeled "FAN", "DVM-LOGIC", and "CP" (control panel). The "DVM-LOGIC" socket powers a 5-volt supply for the digital logic, which we still need to repair.

The regulator cards

Next, we tested the power supply's various cards individually. The power supply has four regulator cards generating "lamp voltage", "+15", "-15", and "relay voltage". The purpose of a regulator card is to take an unregulated DC voltage from the transformer module and reduce it to the desired output voltage.

We hooked up the regulator cards using a bench power supply as input to make sure they were working properly. We tweaked the potentiometer on the +15 V regulator to get exactly 15 V output. The -15 V regulator seemed temperamental and the voltage jumped around when we adjusted it. I suspected a dirty potentiometer, but it settled down to a stable output (narrator: this is foreshadowing). We don't know what the lamp and relay voltages are supposed to be, and they're not critical, so we left those boards unadjusted.

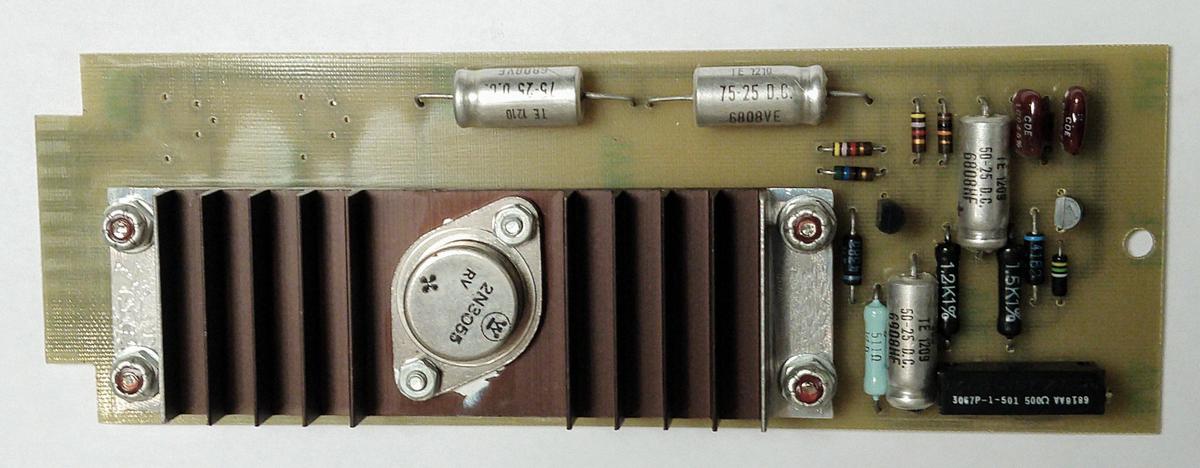

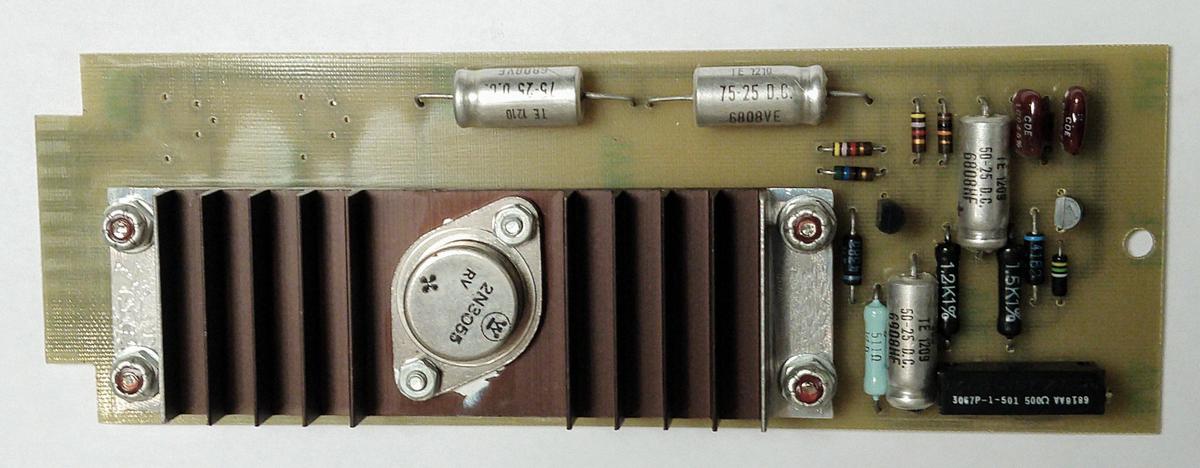

One of the voltage regulator cards. A large power transistor is attached to the heat sink.

The photo above shows one of the regulator cards; you might think it has a lot of components just to regulate a voltage. Voltage regulator chips were brand new at the time (the first one was created in 1966), so this computer uses a linear regulator built from individual components instead. The large metal transistor on the heat sink is the heart of the voltage regulator; it acts somewhat like a variable resistor to control the output. The rest of the components provide the control signal to this transistor to produce the desired output. A Zener diode (yellow and green stripes on the right) acts as the voltage reference, and the output is compared to this reference. A smaller transistor generates the control signal for the power transistor. In the lower right, a multi-turn potentiometer is used to adjust the voltage output. The larger capacitors (metal cylinders) filter the voltage, while the smaller capacitors ensure stability. Most power supplies from just a few years later would replace all of these components (except the filter capacitors) with a voltage regulator IC.
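To make the "variable resistor" description concrete, here is a minimal sketch of the arithmetic for a series-pass linear regulator. The input voltage, output voltage, and load current are hypothetical values chosen for illustration, not measurements from this power supply. The point is that the pass transistor must absorb the difference between input and output, so it turns that voltage difference times the load current into heat, which is why it is mounted on a heat sink.

```python
def pass_transistor(v_in: float, v_out: float, i_load: float):
    """Model the series pass transistor of a linear regulator as a variable resistor.

    Returns the effective resistance (ohms), the power it dissipates (watts),
    and the regulator's efficiency (ignoring the small control-circuit current).
    """
    if v_in <= v_out:
        raise ValueError("a linear regulator can only drop voltage, so v_in must exceed v_out")
    if i_load <= 0:
        raise ValueError("load current must be positive")
    v_drop = v_in - v_out              # voltage the transistor must absorb
    r_effective = v_drop / i_load      # Ohm's law: the 'variable resistor' value
    p_heat = v_drop * i_load           # power dissipated in the transistor
    efficiency = v_out / v_in          # the same current flows in and out
    return r_effective, p_heat, efficiency

# Hypothetical example: 22 V unregulated input, 15 V output, 1 A load.
r, p, eff = pass_transistor(22.0, 15.0, 1.0)
print(f"effective resistance {r:.1f} ohms, {p:.1f} W of heat, {eff:.0%} efficient")
```

With these made-up numbers the transistor behaves like a 7 Ω resistor and dissipates 7 W. The control circuitry's job is to keep adjusting that effective resistance as the load and input voltage change, so the output stays at 15 V.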

The chopper oscillator

The precision op amps in the analog computer use a chopper circuit for better DC performance, and the chopper requires 400 Hertz pulses. These pulses are generated by the oscillator board in the power supply (called the gate for some reason). We powered up the board separately to test it, and found it produced 370 Hz, which seemed close enough.

The gate card provides 400 Hertz oscillations to control the op amp choppers.

The circuitry of this card is somewhat bizarre, and not what I was expecting on an oscillator card. The left side has three large capacitors and three diodes, powered by low-voltage AC from the transformer. After puzzling over this for a bit, I determined it was a full-wave voltage doubler, producing DC at twice the voltage of the AC input. I assume that the chopper pulses needed to be higher voltage than the computer's +15 volt supply, so they used this voltage doubler to get enough voltage swing.
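A quick calculation shows why a voltage doubler helps. In the textbook full-wave doubler, each of two capacitors charges to the AC peak minus one diode drop, and the two capacitors are stacked in series. This sketch assumes a hypothetical 12 V RMS winding and silicon diodes dropping about 0.7 V, and it ignores ripple under load. The text doesn't give the card's transformer voltage, and the card's three-diode, three-capacitor arrangement may differ in detail from this two-diode idealization.

```python
import math

def doubler_output(v_rms: float, diode_drop: float = 0.7) -> float:
    """Approximate no-load DC output of a full-wave voltage doubler.

    Each of the two capacitors charges to the AC peak minus one diode drop,
    and the capacitors are in series, so the output is about twice that.
    """
    v_peak = v_rms * math.sqrt(2)      # sine-wave peak from the RMS value
    return 2 * (v_peak - diode_drop)

# Hypothetical 12 V RMS winding
print(f"{doubler_output(12.0):.1f} V")
```

Even a modest winding yields roughly 32 V of DC this way, comfortably above the computer's +15 V supply.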

The oscillator itself (right side of the card) uses one NPN transistor as an oscillator and another NPN transistor as a buffer. It took me a while to figure out how a single-transistor oscillator works. It turns out to be a phase-shift oscillator: the three white capacitors in the middle of the board shift the signal by 180°, and the transistor inverts it, adding another 180°. The signal fed back to the transistor's input is therefore in phase with it, which sustains the oscillation.
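The phase-shift explanation can be checked numerically. This sketch uses the textbook idealization of a three-section RC ladder (series capacitor, shunt resistor, all sections identical, no loading). That is an assumption: the text doesn't give the card's component values, and in the real circuit the transistor's input impedance loads the last section. The code computes the ladder's transfer function with ABCD (two-port) matrices and evaluates it at f = 1/(2πRC√6), where the ideal network shifts the signal by exactly 180° and attenuates it by a factor of 29. The inverting transistor stage therefore needs a gain of at least 29 to keep the circuit oscillating.

```python
import math

def matmul(a, b):
    """Multiply two 2x2 matrices given as nested lists."""
    return [
        [a[0][0] * b[0][0] + a[0][1] * b[1][0], a[0][0] * b[0][1] + a[0][1] * b[1][1]],
        [a[1][0] * b[0][0] + a[1][1] * b[1][0], a[1][0] * b[0][1] + a[1][1] * b[1][1]],
    ]

def ladder_gain(freq_hz: float, r_ohms: float, c_farads: float, sections: int = 3) -> complex:
    """Voltage transfer Vout/Vin of an unloaded CR ladder (series C, shunt R per section)."""
    w = 2 * math.pi * freq_hz
    z_series = 1 / (1j * w * c_farads)   # capacitor impedance
    y_shunt = 1 / r_ohms                 # resistor admittance
    m = [[1, 0], [0, 1]]
    for _ in range(sections):
        m = matmul(m, [[1, z_series], [0, 1]])   # series capacitor
        m = matmul(m, [[1, 0], [y_shunt, 1]])    # shunt resistor
    return 1 / m[0][0]                   # open-circuit output: Vin = A * Vout

# Hypothetical component values, chosen only to land near 400 Hz
R, C = 10e3, 16e-9
f0 = 1 / (2 * math.pi * R * C * math.sqrt(6))
h = ladder_gain(f0, R, C)
print(f"f0 = {f0:.0f} Hz, H(f0) = {h.real:.4f} (imaginary part {h.imag:.1e})")
```

At f0 the transfer function is real and negative, about -1/29: a 180° shift with an attenuation of 29. At any other frequency the imaginary part is nonzero, so the loop phase isn't 360° and the circuit won't sustain oscillation there.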

The op amps

Calculations in the analog computer are referenced to +10 volt and -10 volt reference voltages, so these voltages need to be very accurate. The regulator cards produce fairly stable voltages, but not good enough. (While testing the regulator cards, I noticed that the output voltage shifted noticeably as I changed the input voltage.) To achieve this accuracy, the reference voltages are generated by op amp circuits, built from two op amp boards and a feedback network card.
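Op amps can beat the regulator cards because of negative feedback. With a very large open-loop gain A, the closed-loop gain A/(1 + Aβ) depends almost entirely on the feedback fraction β, which is set by resistors. The sketch below assumes a non-inverting amplifier that feeds a fraction β of its output back to the input. The open-loop gains and the 5 V input are hypothetical, and the text doesn't describe the actual topology of the reference circuit.

```python
def closed_loop_output(v_in: float, open_loop_gain: float, beta: float) -> float:
    """Output of a non-inverting feedback amplifier: Vout = Vin * A / (1 + A*beta)."""
    return v_in * open_loop_gain / (1 + open_loop_gain * beta)

BETA = 0.5   # hypothetical feedback divider: ideal gain 1/beta = 2
V_IN = 5.0   # hypothetical reference input, so the ideal output is 10 V
for a in (1e2, 1e4, 1e6):
    v = closed_loop_output(V_IN, a, BETA)
    print(f"A = {a:>9.0f}: Vout = {v:.6f} V (error {10.0 - v:.2e} V)")
```

As A grows, the output converges to V_IN / β no matter exactly what A is. That leaves the accuracy of the feedback resistors as the limiting factor.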

An op amp card. This card has a single input on the right. It uses a round metal-can op amp IC, but the chopper circuitry improves performance.

Somewhat surprisingly, the op amp cards used in the power supply are exactly the same as the precision op amps used in the analog computer itself. Back in 1969, op amp integrated circuits weren't accurate enough for the analog computer, so the designers of this analog computer combined an op amp chip with a chopper circuit and many other parts to create a high-performance op amp card. I described the op amp cards in detail in the first post, so I won't go into more detail here.

The network card

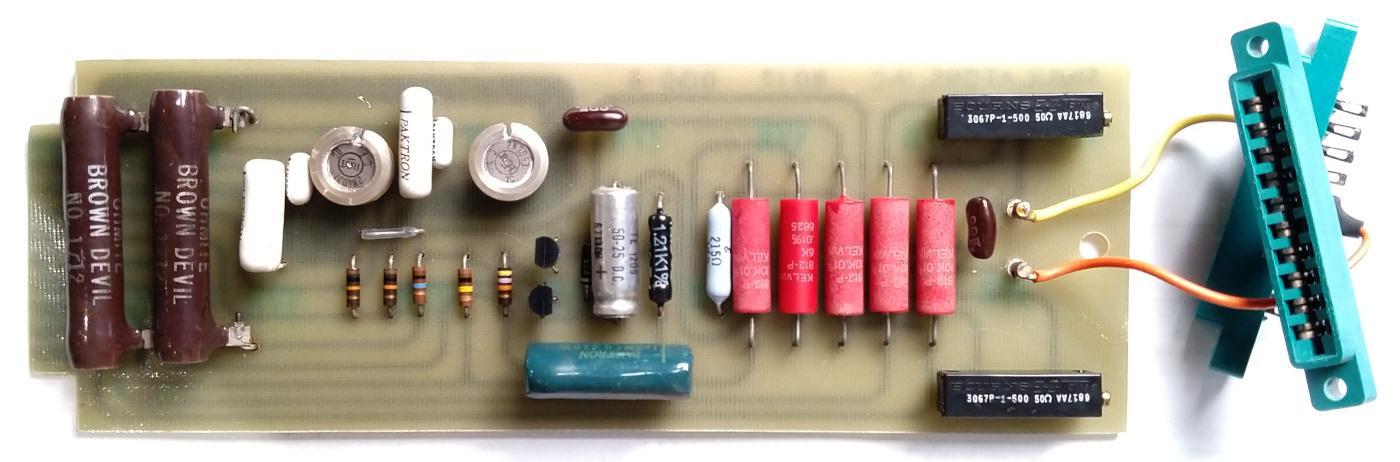

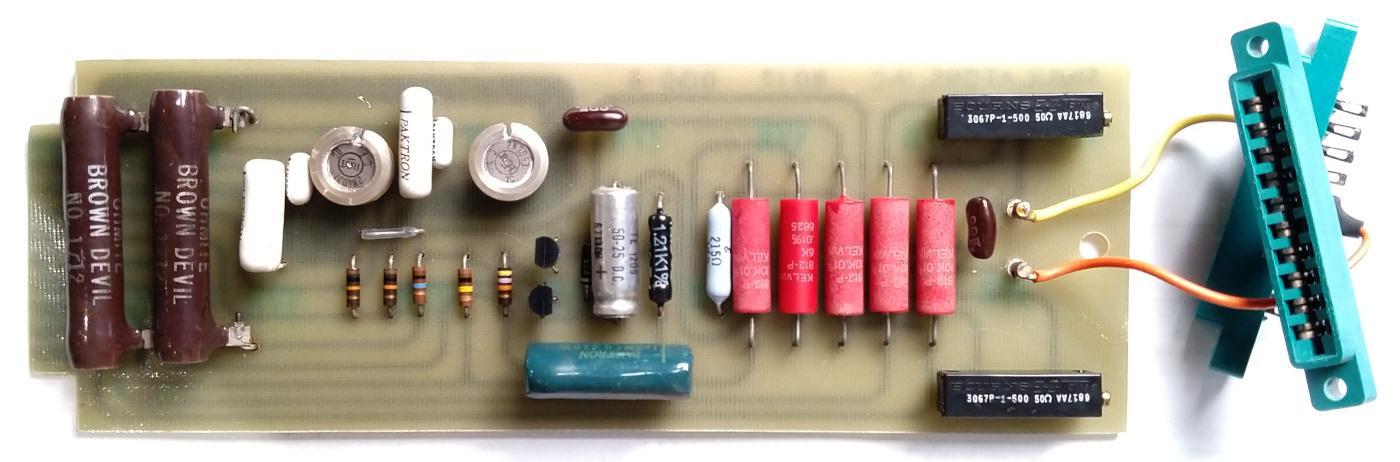

The network card has two jobs. First, it has precision resistors to create the feedback networks for the power supply op amps. Second, it has two power transistors (circular metal components below) that buffer the reference voltages from the op amp for use by the rest of the computer.

The network card. The two connectors on the left are attached to the op amp inputs.

One of the problems with an analog computer is that the results are only as accurate as the components. In other words, if the 10 volt reference is off by 1%, your answers will be off by 1%. The result is that analog computers need expensive, high-precision resistors. (In contrast, the voltages in a digital computer can drift a lot, as long as a 0 and a 1 can be distinguished. This is one reason why digital computers replaced analog computers.) Typical resistors have a tolerance of 20%, which means the resistance can be up to 20% different from the indicated value. More expensive resistors have tolerance of 10%, 5%, or even 1%. But the resistors on this board have tolerance of 0.01%! (These resistors are the pink cylinders.) The two large resistors on the left are 15Ω "Brown Devil" power resistors. They protect the voltage outputs in case someone plugs the wrong wire into the patch panel and shorts an output, which would be easy to do.

The network card receives an adjustment voltage from the control panel, and also has multi-turn potentiometers on the right for adjustment (like the regulator cards). The green connectors are used to connect the network card to the op amp cards. (The op amps have a separate connector for the input, to reduce electrical noise.)

Powering it up and fixing a problem

Finally, we put all the power supply boards back in the cabinet, put the power supply back in the computer, and powered up the chassis (but not the analog computer modules). Some of the indicator lights on the control panel lit up and the +15 V supply showed up on the meter. However, the -15 V supply wasn't giving any voltage, the op amp overload lights on the front panel were illuminated, and the reference voltages from the op amps were missing. The bad -15 V supply looked like the first thing to investigate, since without it, the op amp boards wouldn't work.

I removed the working +15 V regulator and the failing -15 V regulator from the card cage and tested them on the bench. Conveniently, both boards are identical, so I could easily compare signals on the two boards. (Modern circuits typically use special regulators for negative voltage outputs, but this power supply used the same regulator for both.) The output transistor on the bad board wasn't getting any control signal on its base, so it wasn't producing any output. Tracing the signals back, I found the transistor generating this signal wasn't getting any voltage. This transistor was powered directly from the connector, so why wasn't any voltage getting to the transistor?

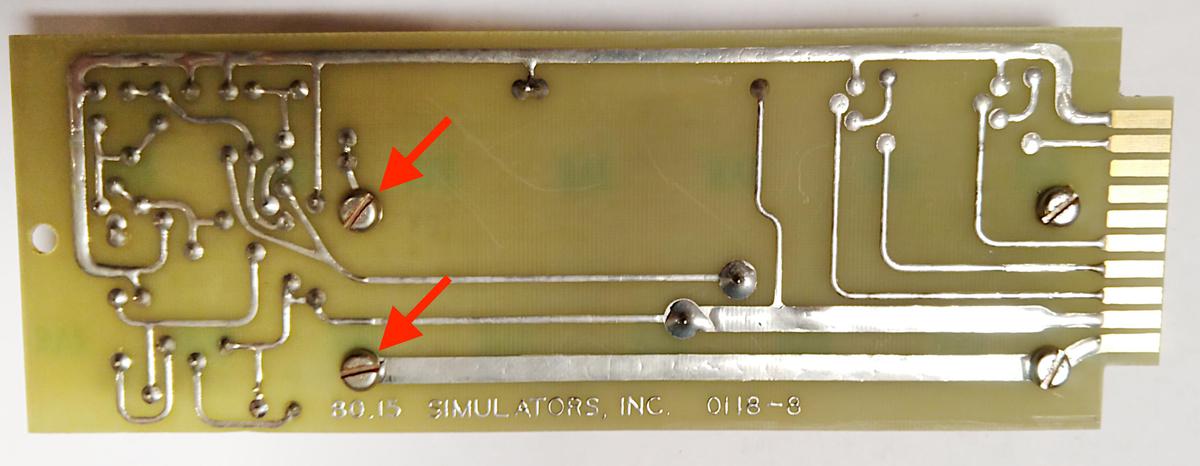

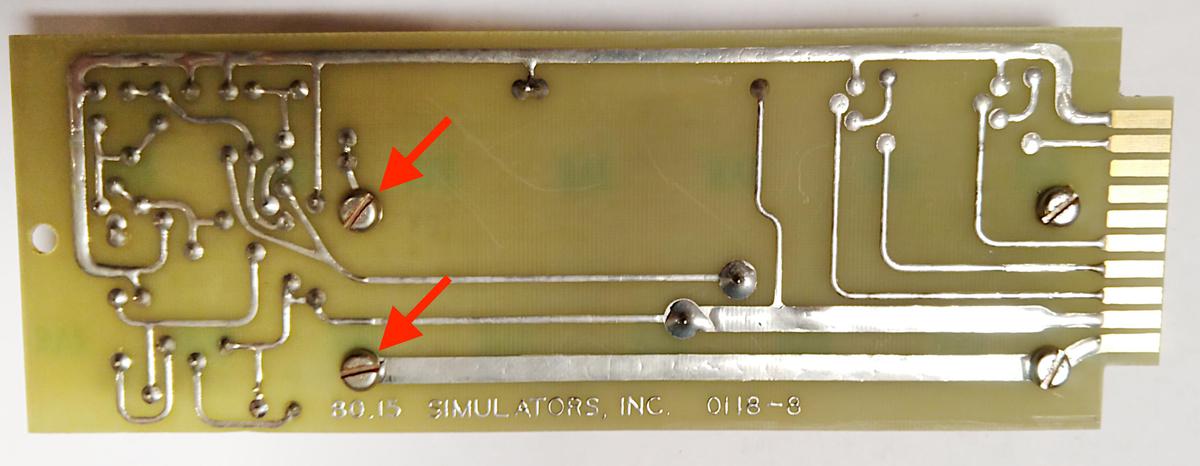

A regulator board was failing due to loose screws (red arrows). The circuit was powered through the thick bottom PCB trace and then current passed through the heat sink from the lower screw to the upper screw.

I studied the printed circuit board and noticed that there wasn't a PCB trace between the transistor and the connector! Instead, part of the current path was through the heat sink. The heat sink was screwed down to the PCB, making a connection between the two red arrows above. After I tightened all the screws, the board worked fine.

The analog computer with the plugboard and sides removed to show the internal circuitry. The power supply is in the lower back section. One module has been removed and placed in front of the computer.

We put the boards back in, powered up the chassis, and this time the voltages all seemed to be correct. The op amp overload warning lights remained off; they had lit up before because the op amps couldn't operate with one supply voltage missing. The next step is to power up the analog circuitry modules and test them. We also need to repair the separate 5-volt power supply used by the digital logic, since we found some bad capacitors that need to be replaced. Those are tasks for the next sessions.

Follow me on Twitter @kenshirriff to stay informed of future articles. I also have an RSS feed.

Notes and references

- The computer's integrated circuits have 1968 and 1969 date codes on them, so I think the computer was manufactured in 1969.

- Most modern power supplies are switching power supplies, so they are much smaller and lighter than linear power supplies like the one in the analog computer. (Your laptop charger, for instance, is a switching power supply.) Back in this era, switching power supplies were fairly exotic. However, linear power supplies are still sometimes used since they have less noise than switching power supplies.

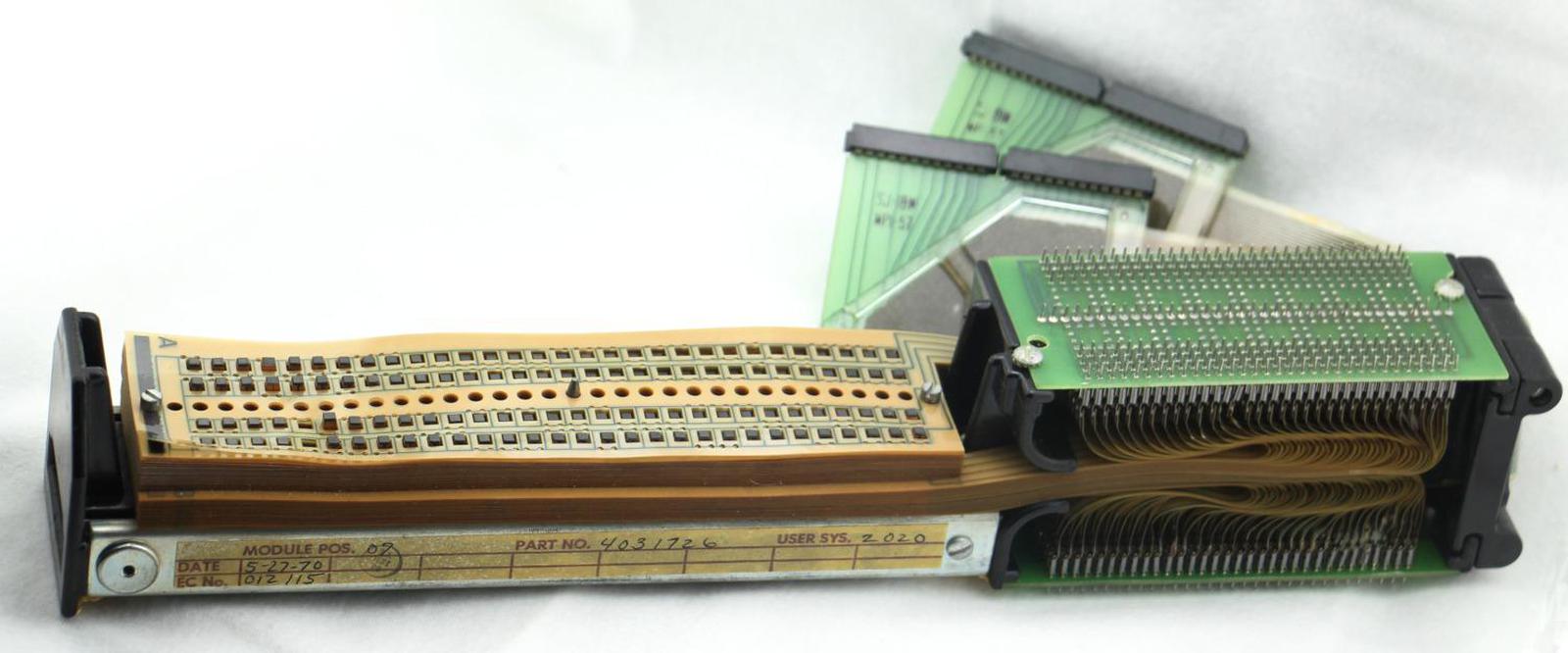

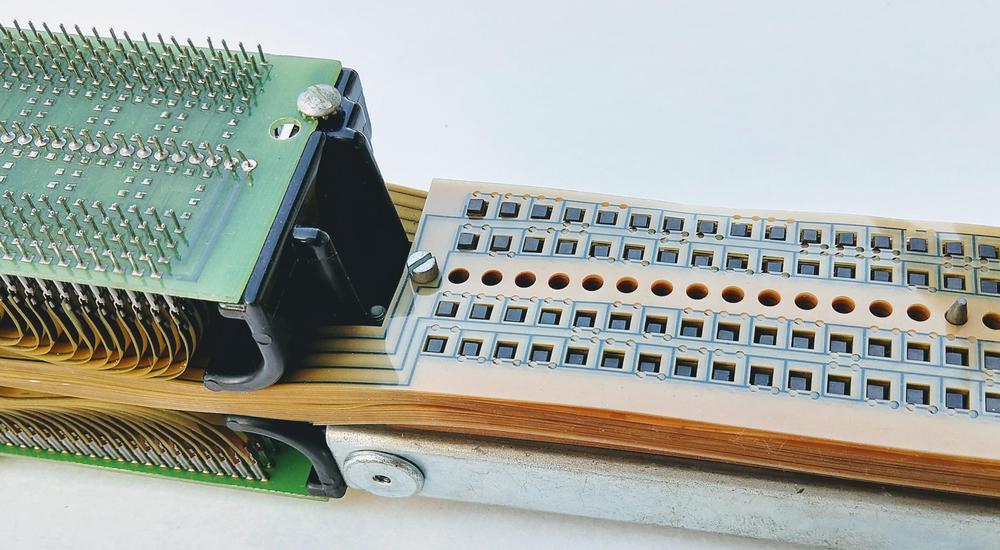

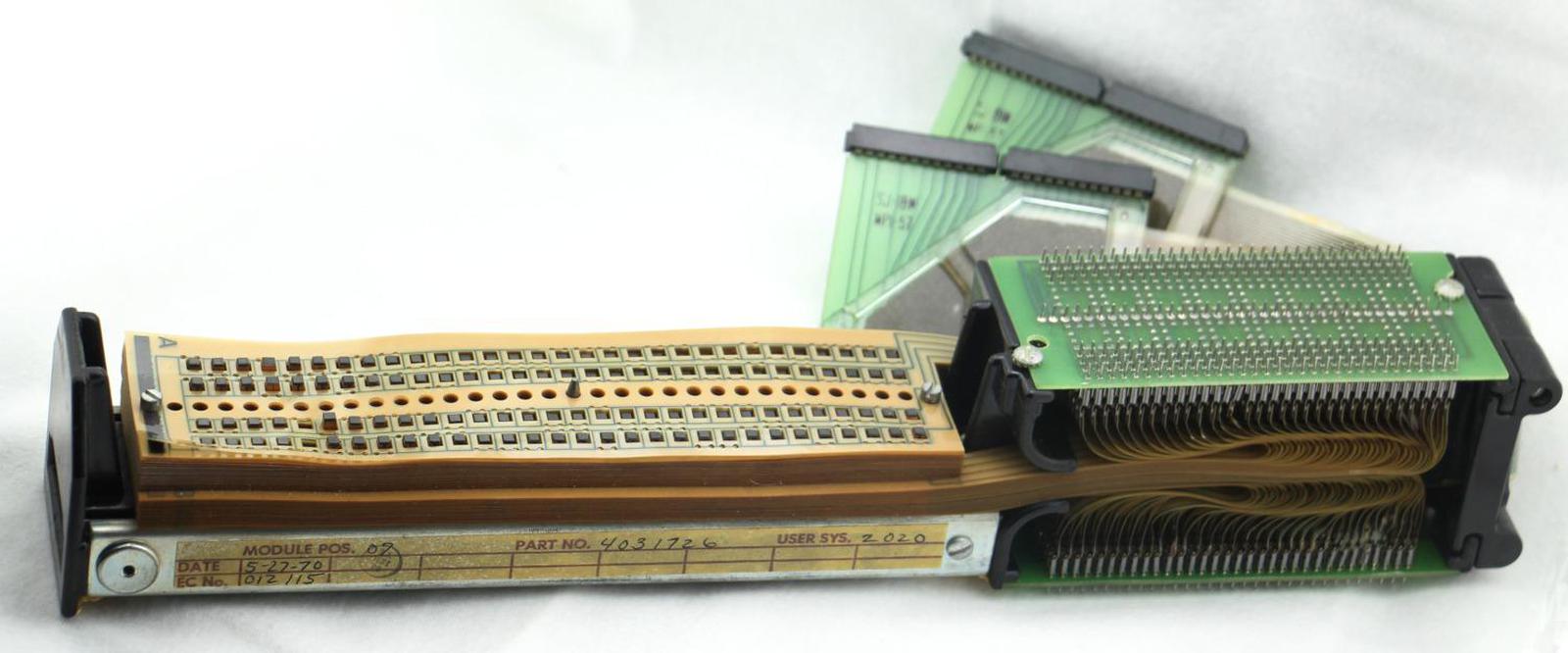

TROS: How IBM mainframes stored microcode in transformers

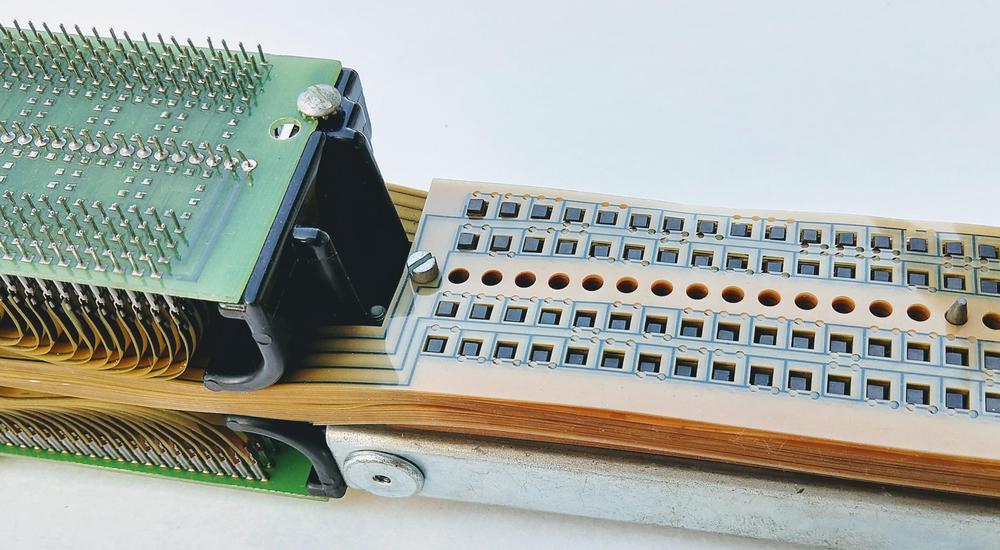

I recently came across a Transformer Read-Only Storage (TROS) module that stored microcode in an IBM System/360 mainframe computer. This unusual storage mechanism used a stack of Mylar sheets to hold 15,360 bits, equivalent to 1920 bytes. By modern standards, this is an absurdly small amount of data, but in 1964,1 semiconductor read-only memory chips weren't available, so using Mylar sheets for storage was a reasonable solution. In this blog post, I explain how the TROS module worked and its role in the success of the IBM System/360.

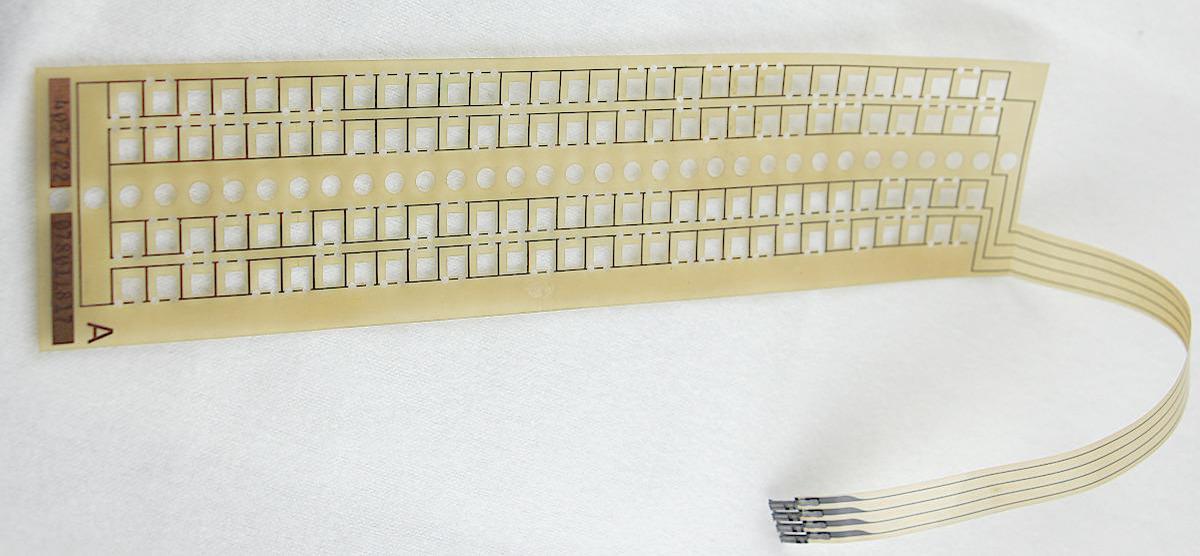

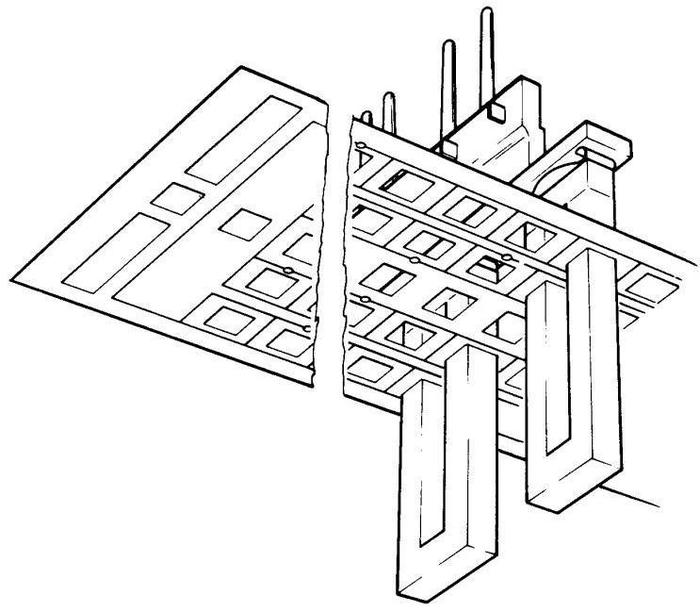

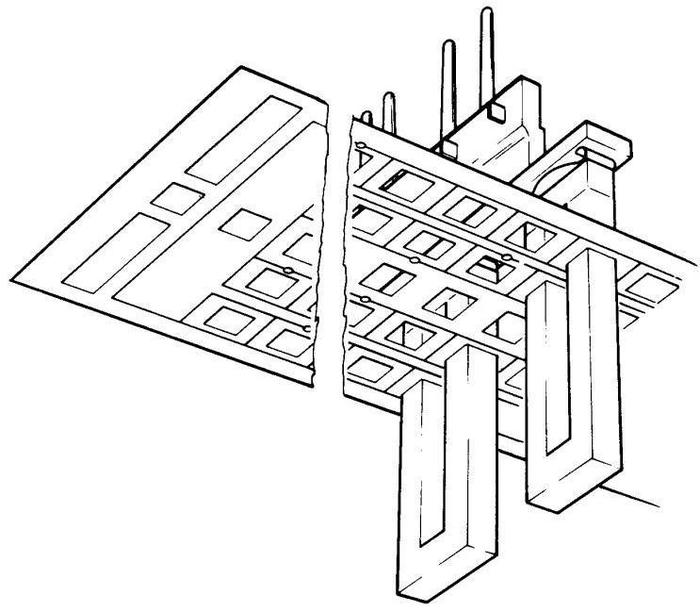

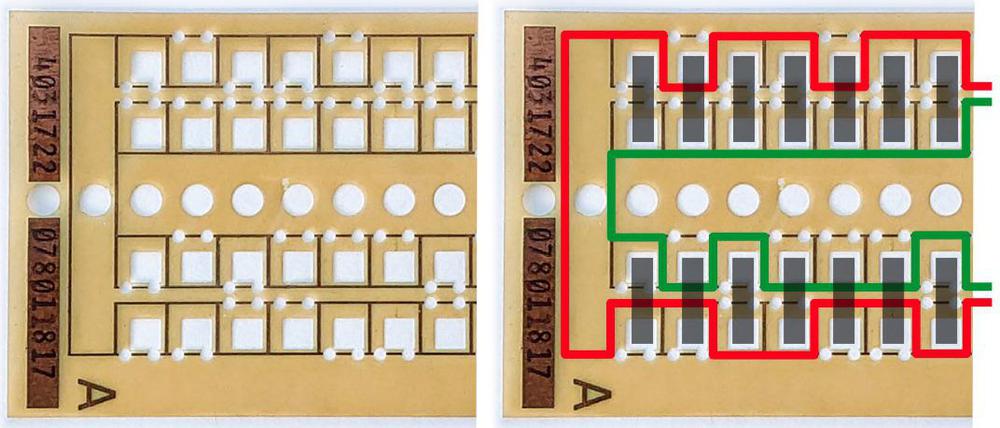

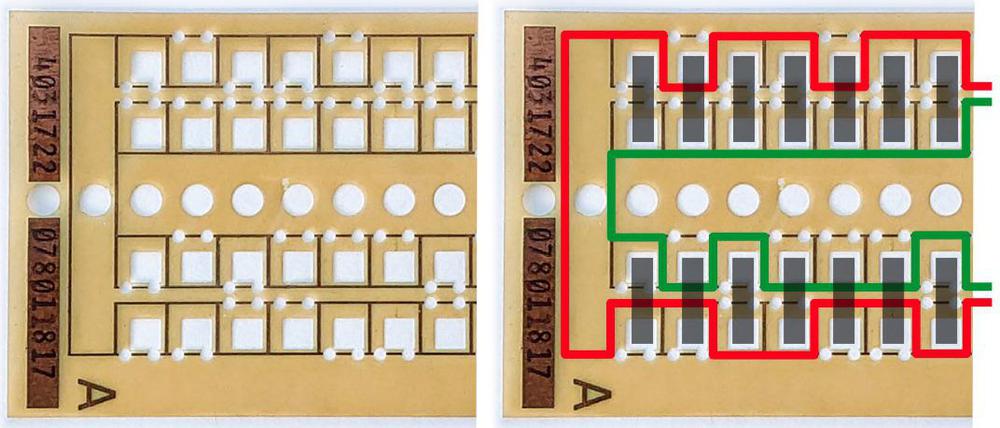

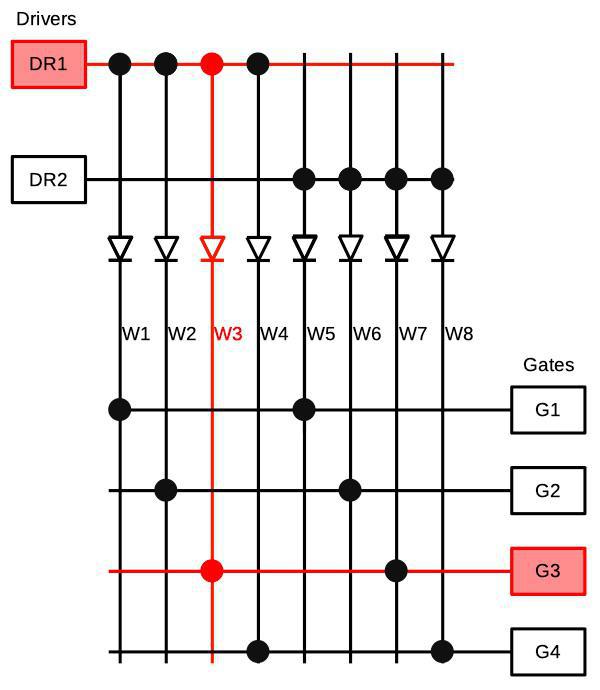

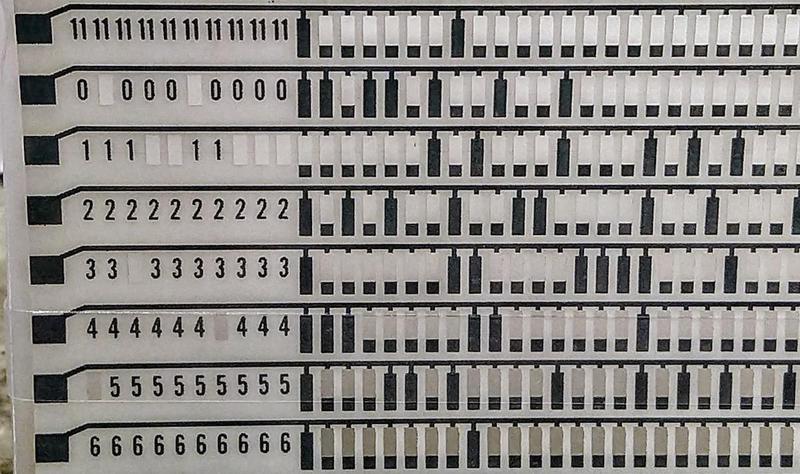

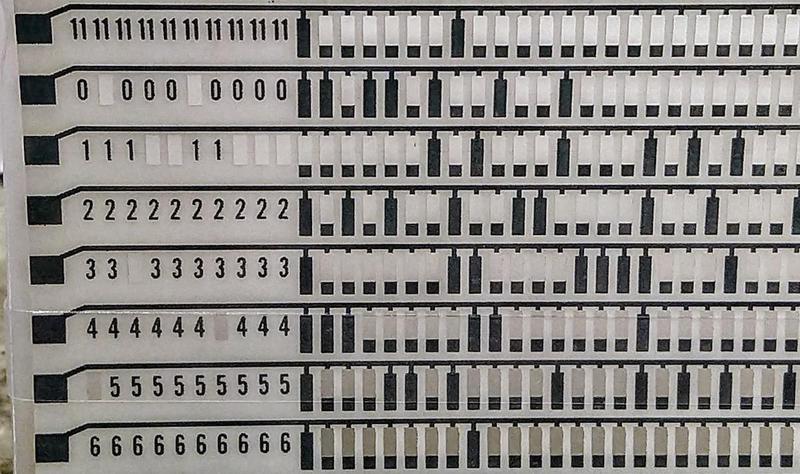

TROS and core rope are structurally the opposite, reversing the roles of word (address) lines and sense lines. TROS data depended on which word lines went through or around the transformer, while core rope data depended on which sense lines went through or around a core. To read a word in the AGC, one core was activated, while in TROS all of the transformers were (potentially) activated. Each transformer in TROS had one sense line and was associated with one output bit. In contrast, each core in the AGC's core rope had 192 sense lines and was associated with 12 words. (I've written more on core rope here).

I announce my latest blog posts on Twitter, so follow me @kenshirriff for future articles. I also have an RSS feed.

A TROS module, about 15" (39 cm) long. On the left, 60 transformers pass through the stack of 128 Mylar sheets. (Only the square ends of the transformers are visible.) The sheets are connected to the diode boards on the right. The TROS module is connected to the rest of the computer through the connector cables at the back.

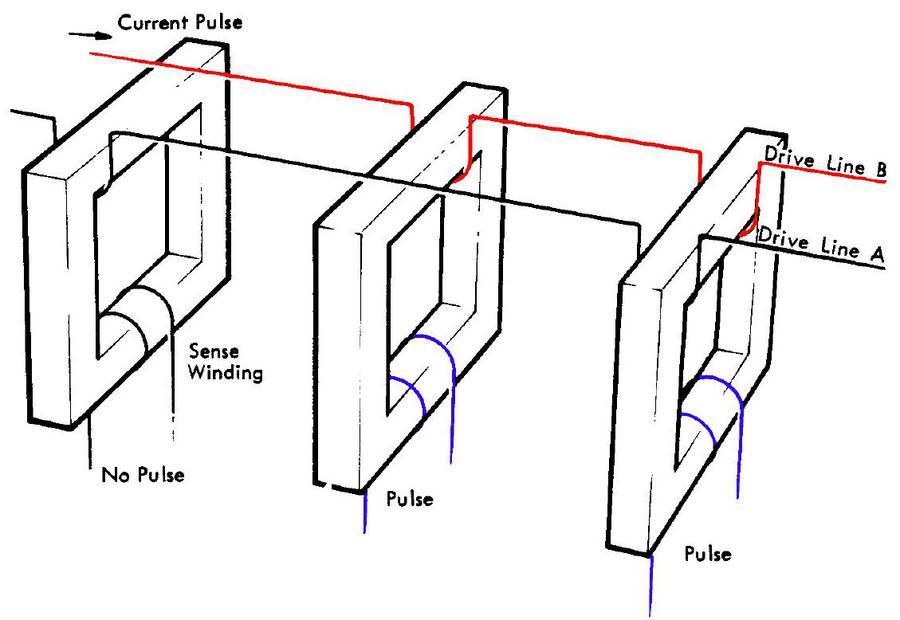

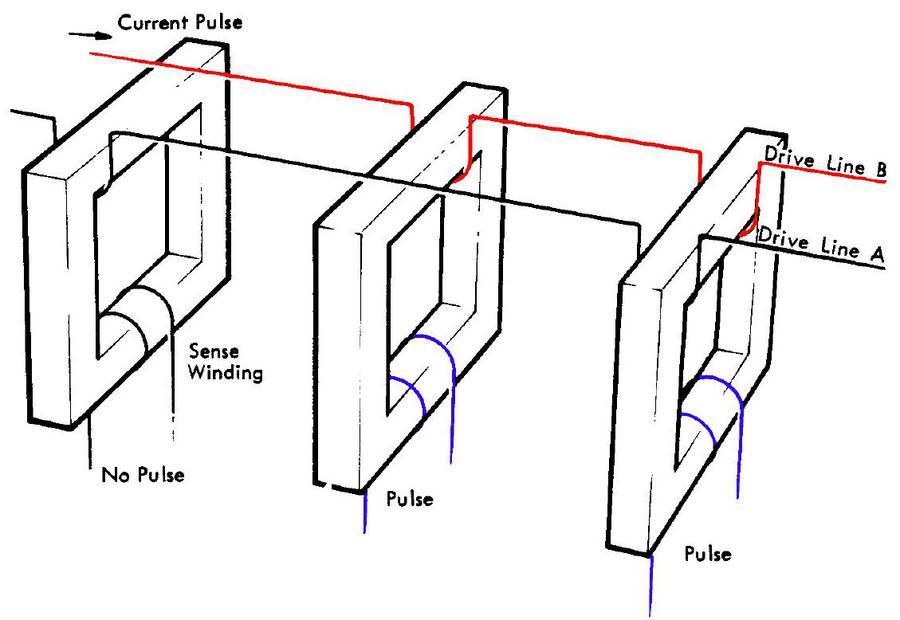

How TROS worked: transformers and current pulses

The diagram below shows the concept behind TROS, simplified to two words of three bits each. The three transformers (square rings) each have a sense winding that generates one bit of output. Each word (A or B) has a drive line that passes either through a transformer (for a 1 bit) or around a transformer (for a 0 bit). In the diagram, drive line B (red) is activated by a current pulse. It generates a pulse (blue) from the second and third transformers, generating the bits 011 for Word B. The wiring for Word A, on the other hand, generates the bits 101. Storing more words is accomplished by threading more drive lines through (or around) the transformers, one for each word. Any bit pattern can be stored, depending on how the drive line is wired.
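The through-or-around principle is simple enough to model directly. This is a toy model of the two-word, three-bit diagram only, not of IBM's circuitry. Each drive line is represented by the set of transformers it threads through, and pulsing a line produces an output only on those transformers' sense windings.

```python
# Toy model of the simplified TROS diagram: three transformers (bits), two words.
NUM_TRANSFORMERS = 3

# Each drive line is described by the transformers it passes *through*;
# it passes *around* all the others. Bit 0 is the leftmost transformer.
DRIVE_LINES = {
    "A": {0, 2},   # through transformers 0 and 2 -> 101
    "B": {1, 2},   # through transformers 1 and 2 -> 011
}

def read_word(word: str) -> str:
    """Pulse one drive line and report which sense windings see a pulse."""
    threaded = DRIVE_LINES[word]
    # A sense winding produces a pulse only if the pulsed drive line passes
    # through its transformer core; a line routed around the core induces nothing.
    return "".join("1" if t in threaded else "0" for t in range(NUM_TRANSFORMERS))

for name in DRIVE_LINES:
    print(name, read_word(name))
```

In this model, storing another word just means adding another drive line with its own through/around pattern. The Mylar tapes provide that wiring at scale.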

Simplified diagram of TROS storage. Based on Model 40 Functional Units.

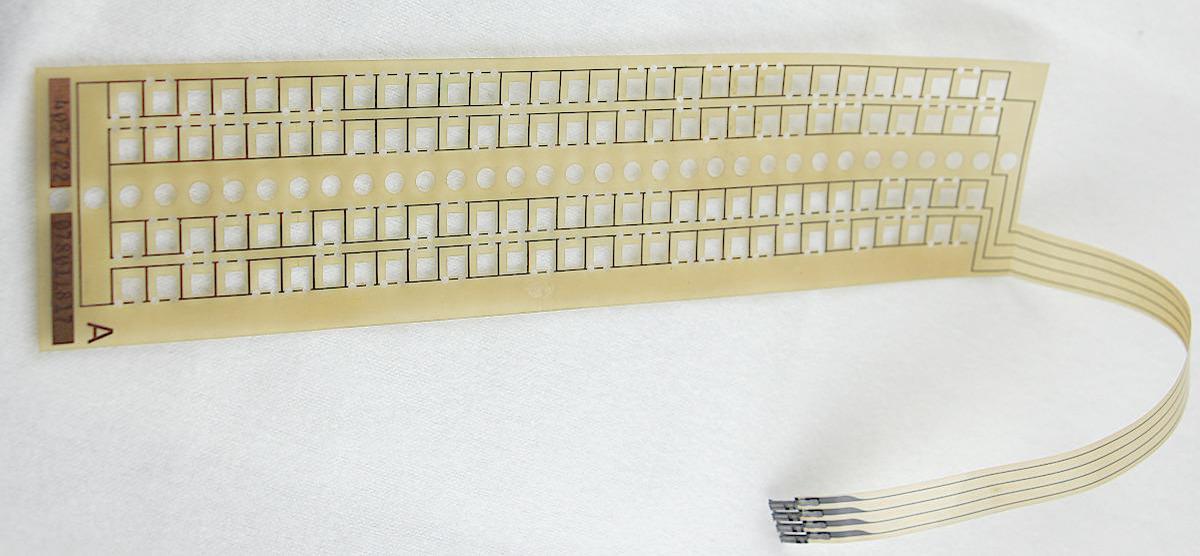

The actual TROS module has 60 transformers and 256 drive lines, so it held 256 words of 60 bits. Physically threading 256 wires through transformers would be difficult, so the TROS module used a clever technique to make the wiring easy to assemble or modify. The wiring was printed on sheets of Mylar (called tapes), essentially a flexible printed circuit board. Each tape had two loops of wiring (called word lines) that either went through or around the transformers, so 128 Mylar tapes provided the wiring for 256 words.

A Mylar tape, holding 120 bits of data. It consists of two wire loops, connected to the four pins at the bottom.

The Mylar tapes were stacked on the 60 transformers as shown below. Each of the 60 transformers consisted of a U-shape with both arms passing through the stack of 128 tapes. In this way, the Mylar tapes efficiently created the wiring through and around the transformers, rather than threading individual wires.

Structure of the transformers, viewed from underneath. Each transformer consists of a U-piece that goes through the tapes, and an I-bar that completes the transformer. From Model 40 Functional Units.

Once the stack was complete, an I-bar was placed on top of each U to close the transformer core. A sense line (the reddish wiring below) was wrapped many times around each I-bar to pick up the output signal. Each sense line was connected to a sense amplifier that detected the signal, and the 60 sense amplifiers together produced the 60-bit output. (The I-bars and sense lines are missing from the TROS module I have but are visible in the module below.)

The sense windings are wrapped around the I-bars and connected to pins. The I-bars at the bottom are removed, showing the tops of the transformer U-pieces sticking up through the Mylar tapes. This TROS module is in the Computer History Museum.

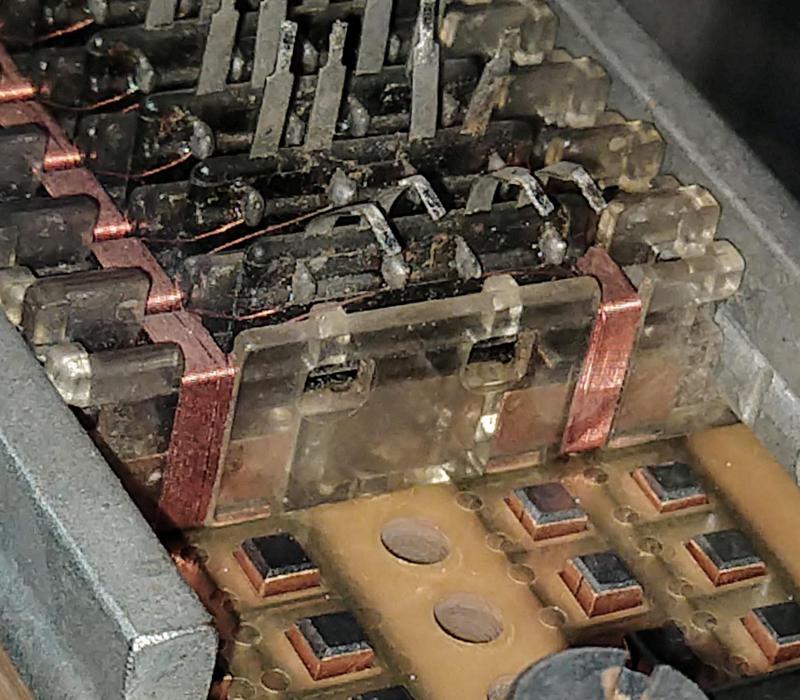

The Mylar tapes were programmed by punching holes through wires to break the undesired wiring paths. The photo below shows a closeup of one of the tapes, showing the wiring printed on the tape, the large square holes for the transformer legs, and the small round holes punched through the word line wiring. The diagram on the right illustrates the wiring path resulting from the hole pattern. Each tape has two word lines (indicated in red and green) that go either through or around each transformer (gray rectangle).

Closeup of a TROS tape. The diagram on the right illustrates how the two traces (red and green) go through or around the transformers (gray rectangles), based on the holes punched in the tape.

To read one of the 256 words, one word line (wire loop) on one particular Mylar tape received a current pulse. The straightforward implementation would use 256 pulse drivers, with one selected by the address bits, but this much hardware would be expensive. Instead, the TROS module is driven by a "matrix" approach. The 256 word lines are wired logically into a 16×16 matrix. The address is split in half, and each half is decoded to select one of 16 lines. Only the word line that is selected at both ends receives a current pulse and is activated.2
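The matrix selection can be sketched as follows. Which half of the address drives which side of the matrix, and how the word lines are numbered, are assumptions for illustration. The text only says that the address is split in half and each half is decoded to one of 16 lines. The sketch also shows the hardware saving: 16 + 16 = 32 drivers instead of 256.

```python
ROWS = COLS = 16   # 256 word lines arranged as a 16x16 matrix

def select_word_line(address: int) -> tuple[int, int]:
    """Split an 8-bit address into two 4-bit halves and decode each.

    Assumption: the high nibble picks one of 16 'row' drivers and the low
    nibble picks one of 16 'column' drivers. Only the word line wired
    between the selected row and column receives the full current pulse.
    """
    if not 0 <= address < ROWS * COLS:
        raise ValueError("address must be in range 0..255")
    row = address >> 4      # upper 4 bits
    col = address & 0xF     # lower 4 bits
    return row, col

def pulsed_lines(address: int) -> list[int]:
    """Return every word line that is driven at both of its ends."""
    row, col = select_word_line(address)
    # Hypothetical wiring: word line n connects row driver n // 16 and column driver n % 16.
    return [n for n in range(ROWS * COLS) if n // COLS == row and n % COLS == col]

print(select_word_line(0xA7))   # (10, 7)
print(pulsed_lines(0xA7))       # [167]
print("drivers needed:", ROWS + COLS, "instead of", ROWS * COLS)
```

Every address selects exactly one word line, so 32 drivers do the work of 256. This idealized model ignores the stray current paths that the real module has to block.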

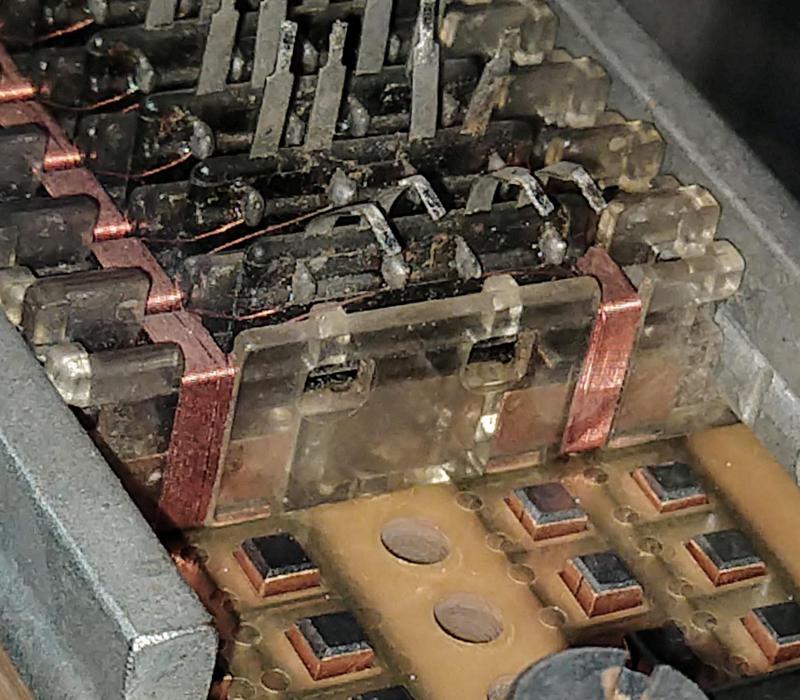

Each Mylar tape is plugged into a diode board. Note the "2020" on the left, indicating that this module is from a System/360 Model 20.

Each Mylar tape is connected to one of two diode boards, resulting in hundreds of connections (above). (These diodes prevent the matrixed signals from all shorting together.) The diodes are inside the square aluminum modules below. The IBM System/360 didn't use integrated circuits, but instead used SLT modules, hybrid modules containing tiny semiconductors and thick film resistors. The SLT modules below each contain 8 diodes.

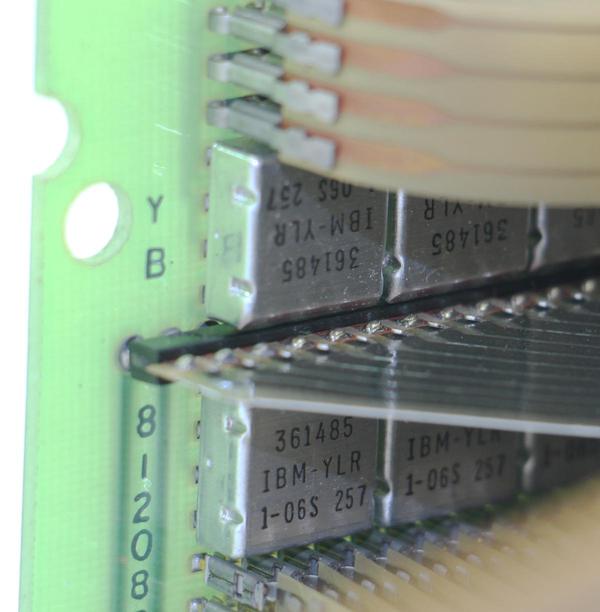

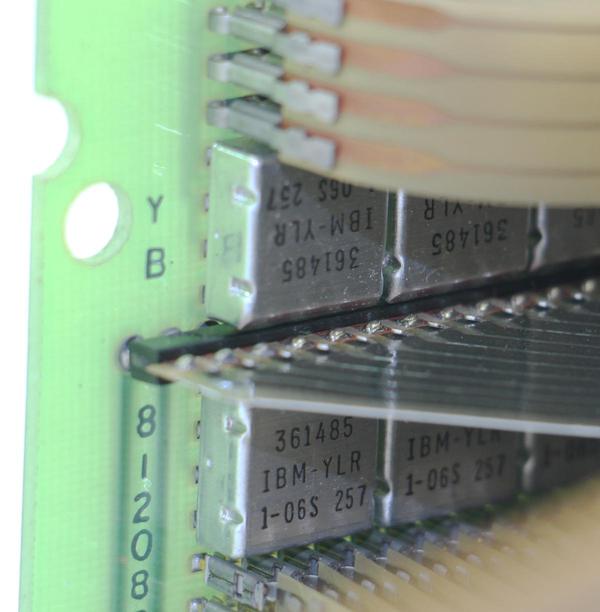

This closeup of the diode board shows the square metal SLT modules labeled 361485. Each one contains 8 diodes. The Mylar tape connections are at the top and bottom, while the "fin" in the middle is the wiring from the TROS module to the rest of the computer.

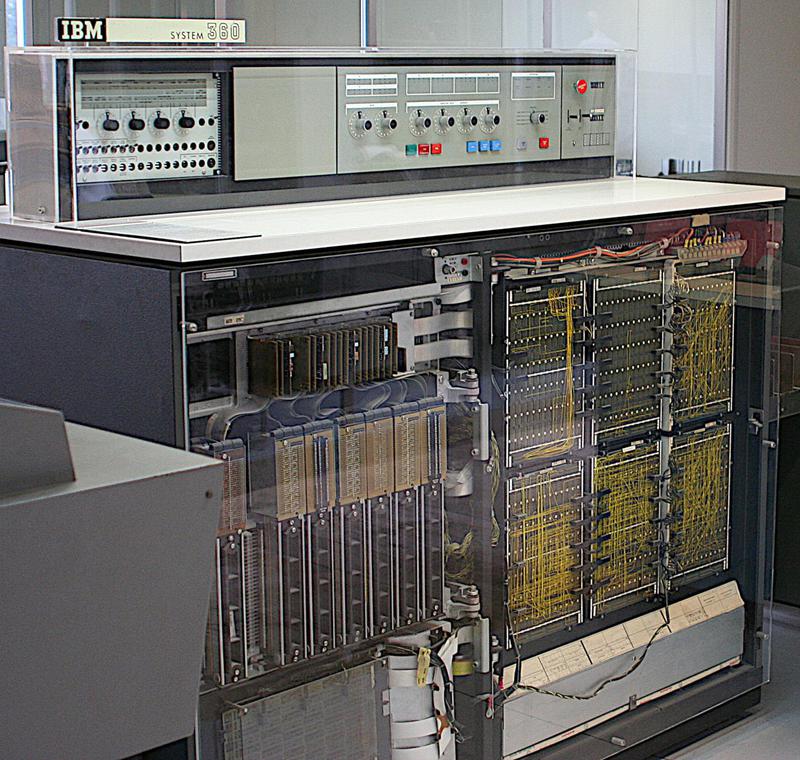

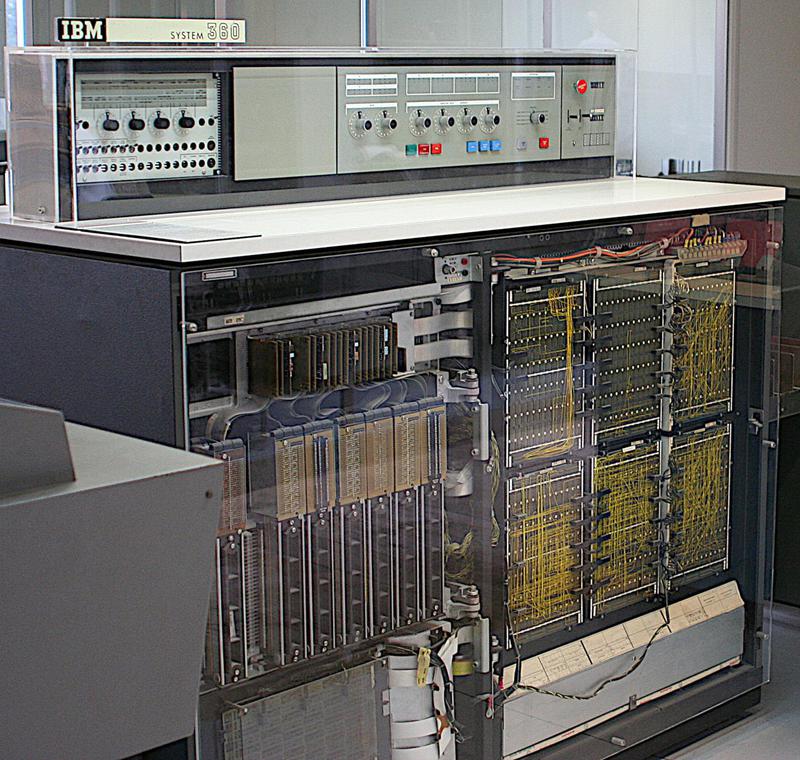

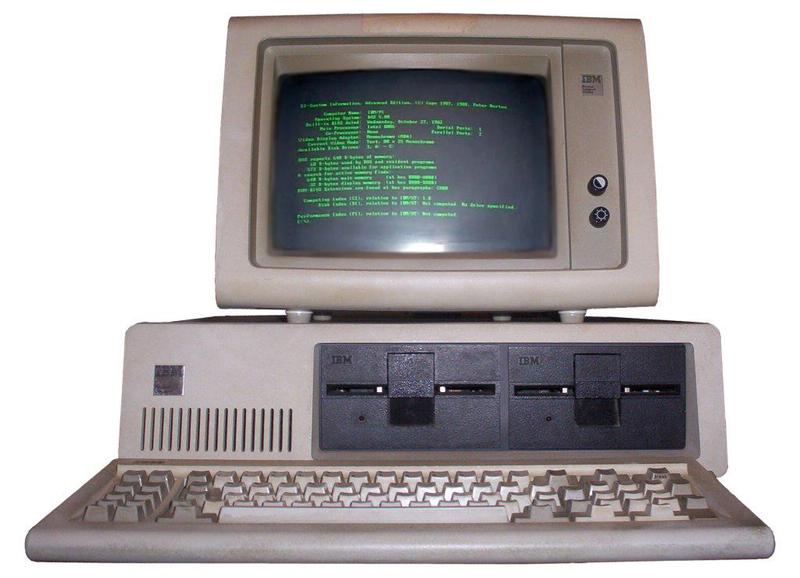

The TROS module I have was used on the low-end System/360 Model 20 computer, according to the label on it. The Model 20 was a slow, stripped-down system, lacking the full System/360 instruction set. Even so, its low cost ($1280 per month) made it the most popular System/360 model. The Model 20 contained 8 TROS modules, holding 6144 micro-instructions (3 micro-instructions per 60-bit word).3 These modules are visible on the left side of the computer below, mounted vertically. Note that the TROS modules take up a lot of space inside the computer.

IBM System/360 Model 20. TROS modules are on the left side. Photo from Ben Franske, CC BY 2.5.

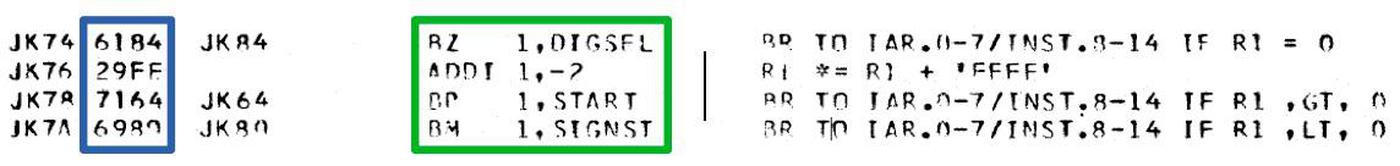

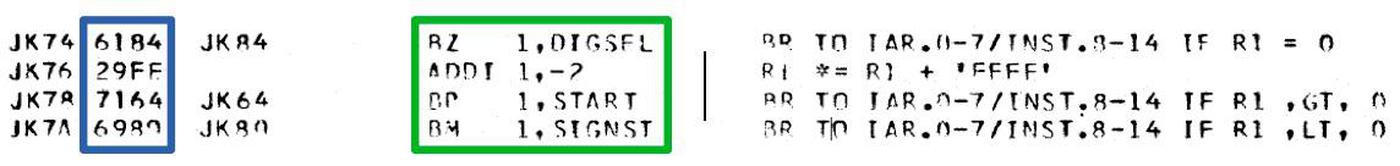

In case you're wondering what the Model 20 microcode looks like, a sample is below. The microcode itself (in hex) is highlighted in blue, with the mnemonic expansion in green. Comments are on the right. The Model 20's microcode is much simpler than the horizontal microcode in larger System/360 systems.4

Microcode from the System 360/20. The micro-operations in the code are "Branch if Zero", "Add Immediate", "Branch if Plus", and "Branch if Minus", all acting on register R1. From FEMDM vol 2.

Why microcode?

One of the hardest parts of computer design is creating the control logic that tells each part of the processor what to do to carry out each instruction. In 1951, Maurice Wilkes came up with the idea of microcode: instead of building the control logic from complex logic gate circuitry, the control logic could be replaced with code (i.e. microcode) stored in a special memory called a control store. To execute an instruction, the computer internally executes several simpler micro-instructions, which are specified by the microcode. With microcode, building the processor's control logic becomes a programming task instead of a logic design task.

However, in the 1950s, storage technologies weren't fast and inexpensive enough to make microcode practical. It wasn't until the IBM System/360 (1964) that commercial computers made significant use of microcode. Microcode played a key role in the success of the System/360, helping IBM produce a line of computers with the same instruction set architecture but widely different implementations. Microcode also simplified backward compatibility, helping the System/360 support instruction sets of older IBM systems.5
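To make the idea of microcode concrete, here is a toy sketch. A "control store" table maps each machine instruction to a sequence of simpler micro-operations, and a small loop executes them one at a time. The instruction names, micro-operations, and registers are all hypothetical and far simpler than any System/360 microcode. The point is only that editing the table changes what the machine does, without redesigning the datapath.

```python
# Hypothetical control store: each machine instruction maps to micro-operations.
CONTROL_STORE = {
    "LOAD":  ["mar<-addr", "mdr<-mem[mar]", "acc<-mdr"],
    "ADD":   ["mar<-addr", "mdr<-mem[mar]", "acc<-acc+mdr"],
    "STORE": ["mar<-addr", "mdr<-acc", "mem[mar]<-mdr"],
}

def run(program, memory):
    """Execute (opcode, address) pairs by stepping through their microcode."""
    regs = {"acc": 0, "mar": 0, "mdr": 0}
    for opcode, addr in program:
        for micro_op in CONTROL_STORE[opcode]:   # one micro-instruction at a time
            # This dispatch stands in for the fixed datapath hardware.
            if micro_op == "mar<-addr":
                regs["mar"] = addr
            elif micro_op == "mdr<-mem[mar]":
                regs["mdr"] = memory[regs["mar"]]
            elif micro_op == "acc<-mdr":
                regs["acc"] = regs["mdr"]
            elif micro_op == "acc<-acc+mdr":
                regs["acc"] += regs["mdr"]
            elif micro_op == "mdr<-acc":
                regs["mdr"] = regs["acc"]
            elif micro_op == "mem[mar]<-mdr":
                memory[regs["mar"]] = regs["mdr"]
            else:
                raise ValueError(f"unknown micro-operation {micro_op}")
    return memory

mem = {0: 2, 1: 3, 2: 0}
run([("LOAD", 0), ("ADD", 1), ("STORE", 2)], mem)
print(mem[2])   # 5
```

In this toy, adding an instruction means adding a table entry built from existing micro-operations. That is loosely analogous to extending a machine by changing its microcode rather than its wiring.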

IBM's various read-only storage techniques

IBM used several different read-only storage techniques to store microcode, for a combination of political and technical reasons. TROS was developed at IBM's Hursley site in England. This site started working on microcode because transistors were very expensive in England in the 1950s, and microcode could reduce the number of transistors required. Hursley developed a TROS for the SCAMP computer.6 This was followed by the TROS I've described, used on the System/360 Model 20 and Model 40, as well as the IBM 2841 file control unit.

A competing type of read-only storage is CCROS (Capacitive Coupled Read-Only Storage), which used Mylar sheets that functioned as a matrix of capacitors. An interesting feature of CCROS is that the Mylar sheets had the same size as an IBM punch card, so microcode could be programmed by punching holes in it with a standard keypunch. CCROS was developed at IBM's Endicott site. Because the System/360 Model 30 was developed there too, it used the locally-developed CCROS even though CCROS was slower and less reliable than TROS. Each CCROS card holds twelve 60-bit words. The Model 30 had 42 CCROS boards, each holding 8 cards, for a total of 4032 60-bit words.

Detail of a CCROS sheet. It is programmed by punching holes in it with a keypunch.

The high-performance Models 50, 65 and 67 required a faster control store, so they used a third technology, BCROS (Balanced Capacitor Read-Only Storage). Like CCROS, BCROS read bits by sensing capacitance, but BCROS used two capacitors for each bit (the Balanced Capacitors), which helped reduce noise and increased speed. The Mylar sheets for BCROS were 20″×8½″, much larger than the TROS and CCROS sheets. The data in BCROS was etched into the copper wiring (below), rather than by punching holes. Each bit is represented by two squares: one connected to the upper wire and one connected to the lower wire (or vice versa), forming the balanced capacitors. Each sheet plane held 176 words of 100 bits, and the system used 16 sheets to provide 2816 words.

Closeup of a BCROS sheet from a System/360 Model 50.
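Why do balanced capacitors reduce noise? Here is a minimal sketch under loud assumptions: I model each bit position as a pair of couplings to the upper and lower sense wires (the 1.0 and 0.1 values and the "a 1 favors the upper wire" polarity are hypothetical), and the sense amplifier as a comparison of the two wires. Interference picked up equally by both wires cancels in the difference, which is the essence of a balanced (differential) read. The constants also check the capacity figure from the text: 176 words per sheet times 16 sheets is 2816 words.

```python
import random
from typing import List, Tuple

WORDS_PER_SHEET = 176   # from the text
SHEETS = 16             # from the text
BITS_PER_WORD = 100     # from the text

def etch_bit(bit: int) -> Tuple[float, float]:
    """Hypothetical coupling of one bit position to the (upper, lower) sense wires.
    Assumed polarity: a 1 couples mostly to the upper wire, a 0 mostly to the lower wire."""
    return (1.0, 0.1) if bit else (0.1, 1.0)

def read_word(pads: List[Tuple[float, float]], noise: float, rng: random.Random) -> List[int]:
    """Differential read: compare the two wires, so noise common to both cancels out."""
    bits = []
    for upper, lower in pads:
        common = rng.uniform(-noise, noise)  # interference picked up equally by both wires
        v_upper, v_lower = upper + common, lower + common
        bits.append(1 if v_upper > v_lower else 0)
    return bits

rng = random.Random(360)
word = [rng.randint(0, 1) for _ in range(BITS_PER_WORD)]
pads = [etch_bit(b) for b in word]
print(read_word(pads, noise=5.0, rng=rng) == word)  # True, even with noise larger than the signal
print(WORDS_PER_SHEET * SHEETS)                     # 2816
```

A single-ended read of the same pads (for example, thresholding the upper wire alone at 0.5) would be swamped by noise of this size; real noise is of course not perfectly common to both wires, so this overstates the benefit.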

Instead of using special technology to store microcode, the low-end Model 25 held microcode in a 16-kilobyte section of core memory called Control Storage. In this model, different microcode was loaded from a card deck or tape to switch operating modes between System/360 and emulation of the legacy IBM 1400 series.

An important feature of these storage technologies is that the microcode could be easily updated at customer sites, by swapping the Mylar sheets (or card deck) holding the microcode. Many system bugs could be fixed inexpensively by changing the microcode. (In comparison, an "engineering change" on the older IBM 1401 typically required the engineer to modify wiring on the backplane, much more time-consuming and error-prone.) Microcode could also be upgraded if the customer purchased a new feature.

Comparison with core rope

TROS has some similarities with the core rope storage used by the Apollo Guidance Computer (AGC) to store programs, since both stored read-only data in the pattern of wires through cores. The tradeoffs were different between core rope and TROS. The AGC's core ropes were much more dense than TROS, an important feature for space flight. However, TROS could be easily changed by replacing the plastic tapes, while modifying a core rope required an expensive 8-week manufacturing process to wire up a new module.

Detail of core rope memory wiring from an early (Block I) Apollo Guidance Computer. Photo from Raytheon.

TROS and core rope are structurally the opposite, reversing the roles of word (address) lines and sense lines. TROS data depended on which word lines went through or around the transformer, while core rope data depended on which sense lines went through or around a core. To read a word in the AGC, one core was activated, while in TROS all of the transformers were (potentially) activated. Each transformer in TROS had one sense line and was associated with one output bit. In contrast, each core in the AGC's core rope had 192 sense lines and was associated with 12 words. (I've written more on core rope here).
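The structural difference can be sketched with two toy Python classes. In `TransformerROS`, each transformer is one output bit and the data is the set of word lines threaded through it; reading pulses one word line and checks every transformer. In `CoreRope`, each core serves a group of words and the data is the set of sense lines threaded through it; reading activates one core and checks its sense lines. The class names are hypothetical, and I use 16-bit words with 12 words per core so each core has 192 sense lines, matching the figures above. Electrical behavior, timing, and the AGC's real addressing are ignored.

```python
from typing import List, Set

class TransformerROS:
    """Toy TROS: one transformer per output bit; data = which word lines thread each transformer."""

    def __init__(self, words: List[int], bits: int):
        self.bits = bits
        # through[i] holds the word lines passing THROUGH transformer i; all others bypass it.
        self.through: List[Set[int]] = [
            {line for line, value in enumerate(words) if (value >> i) & 1}
            for i in range(bits)
        ]

    def read(self, word_line: int) -> int:
        # Pulsing one word line reaches every transformer; only those it threads produce a 1.
        return sum(1 << i for i in range(self.bits) if word_line in self.through[i])


class CoreRope:
    """Toy core rope: each core serves a group of words; data = which sense lines thread each core."""

    def __init__(self, words: List[int], bits: int, words_per_core: int):
        self.bits = bits
        self.words_per_core = words_per_core
        self.cores: List[Set[int]] = []
        for start in range(0, len(words), words_per_core):
            group = words[start:start + words_per_core]
            # Sense line number = slot * bits + bit; a 1 means that line passes through the core.
            self.cores.append({
                slot * bits + i
                for slot, value in enumerate(group)
                for i in range(bits)
                if (value >> i) & 1
            })

    def read(self, address: int) -> int:
        core = self.cores[address // self.words_per_core]  # activate exactly one core
        slot = address % self.words_per_core                # choose which of its words to sense
        return sum(1 << i for i in range(self.bits) if slot * self.bits + i in core)


words = [(a * 2654435761) & 0xFFFF for a in range(24)]  # arbitrary 16-bit test data
tros = TransformerROS(words, bits=16)
rope = CoreRope(words, bits=16, words_per_core=12)        # 12 words x 16 bits = 192 sense lines
print(all(tros.read(a) == words[a] == rope.read(a) for a in range(24)))  # True
```

Both structures return the same data; what differs is which wires carry the pattern, which is why a core rope packs more bits per core while a TROS tape can simply be swapped out.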

Conclusion

TROS and other read-only storage technologies were a key ingredient in the overwhelming success of the IBM System/360 because they made microcode practical. However, the arrival of cheap semiconductor ROMs in the 1970s obsoleted complex storage technologies such as TROS. Nowadays, most microprocessors still use microcode, but it's stored in ROM inside the chip instead of in sheets of Mylar. Microcode can now be patched by downloading a file, rather than replacing Mylar sheets inside the computer.7

The TROS module, showing the diode boards and the stack of 128 Mylar tapes.

I announce my latest blog posts on Twitter, so follow me @kenshirriff for future articles. I also have an RSS feed.

Notes and References

- The IBM System/360 was introduced in 1964. The date on this specific TROS module is May 27, 1970. ↩

- The diagram below illustrates how the matrix selection and diodes work. This diagram has been simplified to 2 drivers, 4 gates, and 8 word lines; the real system has 16 drivers, 16 gates, and 256 word lines. (What IBM calls a "gate" here is not a logic gate, but a current sink forming the other end of the circuit.) By energizing a particular driver and gate pair, a word line is selected. For instance, if driver 1 and gate 3 are energized, word line 3 is selected, as shown in red. Note that without the diodes, signals could go backward, incorrectly energizing multiple word lines.

Matrix selection of a word line. Energizing driver DR1 and gate G3 selects word line W3. Based on Model 40 Functional Units, p61.

↩

- The Model 20 used 22-bit microcode words, so how did this work with 60-bit TROS? The trick was that some microcode words were truncated to 16 bits, so each TROS word held three microcode words: two 22-bit words and one 16-bit word. In the Model 20's microcode, each word contained the address of the next microinstruction to execute. Since the truncated 16-bit word could only branch to a limited subset of next microinstructions, the microcode assembler had to carefully arrange the microcode so that micro-instructions requiring a longer branch were stored in one of the longer 22-bit words. ↩

- A 90-bit micro-instruction in the Model 50 could perform half a dozen different functions in parallel. For example, each yellow box below is a single micro-instruction that is part of floating-point multiplication. Each line in the box is a separate action; the micro-instruction can control the emitter, adder, shifter, mover, and local storage in parallel. The point is that the Model 50 was faster (in part) because it had multiple functional units, and the microcode needed to be much more complicated to control them.

Two micro-instructions (in yellow) in the System/360 Model 50. This is part of the microcode to handle exponent underflow and overflow during floating-point multiplication. The black lines show control flow. The text outside the boxes consists of comments. From Model 50 diagram QG702.

↩

- Most System/360 computers used microcode because it reduced cost, increased flexibility, and made development faster. IBM imposed a rule that System/360 computers had to be implemented in microcode unless there was a very good reason not to. The fastest models, though, used hardwired control circuitry to maximize performance. ↩

- Confusingly, IBM had two unrelated computers called SCAMP. The one using TROS is the Scientific Computer and Modulator Processor, a small computer developed at IBM Hursley for scientific applications, not the better-known prototype for the portable IBM 5100 (Special Computer APL Machine Portable). ↩

- Modern x86 chips have hardcoded microcode, along with some SRAM that holds microcode patches to fix processor flaws. The patches are downloaded into the processor by the BIOS (details) after each power-on. ↩
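The matrix selection described in the footnote on drivers and gates can be sketched in Python, assuming 1-based numbering in which driver 1 and gate 3 select word line 3, as in the simplified diagram. The function names are hypothetical. Without diodes, current from the energized driver can take sneak paths of the form driver, then another gate, then another driver, then the selected gate, with the middle hop running backward through a word line. In this crude connectivity model (which ignores how much current each path actually carries), those sneak paths touch every word line of the small 2×4 matrix.

```python
from itertools import product
from typing import Set

def select_word_line(driver: int, gate: int, num_gates: int) -> int:
    """Word line (1-based) selected by energizing a driver and a gate (assumed 1-based numbering)."""
    return (driver - 1) * num_gates + gate

def energized_lines(driver: int, gate: int, num_drivers: int, num_gates: int,
                    diodes: bool = True) -> Set[int]:
    """Word lines that carry some current, in a crude connectivity model."""
    lines = {select_word_line(driver, gate, num_gates)}
    if diodes:
        return lines  # diodes block reverse current, so only the selected line conducts
    # Without diodes: sneak path driver -> other gate -> other driver -> selected gate,
    # where the middle hop runs BACKWARD through word line (other_driver, other_gate).
    for other_driver, other_gate in product(range(1, num_drivers + 1), range(1, num_gates + 1)):
        if other_driver != driver and other_gate != gate:
            lines |= {
                select_word_line(driver, other_gate, num_gates),
                select_word_line(other_driver, other_gate, num_gates),
                select_word_line(other_driver, gate, num_gates),
            }
    return lines

print(select_word_line(1, 3, num_gates=4))                                      # 3
print(sorted(energized_lines(1, 3, num_drivers=2, num_gates=4)))                # [3]
print(sorted(energized_lines(1, 3, num_drivers=2, num_gates=4, diodes=False)))  # [1, 2, 3, 4, 5, 6, 7, 8]
print(len({select_word_line(d, g, 16) for d in range(1, 17) for g in range(1, 17)}))  # 256
```

The last line checks that the full-size arrangement of 16 drivers and 16 gates addresses 256 distinct word lines with only 32 selection circuits.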

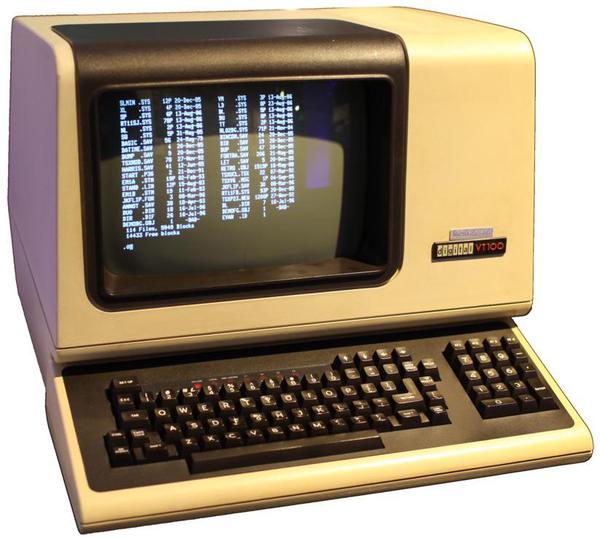

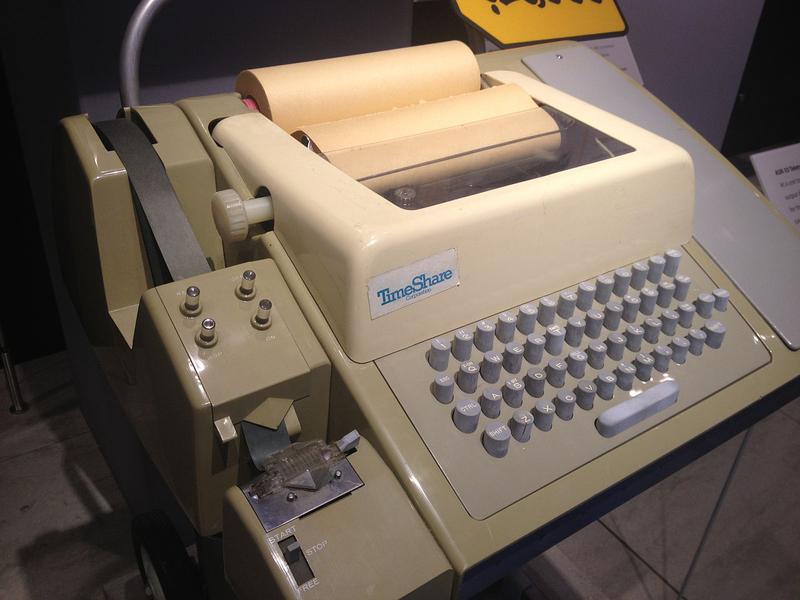

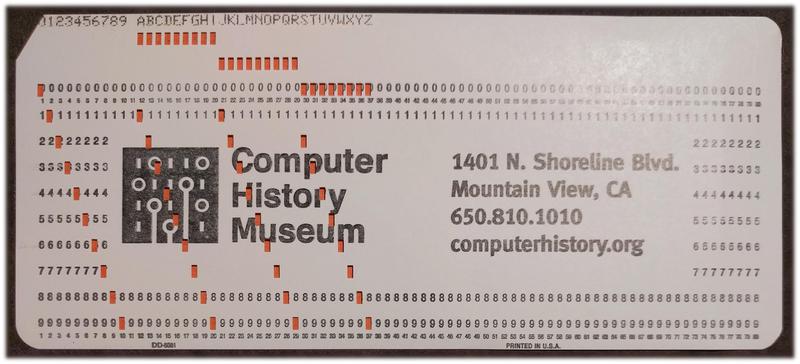

IBM, sonic delay lines, and the history of the 80×24 display

What explains the popularity of terminals with 80×24 and 80×25 displays? A recent blog post "80x25" motivated me to investigate this. The source of 80-column lines is clearly punch cards, as commonly claimed. But why 24 or 25 lines? There are many theories, but I found a simple answer: IBM, in particular its dominance of the terminal market. In 1971, IBM introduced a terminal with an 80×24 display (the 3270) and it soon became the best-selling terminal, forcing competing terminals to match its 80×24 size. The display for the IBM PC added one more line to its screen, making the 80×25 size standard in the PC world. The impact of these systems remains decades later: 80-character lines are still a standard, along with both 80×24 and 80×25 terminal windows.

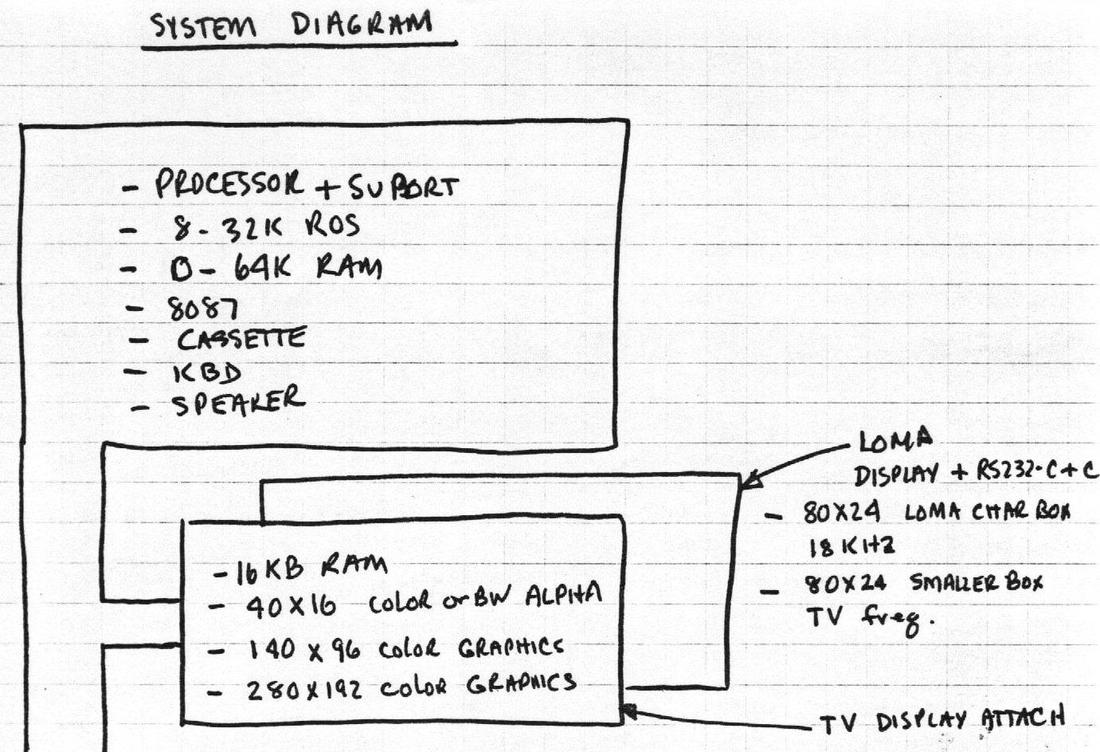

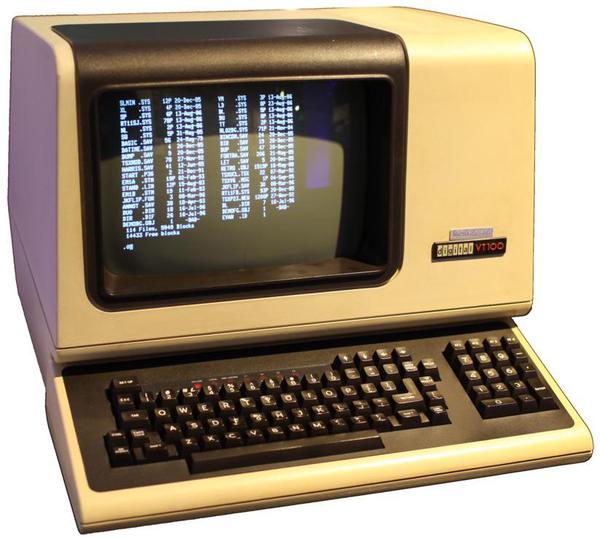

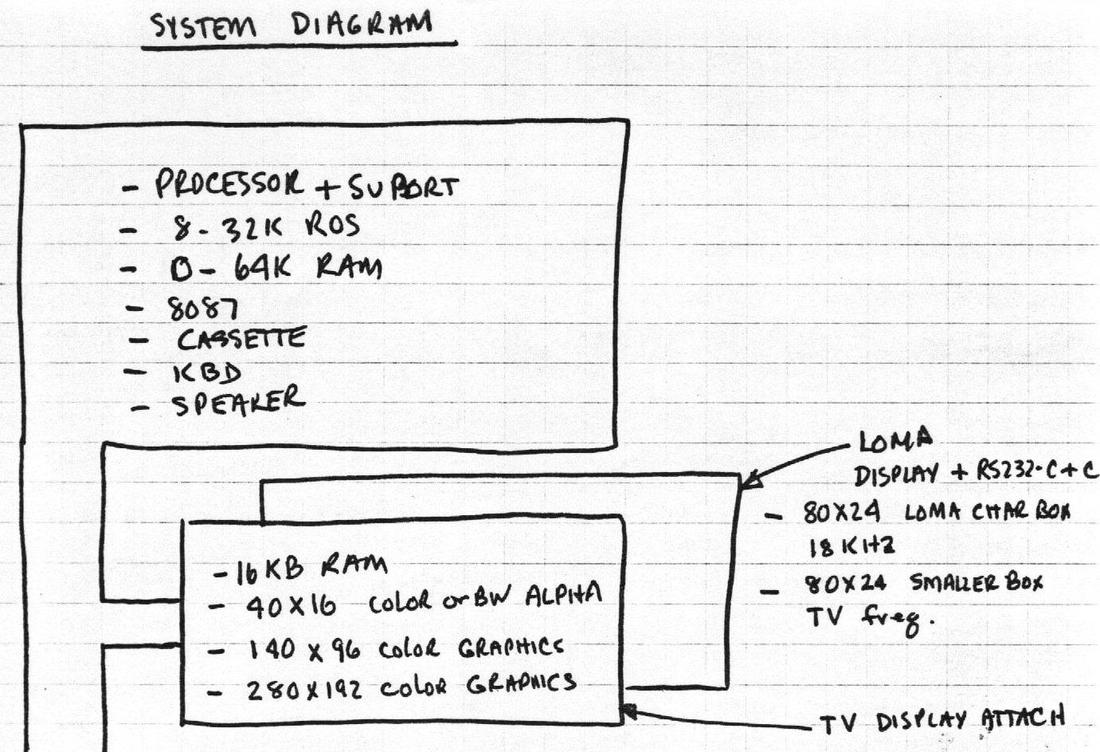

In this blog post, I'll discuss this history in detail, including some other systems that played key roles. The CRT terminal market essentially started with the IBM 2260 Display Station in 1965, built from curious technologies such as sonic delay lines. This led to the popular IBM 3270 display and then widespread, inexpensive terminals such as the DEC VT100. In 1981, IBM released a microcomputer called the DataMaster. While the DataMaster is mostly forgotten, it strongly influenced the IBM PC, including the display. This post also studies reports on the terminal market from the 1970s and 1980s; these make it clear that market forces, not technological forces, led to the popularity of various display sizes.

It's true that 80-column displays were motivated by punch cards4 and the VT100 became a standard,2 but the rest of this theory falls apart. The biggest problem with this theory is the VT100's display was 80×24, not 80×25.3 In addition, the VT100 used extra bytes of storage for each line, so the display memory did not fit into 2K. Finally, up until the 1980s, most displays were 80×24, not 80×25.

Other theories have been expressed on Software Engineering StackExchange and Retrocomputing StackExchange, arguing that 80×24 terminals resulted from technical reasons such as TV scan rates, aspect ratios, memory sizes, typography, the history of typewriters, and so forth. There is a fundamental problem with theories that 80×24 is an inevitable consequence of technology, though: terminals in the mid-1970s had dozens of diverse screen sizes such as 31×11, 42×24, 50×20, 52×48, 81×38, 100×50, and 133×64.11 This makes it clear that technological limitations didn't force terminals into a particular size. To the contrary, as technology improved, most of these sizes disappeared and terminals were largely 80×24 by the early 1980s. This illustrates that standardization was the key factor, not the technology.

I'll briefly summarize why technical factors don't have much impact on the terminal size. Although US televisions used 525 scan lines and 60 Hz refresh,9 40% of terminals used other values.6 The display frequency and bandwidth didn't motivate a particular display size because terminals generated characters with a wide variety of matrix sizes.8 Although memory cost was significant, DRAM chip sizes quadrupled every three years, making memory only a temporary constraint. The screen's aspect ratio wasn't a big factor because the text's aspect ratio often didn't match the screen's ratio.7 Of course technology had some influence, but it didn't stop early manufacturers from creating terminal sizes ranging from 32×8 to 133×64.

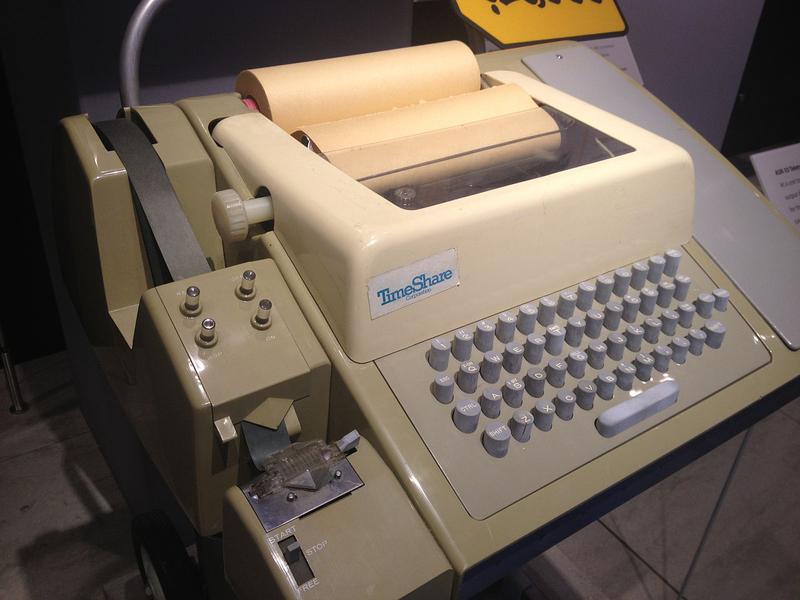

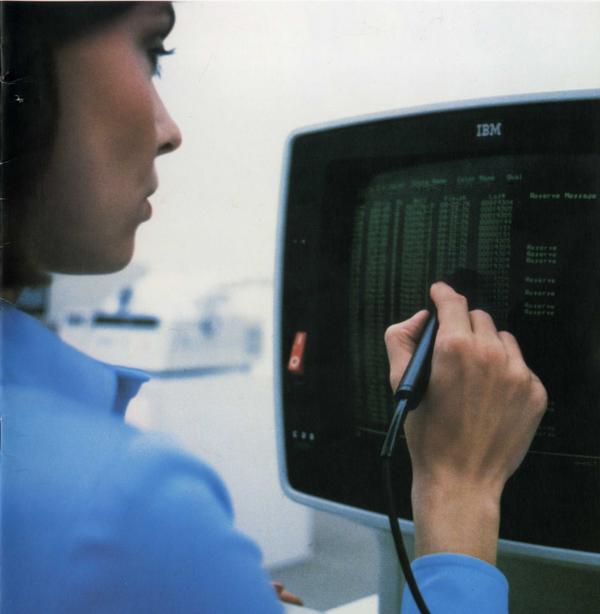

In the 1970s, replacing teleprinters with CRT terminals was a large and profitable market. AT&T introduced the Teletype Model 40 in 1973, a CRT terminal with an 80×24 display.12 Many other companies introduced competing CRT terminals, and "Teletype-compatible" became a market segment. By 1981,11 these terminals were being used in many roles besides replacing teleprinters and the name shifted to "ASCII terminals". By 1985, CRT terminals were a huge success with 10 million terminals installed in the US.

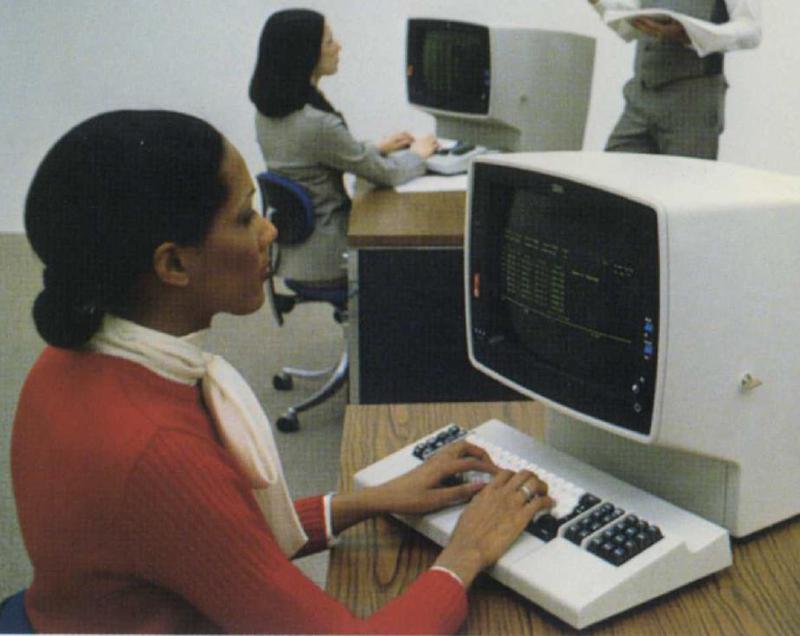

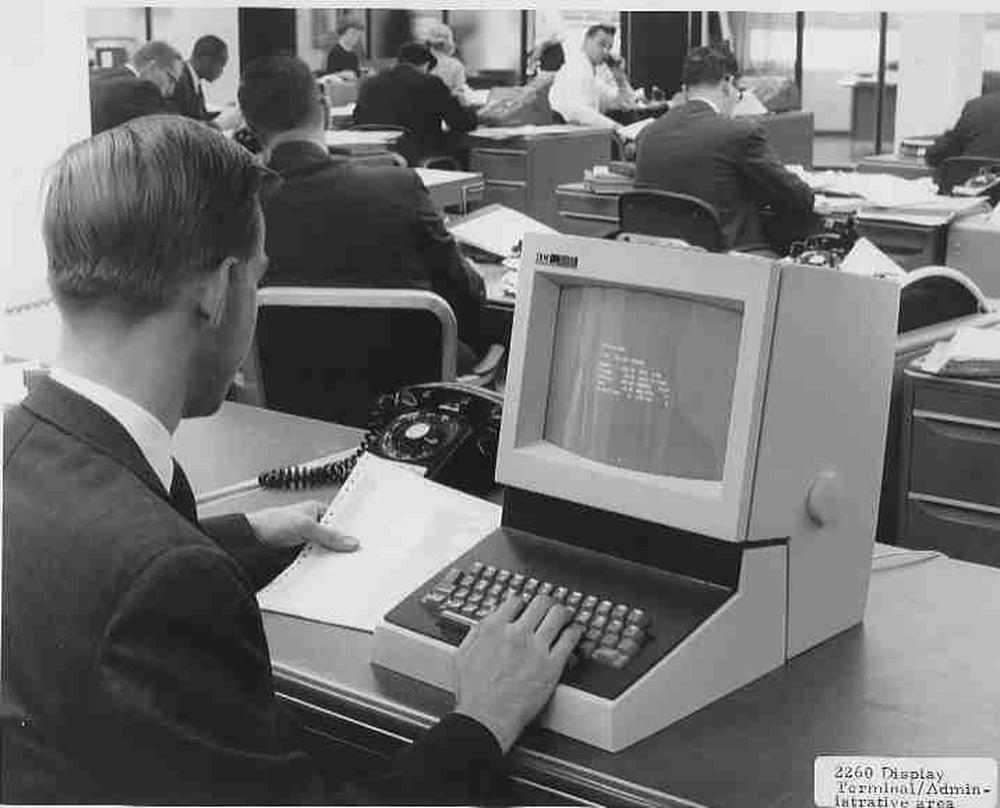

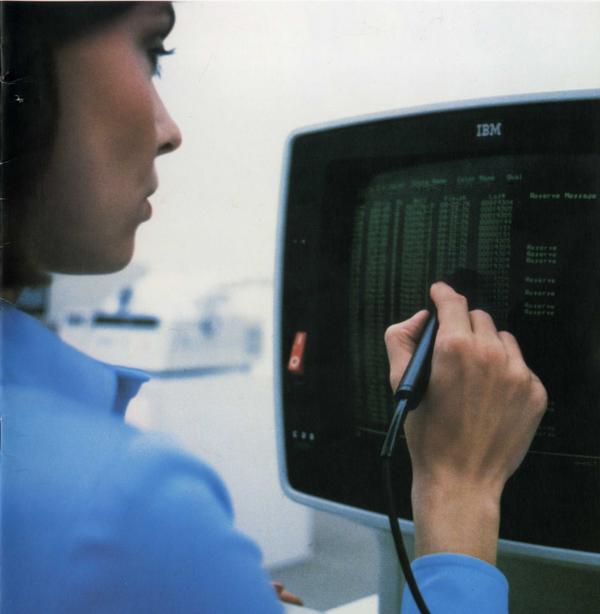

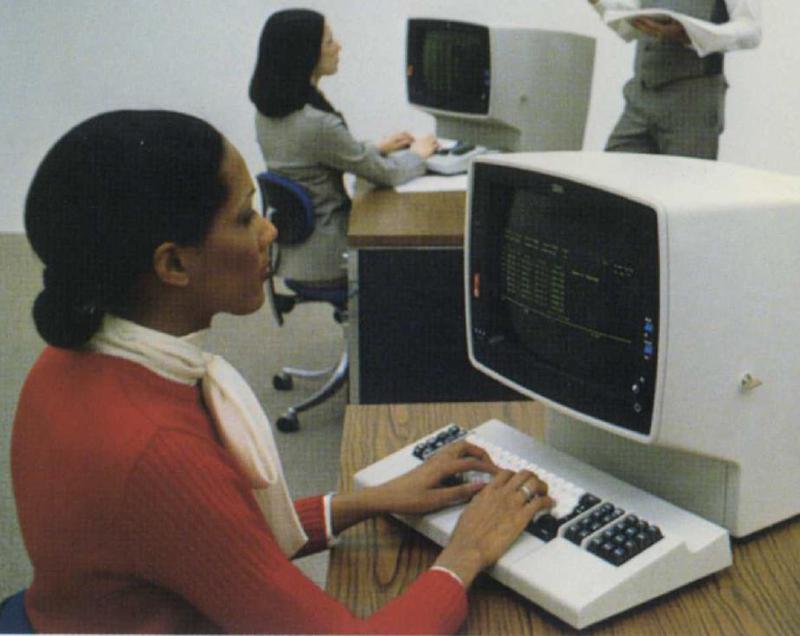

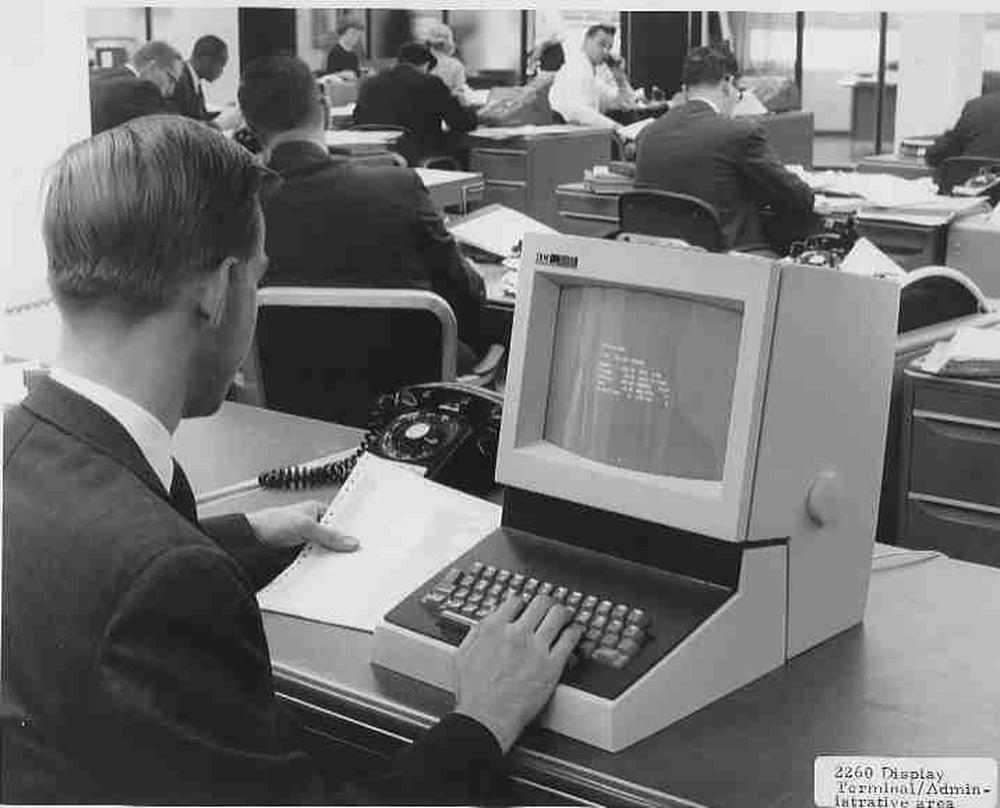

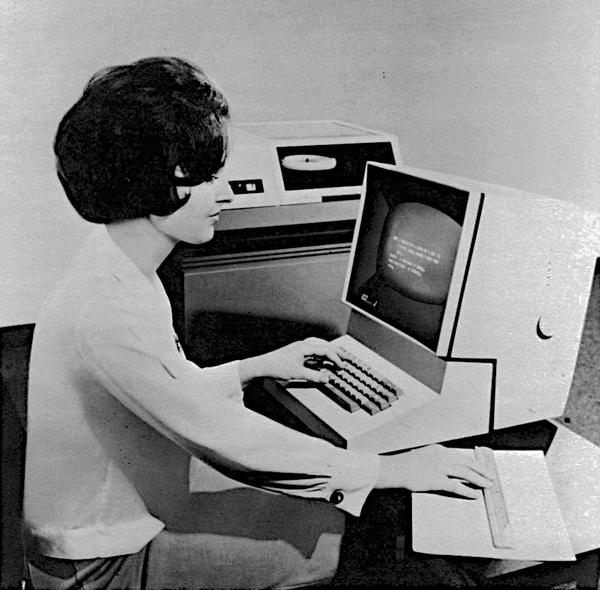

But there's a parallel world of mainframe terminals, a world that may be unfamiliar to many readers. In 1965, IBM introduced the IBM 2260 Display Station, which put IBM's "stamp of approval" on the CRT terminal, previously "somewhat of a novelty."6 This terminal dominated the market until IBM replaced it with the cheaper and more advanced IBM 3270 terminal in 1971. Unlike asynchronous ASCII terminals that transmitted individual keystrokes, these terminals were block oriented, efficiently exchanging large blocks of characters with a mainframe. The 3270 terminal was fairly "intelligent": a 3270 user could fill in labeled fields on the screen, and then transmit all the data at once by pressing the "Enter" key. (This is why modern keyboards often still have the "Enter" key.) Sending a block of data was more efficient than sending each keystroke to the computer, and allowed mainframes to support hundreds of terminals. In the next sections, I'll discuss the 2260 and 3270 terminals in detail.
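A rough way to see why block mode helped the mainframe is to count the transmissions the host must handle for one filled-in form. This is a hypothetical model, not the actual 3270 data stream: the Tab keystroke between fields on the character-mode terminal and the `name=value` encoding of the block are made up for illustration.

```python
from typing import Dict, List

def character_mode_messages(fields: Dict[str, str]) -> List[str]:
    """Keystroke-at-a-time terminal: every key press is a separate transmission to the host."""
    messages: List[str] = []
    for index, value in enumerate(fields.values()):
        if index > 0:
            messages.append("\t")  # hypothetical Tab keystroke to move to the next field
        messages.extend(value)     # one transmission per typed character
    messages.append("\r")          # the final Enter keystroke
    return messages

def block_mode_messages(fields: Dict[str, str]) -> List[str]:
    """Block-oriented terminal: fields are edited locally, then Enter sends them all at once.
    The name=value encoding is made up; it is not the real 3270 data stream."""
    return [";".join(f"{name}={value}" for name, value in fields.items())]

form = {"NAME": "SMITH", "ACCOUNT": "12345"}
print(len(character_mode_messages(form)))  # 12
print(len(block_mode_messages(form)))      # 1
```

For this small form, the host fields 12 interruptions in character mode (ten characters, one Tab, and Enter) but only one in block mode; multiplied across hundreds of terminals, that difference is what let a mainframe keep up.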

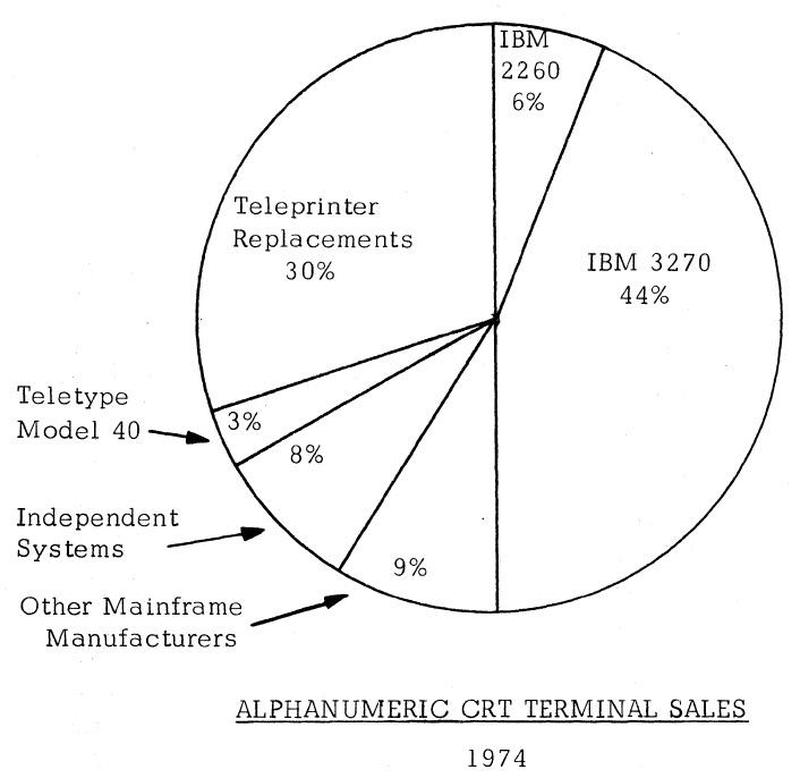

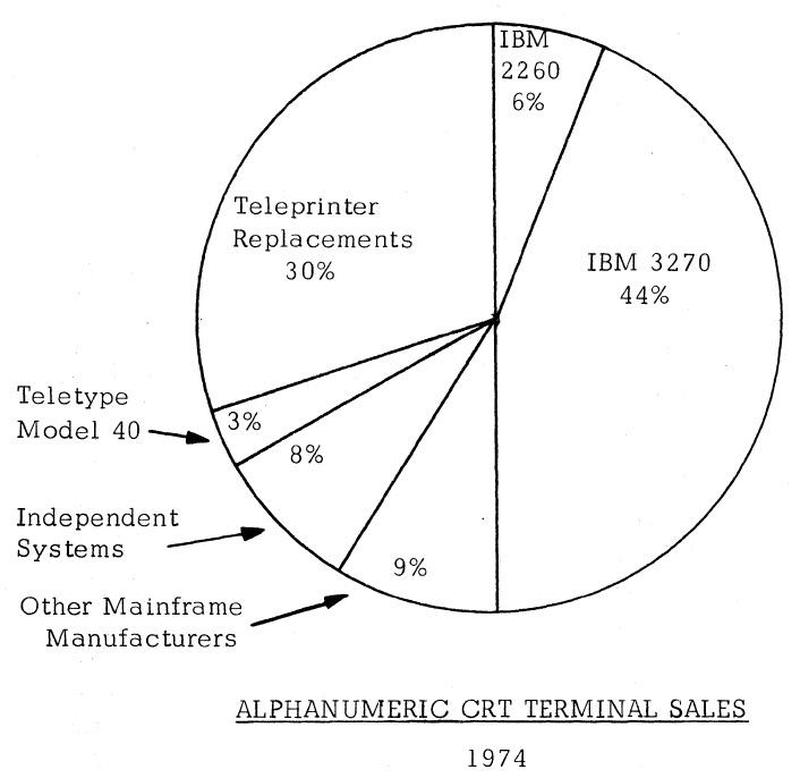

The chart below6 shows how the terminal market looked in 1974. The market was ruled by IBM's 3270 terminal, which had obsoleted IBM's 2260 terminal by this point. With 50% of the market, IBM essentially defined the characteristics of a CRT terminal. Teleprinter replacement was a large and influential market; the Teletype Model 40 was small but growing in importance. Although DEC would soon be a major player, it was in the small "Independent Systems" slice at this point.

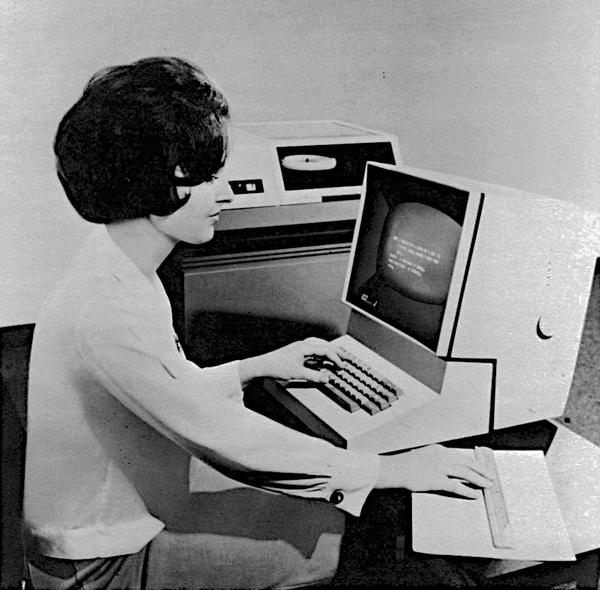

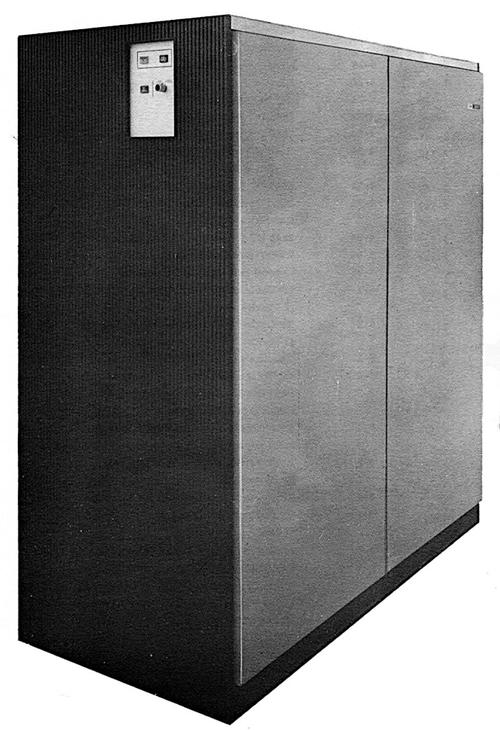

You might wonder how IBM could produce such a compact terminal with 1965 technology. The trick was that the terminal held just the keyboard and CRT display; all the control logic, character generation, storage, and interfacing were in a massive 1000-pound cabinet (below).15 This cabinet contained the circuitry to handle up to 24 display terminals. It generated the pixels for these terminals and sent video signals to the terminals, which could be up to 2000 feet away.

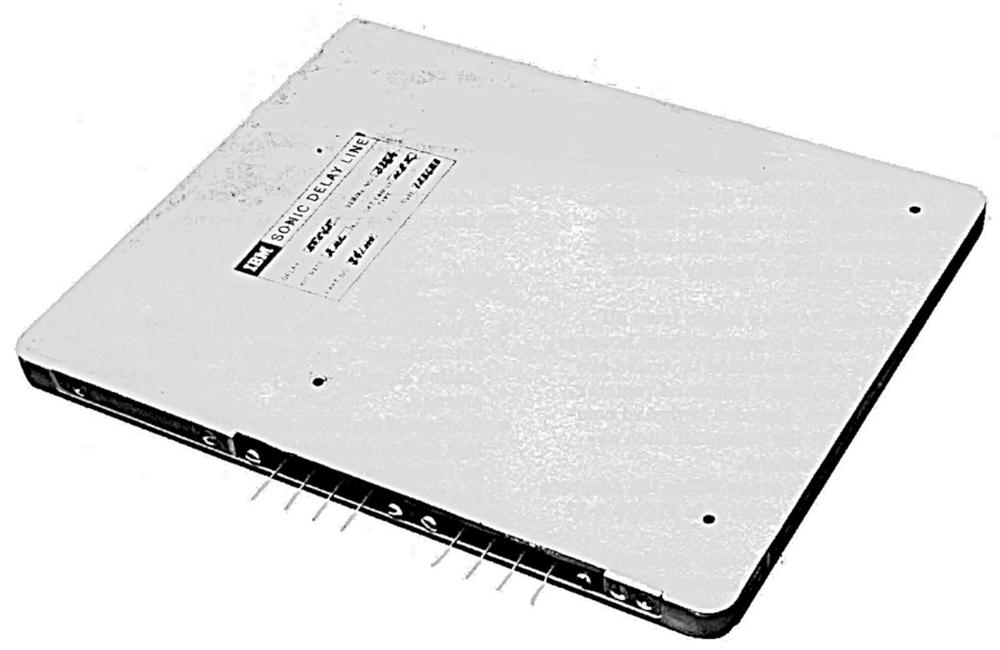

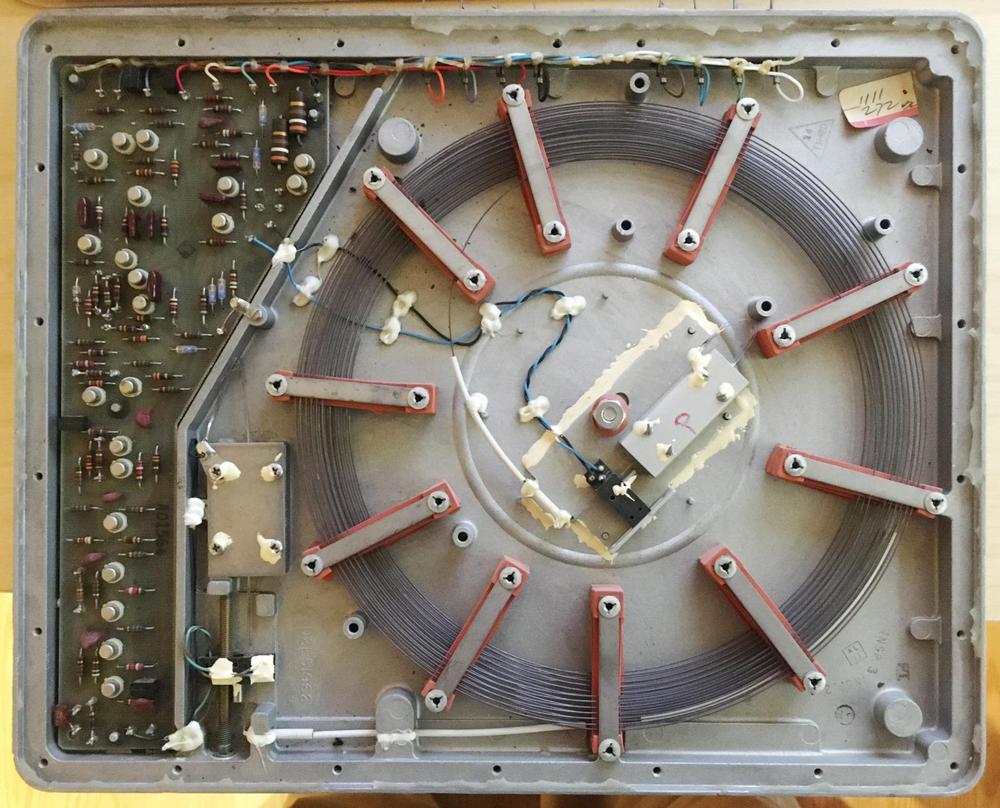

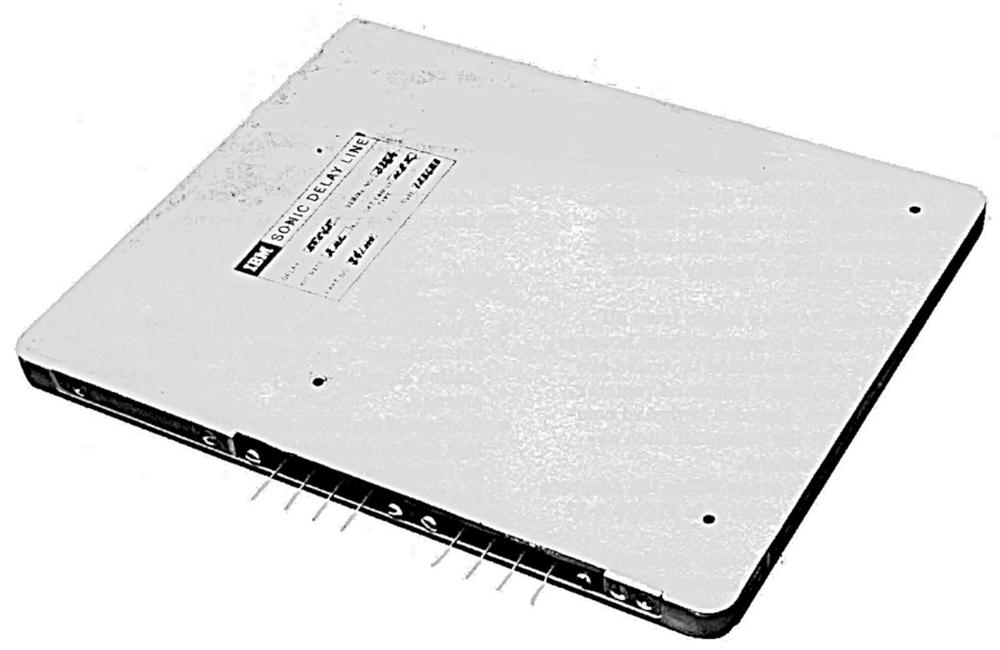

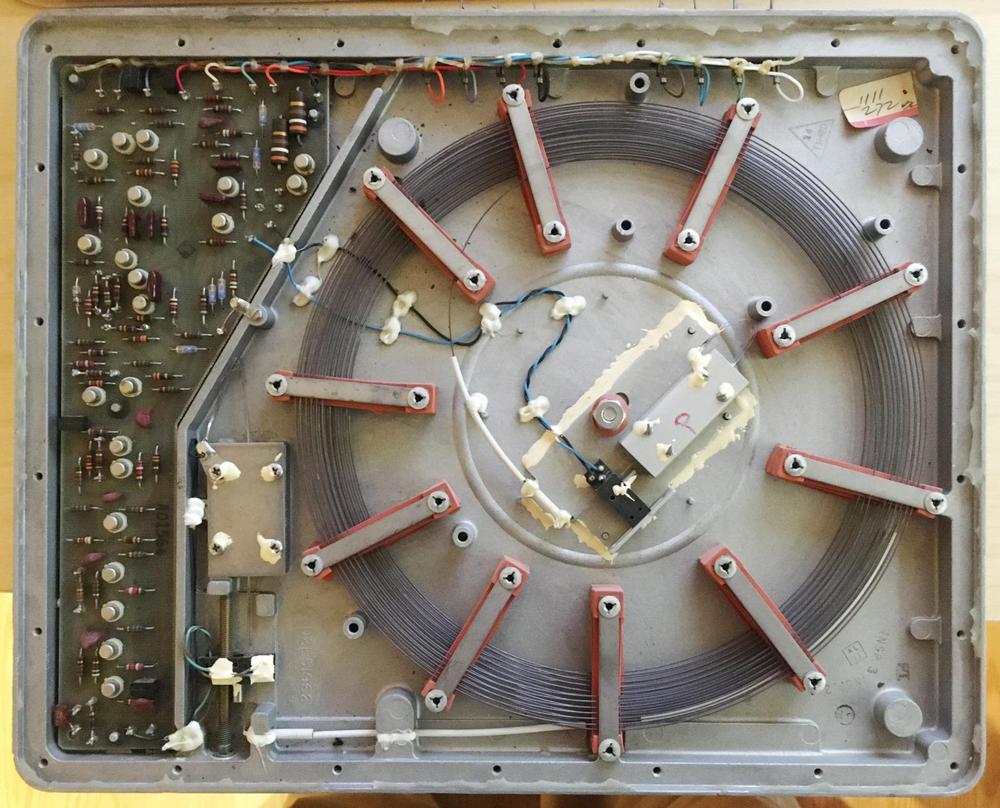

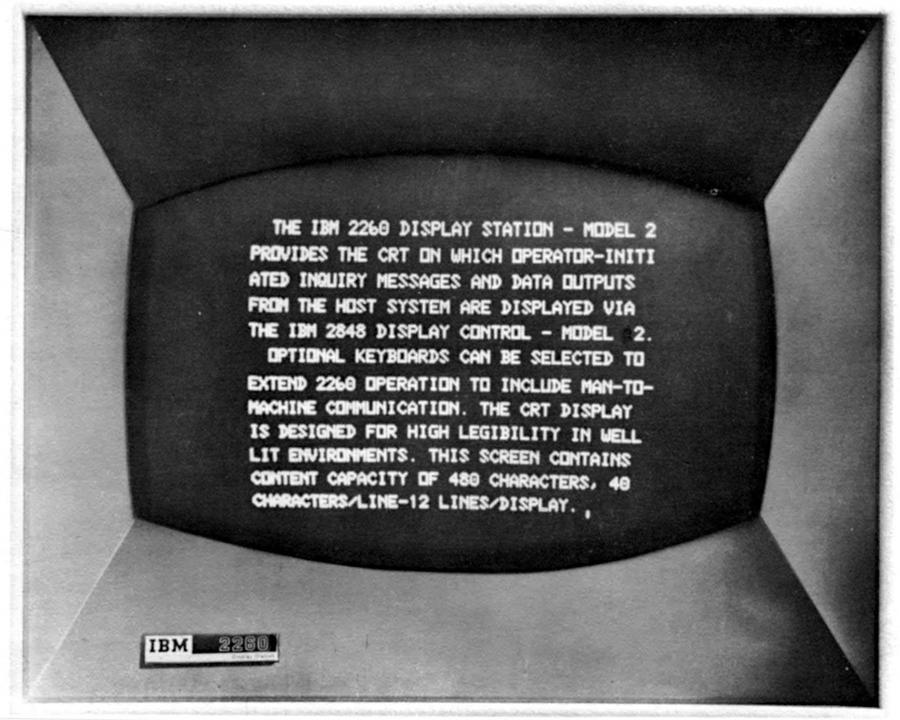

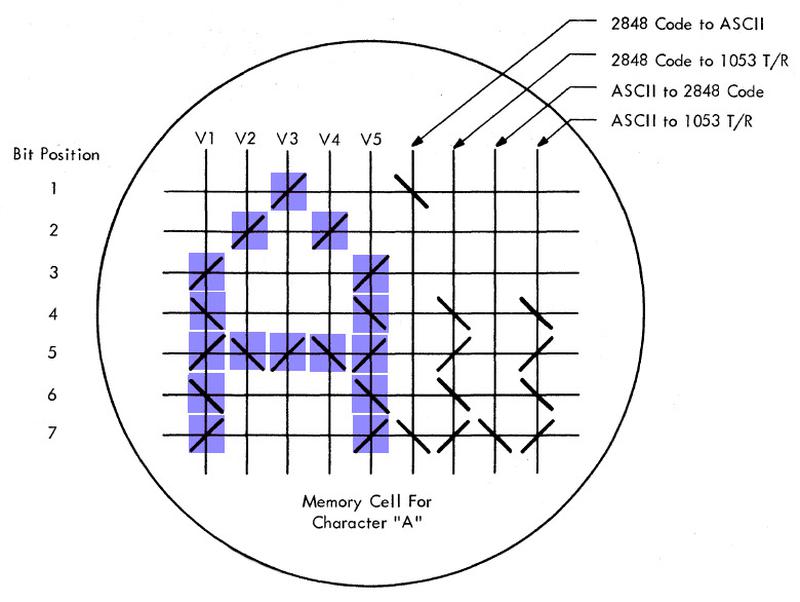

One of the most interesting features of the 2260 is the sonic delay lines used for pixel storage. Bits were stored as sound pulses sent into a nickel wire, about 50 feet long. The pulses traveled through the wire and came out the other end exactly 5.5545 milliseconds later. By sending a pulse (or not sending a pulse for a 0) every 500 nanoseconds, the wire held 11,008 bits. A pair of wires created a buffer that held the pixels for 480 characters.16
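As a quick consistency check, dividing the delay by the pulse spacing gives the number of bit slots in flight. With exactly 500 ns per bit, 5.5545 ms comes to about 11,109 slots, slightly more than the 11,008 bits quoted; the two figures agree only if the spacing is about 504.6 ns. My guess, which I haven't verified, is that the 500 ns figure is rounded.

```python
DELAY_S = 5.5545e-3     # transit time through the wire, from the text
BIT_PERIOD_S = 500e-9   # pulse spacing, from the text
QUOTED_BITS = 11_008    # capacity of one wire, from the text

slots_at_500ns = DELAY_S / BIT_PERIOD_S          # pulses in flight if spacing were exactly 500 ns
implied_period_ns = DELAY_S / QUOTED_BITS * 1e9  # spacing that makes the quoted figures agree

print(f"slots at exactly 500 ns: {slots_at_500ns:.0f}")                  # 11109
print(f"bit period implied by 11,008 bits: {implied_period_ns:.1f} ns")  # 504.6 ns
```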

The sonic delay line had several problems. First, you had to constantly refresh the data: as bits came out one end of the wire, you had to feed them back in the other end. Second, the delay line was not random access: if you wanted to update a character, you needed to wait several milliseconds for those bits to circulate. Third, the delay line was sensitive to vibration; Wikipedia says that heavy footsteps could mess up the screen. Fourth, the delay line speed was sensitive to temperature changes; it needed to warm up for two hours in a temperature-controlled cabinet before use. With all these disadvantages, you might wonder why sonic delay lines were used. The main reason was they were much cheaper than core memory. The serial nature of a delay line was also a good match to the serial nature of a raster-scan display.
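The first two problems can be illustrated with a toy Python model of a recirculating delay line, using a `deque` as the wire. This is a hypothetical sketch, not a description of the 2260's circuitry: on every tick one bit emerges and is fed back in (the refresh), and a bit can only be read or replaced at the moment it emerges, so access time depends on where that bit currently is in the loop. With 11,008 bits and a pulse roughly every 500 ns, the worst-case wait would be about one full circulation, roughly 5.5 ms.

```python
from collections import deque
from typing import Optional

class DelayLine:
    """Toy recirculating delay line: bits emerge one per tick and must be fed back in."""

    def __init__(self, length: int):
        self.bits = deque([0] * length)  # bits[0] is the bit about to emerge from the wire
        self.position = 0                # address of the bit about to emerge

    def tick(self, new_value: Optional[int] = None) -> int:
        """Advance one bit-time: the emerging bit is re-injected (refresh) or replaced."""
        bit = self.bits.popleft()
        self.bits.append(bit if new_value is None else new_value)
        self.position = (self.position + 1) % len(self.bits)
        return bit

    def _wait_for(self, address: int) -> int:
        """Circulate, refreshing as we go, until `address` is about to emerge; return ticks waited."""
        waited = 0
        while self.position != address:
            self.tick()
            waited += 1
        return waited

    def write(self, address: int, value: int) -> int:
        """Replace one bit; return how many bit-times we had to wait for it."""
        waited = self._wait_for(address)
        self.tick(new_value=value)
        return waited

    def read(self, address: int) -> int:
        self._wait_for(address)
        return self.tick()

line = DelayLine(16)
print(line.write(10, 1))                          # 10 bit-times spent waiting
print(line.write(3, 1))                           # 8: address 3 had to come back around
print(line.read(10), line.read(3), line.read(0))  # 1 1 0
```

The same loop that makes random access slow is a natural fit for a raster display: the video circuit simply reads the bits in the order they emerge.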

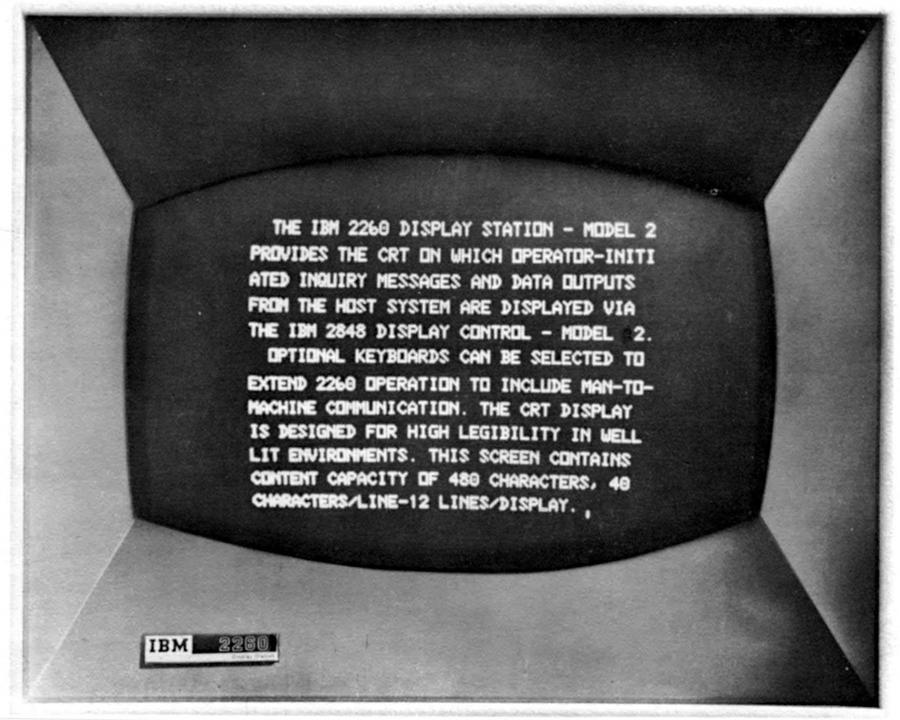

The image below shows the screen of the 2260 Model 2, with 12 lines of 40 characters. (The Model 1 had 6 lines of 40 characters and the Model 3 had 12 lines of 80 characters.) Notice that the lines are double-spaced; this is because the control unit actually generated 24 lines of text but alternating lines went to two different terminals.20 This is a very strange approach, but it split the high cost of the control hardware across two terminals.19 Another strange characteristic was that the 2260's scan lines were vertical, unlike the horizontal scan lines in almost every video display and television.21
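My reading of the double spacing, sketched in Python: the control unit produces 24 text rows, and each terminal shows only every other row, leaving the remaining rows blank because their video went to the other terminal. The function name is hypothetical, and the model ignores timing and the vertical scan direction.

```python
from typing import List

def screen_rows(generated: List[str], terminal: int) -> List[str]:
    """Rows seen on one of two terminals sharing the control logic (terminal 0 or 1).
    Hypothetical model: even rows go to terminal 0, odd rows to terminal 1; the rest stay blank."""
    return [text if row % 2 == terminal else "" for row, text in enumerate(generated)]

generated = [f"LINE {row:02d}" for row in range(24)]  # 24 rows produced by the control unit
first = screen_rows(generated, terminal=0)
second = screen_rows(generated, terminal=1)
print(first[:4])                        # ['LINE 00', '', 'LINE 02', '']  (double-spaced)
print(sum(1 for row in second if row))  # 12 visible lines
```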

Each character was represented in 6-bit EBCDIC, giving a character set of 64 characters (no lower-case).18 The delay lines stored the pixels to be displayed, but they also stored the EBCDIC code for each character. The trick here is the blank column of pixels between each character for horizontal spacing between characters. The system used this column to store the BCD character value but blanked the display during this column so the BCD value didn't show up as pixels on the screen. This allowed the 6-bit character value to be stored essentially for free.
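Here is a toy version of that trick, under hypothetical assumptions: a character cell of 5 pixel columns by 7 rows plus one spacing column (the text doesn't give the 2260's actual cell size), stored column by column since the scan lines were vertical. The 6-bit code rides in the spacing column, and the display path blanks that column, so the code costs no extra storage and never lights a pixel.

```python
from typing import List

ROWS = 7        # hypothetical character height in pixels
GLYPH_COLS = 5  # hypothetical glyph width; one blank spacing column follows

Column = List[int]

def encode_cell(glyph: List[Column], code: int) -> List[Column]:
    """Columns stored in the delay line for one character: the glyph, then a spacing
    column whose pixel positions carry the 6-bit character code (low bit first)."""
    assert len(glyph) == GLYPH_COLS and 0 <= code < 64
    spacing = [(code >> bit) & 1 for bit in range(6)] + [0] * (ROWS - 6)
    return [list(col) for col in glyph] + [spacing]

def displayed_columns(cell: List[Column]) -> List[Column]:
    """Video output: the spacing column is blanked, so the stored code never shows up."""
    return cell[:GLYPH_COLS] + [[0] * ROWS]

def stored_code(cell: List[Column]) -> int:
    """Recover the 6-bit code from the spacing column."""
    return sum(bit << i for i, bit in enumerate(cell[GLYPH_COLS][:6]))

# A crude "H": two full-height strokes joined by a crossbar in the middle row.
H = [[1] * ROWS, [0, 0, 0, 1, 0, 0, 0], [0, 0, 0, 1, 0, 0, 0], [0, 0, 0, 1, 0, 0, 0], [1] * ROWS]
cell = encode_cell(H, code=0b101101)
print(stored_code(cell))            # 45
print(displayed_columns(cell)[-1])  # [0, 0, 0, 0, 0, 0, 0]
```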

The relevant question is why did the 2260 have a display with 12 lines of 80 characters?23,24 The 80-character width allowed the terminals to take the place of 80-column punch cards for data entry. (In the 40-character models, a card would be split across two lines.) As for the 12 lines, that appears to be what the delay lines could support without flicker.22